Legacy System Integration with AI Technologies

Understanding AI Integration

Legacy systems, those older computer programs and equipment still running critical business operations, often clash with modern Artificial Intelligence technologies. Picture trying to connect your grandpa's record player to Spotify.

That's the challenge many businesses face today. As Reuben "Reu" Smith, founder of WorkflowGuide.com and AI automation strategist, has directly observed in more than 750 workflow implementations, these outdated systems hold valuable data but lack modern capabilities.

Only 12% of organizations report having data quality good enough for AI implementation. The gap between old and new creates real headaches for tech leaders and business owners alike.

Why bother with this digital marriage? Because legacy systems contain years of business intelligence too valuable to discard. Financial institutions using edge computing with AI have reported operational efficiency gains of up to 20%.

Companies like Keller Williams have successfully bridged this gap using middleware and APIs, typically within 6-12 weeks. The process isn't simple though. Challenges range from outdated infrastructure and poor data quality to budget constraints and regulatory hurdles like GDPR and HIPAA compliance.

This article maps out practical strategies for connecting your legacy systems with AI without starting from scratch. We explore real-world success stories, such as AI-powered patient scheduling that transformed healthcare operations. You will learn how to assess your current systems, prepare your data, and implement AI in phases for maximum impact. Companies with clear success metrics are 50% more likely to create effective AI projects that align with business goals.

Ready for some digital renovation?

Key Takeaways

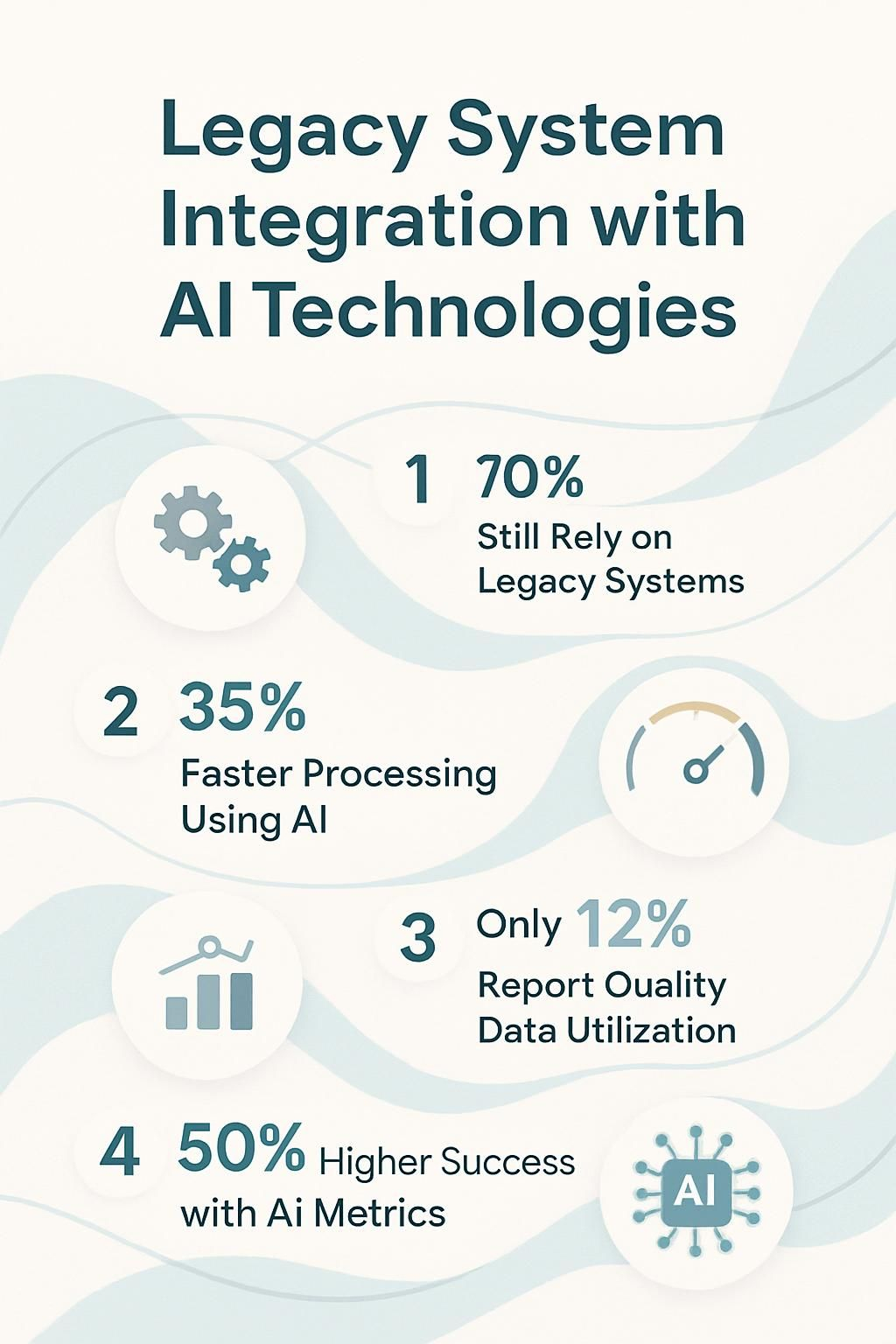

- About 70% of companies still rely on legacy systems that struggle to adapt to modern needs but can be enhanced with AI integration.

- Companies report up to 35% faster processing times after implementing AI-assisted workflows, with some seeing system capacity triple without hardware upgrades.

- Only 12% of organizations have sufficient data quality for successful AI implementation, making data cleaning a critical first step.

- Organizations that define clear metrics for AI projects are 50% more likely to implement AI effectively, so map AI opportunities to specific business goals.

- Most middleware solutions take just 6-12 weeks to implement, offering a quick path to connect legacy systems with modern AI capabilities.

Why Integrate AI into Legacy Systems?

Your legacy systems hold untapped potential that AI can transform into competitive advantages. Old tech doesn't mean obsolete - it means opportunity for smart businesses ready to breathe new life into existing infrastructure through Artificial Intelligence integration.

Addressing system inefficiencies

Legacy systems often cough and sputter like my old Nintendo that needs the cartridge blown out before it works. These aging systems create bottlenecks that slow down operations, waste resources, and frustrate everyone involved.

Most legacy platforms struggle with basic tasks that modern systems handle in seconds. I have seen companies where staff manually re-enter data between systems, spending hours on work a good API could fix in milliseconds.

The fragmented data across these outdated systems creates a perfect storm of inefficiency, making it nearly impossible to get a complete picture of your business operations.

Integrating AI into legacy systems isn't about replacing the old with the shiny new toy. It's about teaching your reliable old workhorse some impressive new tricks.

AI technologies can target these pain points with surgical precision. They automate repetitive tasks, spot patterns humans miss, and process massive datasets at lightning speed. Middleware solutions bridge the gap between your legacy systems and modern AI capabilities without requiring a complete system overhaul.

Cloud infrastructure provides the computing power needed for AI applications while pilot testing lets you prove value before full-scale implementation. The real magic happens when machine learning algorithms start finding inefficiencies you never even knew existed.

Next, we explore how AI integration enhances scalability and performance beyond just fixing what's broken.

Enhancing scalability and performance

Legacy systems often buckle under modern workloads like a vintage car trying to win a NASCAR race. AI integration supercharges these older systems with much-needed performance boosts.

Companies report up to 35% faster processing times after implementing AI-assisted workflows into their existing infrastructure. The magic happens when AI takes over repetitive tasks that once bogged down your systems.

Your databases breathe easier, your applications run smoother, and your IT team stops pulling all-nighters just to keep things running.

Scalability transforms from pipe dream to reality with strategic AI deployment. Most legacy setups hit their ceiling during growth phases, forcing painful choices between stability and expansion.

AI bridges this gap through smart resource allocation and predictive scaling. One manufacturing client at WorkflowGuide saw their system capacity triple without hardware upgrades after implementing machine learning algorithms to optimize database queries.

The numbers do not lie: organizations that build future-proof infrastructure through AI integration handle four times more transactions while maintaining the same response times. Your systems grow with your business instead of holding it back, which beats explaining to customers why your website crashes every time you run a successful promotion.

Staying competitive in a tech-driven market

While enhancing scalability boosts your system's capacity, the technology landscape moves at a rapid pace. Companies that stick with outdated systems fall behind faster than a Windows 95 machine trying to run modern software.

Your competitors are adopting AI technologies that transform customer experiences and streamline operations daily. According to our research, businesses integrating AI with legacy systems report a significant competitive advantage in market responsiveness and innovation capacity.

The digital transformation race isn't optional anymore. It is survival. Think of AI integration like upgrading from a flip phone to a smartphone. You don't just gain a better calling device; you gain a pocket computer that changes how you work.

Similarly, AI does not just patch your legacy systems; it supercharges them with predictive analytics, automation, and machine learning capabilities. This hybrid architecture approach combines your stable legacy foundation with modern AI flexibility, creating a tech ecosystem that can adapt to market shifts quickly.

Your business stays relevant by strategically enhancing what works with targeted AI solutions that deliver measurable business outcomes.


Key Challenges of Legacy System Integration

Legacy systems present major roadblocks when companies try to add AI capabilities. Old tech stacks often clash with modern AI requirements, creating integration headaches that can derail digital transformation efforts.

Outdated infrastructure and compatibility issues

Your legacy systems sit like that ancient desktop in your garage, running Windows XP and wheezing through basic tasks. These outdated systems lack the computational muscle needed for modern AI models.

I have seen companies try to bolt AI onto 15-year-old infrastructure with the same success rate as fitting square pegs into round holes. The compatibility gaps with current APIs create a digital Tower of Babel where your systems speak COBOL while new tools communicate in Python and JavaScript.

This mismatch does more than slow things down; it breaks functionality in unpredictable ways.

The technical debt piles up faster than unwashed dishes at a college apartment. Most legacy systems struggle with integration challenges that make interoperability nearly impossible without custom bridges.

Maintenance costs balloon to three to four times what modern alternatives would require, draining resources that could fuel growth. Data quality issues compound the problem, with missing records and duplicates making AI training as effective as teaching calculus to a goldfish.

Companies must tackle software compatibility hurdles before competitors zoom past while your systems remain misaligned.

Data silos and accessibility barriers

Data silos lurk in most legacy systems like creatures in a dungeon, trapping valuable information in isolated pockets where nobody can access it. I have seen companies with customer data spread across five different systems, none of which communicate with each other.

These barriers block the free flow of information and hide critical insights that could drive business decisions. The statistics show that 88% of organizations lack sufficient data quality for effective AI implementation.

Your legacy databases might store treasure troves of information in outdated formats that modern AI tools cannot easily digest.

Regulatory requirements often worsen these problems. GDPR and HIPAA regulations force companies to lock down certain data types, creating even more silos. Breaking down these walls requires a strategic approach to data governance.

At WorkflowGuide, teams have mapped siloed information landscapes and built secure bridges between systems. This effort transforms fragmented data into a unified resource that AI can actually use.

Organizations that solve these accessibility challenges gain a serious competitive edge in today's data-driven marketplace.

High costs and resource constraints

Modernizing legacy systems with AI is not just a technical headache; it is a financial challenge. Outdated architecture creates a perfect storm of expenses that can cause even the most tech-enthusiastic business leader to wince.

Companies face a triple whammy of hardware upgrades, software licensing, and specialized talent acquisition costs. Clients often compare their legacy systems to vintage cars—expensive to maintain but impossible to part with.

The reality is that these systems demand extra resources for data cleansing before AI can work its magic, turning what should be a sprint into a marathon with hurdles.

Budget limitations hit small businesses particularly hard when addressing performance bottlenecks. Your legacy system might chug along like a 90s computer running modern software, requiring significant computing power upgrades.

One local business owner said, "I wanted AI, but my server room needed a complete overhaul first." This resource squeeze forces tough choices between immediate operational needs and long-term tech investments.

The next critical step involves evaluating your current system to identify specific limitations before proceeding with integration.

Evaluating Your Legacy System Before Integration

Before adding AI to your old system, you need to know what you're working with - like checking if your vintage car can handle a new engine before dropping thousands on parts. Take time to spot bottlenecks, assess your data quality, and match potential AI solutions to your actual business goals.

Want to avoid the classic "expensive tech that solves nothing" trap? Keep reading.

Identifying system limitations and bottlenecks

- Run performance audits to catch CPU and memory bottlenecks that slow down daily operations. These tests reveal where your system gasps for air during peak usage times.

- Map data flow patterns to spot where information gets stuck or lost between different parts of your system. Think of this as finding the clogged arteries in your business's circulatory network.

- Check database query response times, which often cause the most painful slowdowns. Slow queries act like someone who takes too long to order at a busy restaurant.

- Document outdated programming languages and frameworks that lack support for modern AI tools. COBOL might be reliable, but it will not high-five your machine learning algorithms.

- Assess hardware limitations including storage capacity, processing power, and network infrastructure that might collapse under AI workloads.

- Analyze security vulnerabilities, and weigh the wider modernization picture with the "6 C's" framework (cost, compliance, complexity, connectivity, competitiveness, customer satisfaction) to find weaknesses.

- Test system scalability by simulating increased loads to see where breaking points occur. Your system might handle today's traffic but fail under tomorrow's demands.

- Track error logs and system crashes to identify recurring issues that signal deeper problems.

- Calculate downtime frequency and duration, which directly impact your bottom line and customer trust.

- Gather user feedback about system pain points from the staff who battle your legacy system daily. They know exactly where issues hide.

- Measure integration capabilities with external systems to spot connection gaps that block data flow.

- Evaluate backup and recovery processes to spot risks to business continuity during modernization.
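
The query-timing check from the list above can be sketched in a few lines. This is a minimal illustration, not a full audit tool: the in-memory SQLite database, the `orders` table, and the helper names `time_query` and `summarize` are all assumptions standing in for your real legacy database and driver.

```python
import sqlite3
import statistics
import time

def time_query(conn, sql, params=(), runs=20):
    """Run a query several times and return latency samples in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        conn.execute(sql, params).fetchall()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples

def summarize(samples):
    """Return the median and worst-case latency for a list of samples."""
    return {"median_ms": statistics.median(samples), "max_ms": max(samples)}

# In-memory stand-in for a legacy database (hypothetical schema and data).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer, total) VALUES (?, ?)",
    [(f"customer-{i % 50}", float(i)) for i in range(5000)],
)

report = summarize(
    time_query(conn, "SELECT * FROM orders WHERE customer = ?", ("customer-7",))
)
print(report)
```

Running the same measurement against your slowest known queries, at quiet and peak hours, gives you a baseline to compare against after any integration work.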

Assessing data quality and accessibility

- Conduct a comprehensive data audit to identify gaps, duplications, and outdated information across your systems. This step reveals where your data falls short before AI integration begins.

- Map your data sources to understand where critical information lives and how it flows between systems. Many legacy systems create accidental data silos that block AI from accessing the complete picture.

- Check data formats and standardization levels to spot inconsistencies that might confuse AI algorithms. Customer records may follow different formats from different eras of your business.

- Measure completeness of records by calculating the percentage of missing values in key fields. AI needs complete datasets to draw accurate conclusions.

- Analyze data accuracy through sampling and validation against known reliable sources. Inaccurate data leads to faulty AI outputs, no matter how advanced your algorithms are.

- Test data accessibility by timing how quickly information can be retrieved from various systems. Slow data access creates delays that hurt real-time AI applications.

- Evaluate data governance policies to confirm they support both data protection and proper sharing. Strong governance prevents data breaches and unnecessary access restrictions.

- Document informal knowledge about data quirks that might not appear in formal documentation. Long-time employees often know about data oddities that could mislead AI systems.

- Assess data integration capabilities between legacy systems and modern platforms. The connections between systems often determine how smoothly AI can operate across your organization.

- Prioritize data preparation projects based on business impact and technical feasibility. Organizations that focus on thorough data preparation achieve significantly higher success rates in AI implementation.
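
As a rough sketch of the completeness check described above, the snippet below calculates the percentage of filled-in values per field. The sample records, the field names, and the choice of which values count as "missing" are assumptions; your own systems will have their own placeholder conventions.

```python
def completeness(records, fields):
    """Return the percentage of non-missing values for each field."""
    # Assumed placeholders for "missing"; adjust to match your legacy data.
    missing_markers = (None, "", "N/A")
    result = {}
    for field in fields:
        filled = sum(1 for r in records if r.get(field) not in missing_markers)
        result[field] = round(100.0 * filled / len(records), 1) if records else 0.0
    return result

# Hypothetical customer records pulled from a legacy system.
records = [
    {"name": "Ada", "email": "ada@example.com", "phone": "555-0100"},
    {"name": "Bo", "email": "", "phone": "555-0101"},
    {"name": "Cy", "email": "cy@example.com", "phone": None},
    {"name": "Di", "email": "di@example.com", "phone": "N/A"},
]

scores = completeness(records, ["name", "email", "phone"])
print(scores)
```

Fields that score low here are good candidates for the cleanup work that comes before any AI model sees the data.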

Now that you have assessed your data landscape, the next step is to map AI opportunities to your business goals before preparing your data and infrastructure for integration.

Mapping AI opportunities to business goals

- Start with business problems, not AI solutions. Companies that define clear metrics for AI projects are 50% more likely to implement AI effectively.

- Create a priority matrix ranking potential AI projects by business impact and implementation difficulty. This visual tool helps leadership teams make smarter investment decisions.

- Identify specific KPIs for each AI initiative before writing a single line of code. Track metrics such as cost reduction, revenue growth, customer satisfaction scores, or operational efficiency gains.

- Match AI capabilities to your most pressing business challenges. For example, natural language processing can improve customer service, while computer vision might optimize quality control.

- Calculate potential ROI for each AI opportunity using realistic timeframes. Include both hard costs (software, infrastructure) and soft costs (training, change management).

- Align AI projects with your company's strategic roadmap. AI should amplify your core business strategy, not distract from it.

- Involve cross-functional teams in the mapping process. IT understands technical feasibility while operations know where the biggest pain points exist.

- Document use cases with clear "before and after" scenarios to help stakeholders visualize the impact of AI implementation.

- Develop a phased approach that starts with quick wins. Early successes build momentum and organizational buy-in for more complex projects.

- Establish clear success criteria for each AI initiative. Data shows organizations with defined metrics achieve higher success rates.

- Create feedback loops to refine AI applications based on business outcomes. Successful AI implementations evolve through continuous improvement.
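
The priority matrix mentioned above can start life as a very small script. In this sketch, the 1-to-5 scores, the threshold of 3, the quadrant labels, and the candidate projects are all illustrative assumptions; the point is only to show how impact and difficulty combine into a ranking.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    impact: int      # 1 (low) to 5 (high) business impact
    difficulty: int  # 1 (easy) to 5 (hard) to implement

def quadrant(candidate, threshold=3):
    """Place a candidate in one of four quadrants of the matrix."""
    high_impact = candidate.impact >= threshold
    easy = candidate.difficulty < threshold
    if high_impact and easy:
        return "quick win"
    if high_impact:
        return "major project"
    if easy:
        return "fill-in"
    return "reconsider"

def rank(candidates):
    """Sort by impact minus difficulty (highest first), then by name."""
    return sorted(candidates, key=lambda c: (-(c.impact - c.difficulty), c.name))

# Hypothetical scores agreed on by a cross-functional team.
candidates = [
    Candidate("customer service overhaul", impact=5, difficulty=5),
    Candidate("document processing", impact=4, difficulty=2),
    Candidate("inquiry classification", impact=3, difficulty=1),
    Candidate("report reformatting", impact=2, difficulty=2),
]

for c in rank(candidates):
    print(f"{c.name}: {quadrant(c)}")
```

The scoring formula is deliberately crude. What matters is that the whole team scores every project the same way, so the "quick wins" surface on their own.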

Preparing Your Data and Infrastructure for AI Integration

Your legacy data needs a good scrub before AI can work its magic. Think of it like defragging an old hard drive before installing a new operating system – you need clean, standardized data flowing through secure pipelines to make AI integration possible.

Cleaning and standardizing legacy data

- Remove duplicate records across your databases to prevent AI from learning from redundant information.

- Validate all data points against business rules to catch impossible values such as negative inventory or future birthdates.

- Fix inconsistent formatting such as phone numbers appearing as (555)123-4567 in one system and 555.123.4567 in another.

- Convert all dates to a single standard format to avoid confusion between MM/DD/YYYY and DD/MM/YYYY formats.

- Standardize text fields by normalizing case, removing extra spaces, and creating consistent abbreviations.

- Fill gaps in incomplete records with appropriate default values or flag them for human review.

- Merge fragmented customer profiles from multiple systems into unified records.

- Create a data dictionary that clearly defines each field's purpose, format, and acceptable values.

- Implement data quality scoring to track improvements and prioritize cleansing efforts.

- Establish automated data validation pipelines to maintain standards once initial cleaning is complete.

- Map legacy data fields to their modern equivalents to maintain historical context.

- Normalize measurement units across systems (miles vs. kilometers, pounds vs. kilograms).

- Tag sensitive data fields that require special handling under regulations like GDPR or CCPA.

- Document all data transformations performed to maintain an audit trail of changes.

- Test cleaned data with sample AI models to verify compatibility before full integration.
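
A few of the cleaning steps above (phone formats, date formats, text normalization, and duplicate removal) can be sketched as follows. The helper names are hypothetical, the phone logic assumes US-style 10-digit numbers, and the date helper assumes you already know which format each source system uses, since a value like 03/04/2021 cannot be disambiguated from the data alone.

```python
import re
from datetime import datetime

def normalize_phone(raw):
    """Reduce a US-style phone number to 10 digits, or None if it does not fit."""
    digits = re.sub(r"\D", "", raw or "")
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # drop a leading country code
    return digits if len(digits) == 10 else None

def normalize_date(raw, source_format):
    """Convert a date in a known source format to ISO 8601 (YYYY-MM-DD)."""
    return datetime.strptime(raw, source_format).date().isoformat()

def normalize_text(raw):
    """Collapse repeated whitespace and apply consistent title case."""
    return " ".join(raw.split()).title()

def dedupe(records, key):
    """Keep the first record seen for each value of the given key."""
    seen = set()
    unique = []
    for record in records:
        if record[key] not in seen:
            seen.add(record[key])
            unique.append(record)
    return unique

print(normalize_phone("(555)123-4567"), normalize_phone("555.123.4567"))
print(normalize_date("03/04/2021", "%m/%d/%Y"))  # system A stores MM/DD/YYYY
print(normalize_date("03/04/2021", "%d/%m/%Y"))  # system B stores DD/MM/YYYY
```

Values that come back as `None` are the ones to flag for human review rather than guess at.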

Building secure data pipelines and APIs

- Data pipelines transport information from legacy systems to AI applications through automated workflows that clean, transform, and load data.

- Modern APIs create secure communication channels between old and new systems, acting as universal translators for technologies that speak different languages.

- Implementing middleware solutions takes just 6-12 weeks in most cases, providing a quick win for businesses eager to upgrade their legacy applications.

- Data integrity checks must run continuously to catch corrupted information before it compromises your AI models.

- Role-based access controls limit who can view or modify sensitive data flowing through your pipelines.

- Encryption protocols protect data both at rest and in transit, making it unusable to unauthorized parties.

- API gateways serve as security checkpoints, validating every request before allowing access to your legacy systems.

- Compliance frameworks like GDPR, HIPAA, or PCI-DSS require specific security measures that must be built into your data pipeline architecture.

- Audit logging creates a permanent record of who accessed what data and when, which is essential for both security and regulatory requirements.

- Rate limiting prevents API abuse by restricting how many calls can be made within a specific timeframe.

- Secure API keys and authentication tokens provide a safer alternative to sharing actual login credentials between systems.

- Containerization isolates components of your data pipeline to limit damage if one section gets compromised.

- Automated testing tools scan your APIs for common security vulnerabilities before issues arise.

- Data masking techniques hide sensitive information while still allowing AI systems to process underlying patterns.

- Versioning controls help manage API changes without breaking connections to legacy systems that cannot be quickly updated.
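
To make the rate limiting item above concrete, here is a minimal token bucket sketch, one common way to cap how many calls reach a fragile legacy system. The class name, capacity, and refill rate are assumptions for illustration; in practice an API gateway would usually handle this for you.

```python
import time

class TokenBucket:
    """Allow bursts up to `capacity` calls, refilled at a steady rate."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock  # injectable so the limiter can be tested
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self):
        """Return True and spend a token if the call may proceed."""
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        # Refill based on elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False

# Hypothetical limit: bursts of 5 calls, refilled at 2 calls per second.
bucket = TokenBucket(capacity=5, refill_per_second=2.0)
results = [bucket.allow() for _ in range(7)]
print(results)
```

Rejected calls would typically get an HTTP 429 response so that well-behaved clients back off and retry later.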

With secure connections between your systems in place, the next step is making sure the data flowing through them stays compliant with regulations.

Ensuring compliance with regulations

- AI compliance monitoring tools scan your data handling practices to flag GDPR violations before they become problems.

- Smart documentation systems create audit trails that prove compliance during regulatory reviews without manual paperwork.

- Risk assessment algorithms identify potential compliance gaps by analyzing historical patterns across legacy systems.

- Automated policy enforcement tools apply regulatory rules consistently across all data touchpoints.

- Privacy-preserving AI techniques such as federated learning allow analysis without moving sensitive data from secure locations.

- Compliance dashboards offer real-time visibility into regulatory status across departments with color-coded warnings.

- AI applications analyze large datasets to spot compliance risks that human reviewers might miss.

- Cross-departmental collaboration tools connect compliance officers with IT teams through shared workspaces and alerts.

- Machine learning models adapt to new regulations by learning from regulatory updates without a complete system overhaul.

- Cost savings can emerge through streamlined compliance operations, with some companies reporting a 30% reduction in compliance-related expenses.

- Automated reporting systems generate compliance documentation on demand for various regulatory bodies.

- Data masking and anonymization tools secure sensitive information while still allowing AI systems to extract valuable insights.
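
One simple form of the data masking described above is keyed hashing: the same input always maps to the same token, so an AI system can still spot patterns per customer without seeing the raw value. The sketch below uses Python's standard `hmac` and `hashlib` modules; the field names and the hard-coded key are placeholders, and a real key belongs in a secrets manager. Keyed hashing is pseudonymization rather than anonymization, so treat the output as still sensitive.

```python
import hashlib
import hmac

def pseudonymize(value, secret_key):
    """Replace a value with a stable keyed hash (HMAC-SHA256, hex encoded)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def mask_record(record, sensitive_fields, secret_key):
    """Return a copy of the record with the sensitive fields pseudonymized."""
    return {
        key: pseudonymize(str(value), secret_key) if key in sensitive_fields else value
        for key, value in record.items()
    }

# Placeholder key for illustration only; load a real one from secure storage.
SECRET_KEY = b"replace-with-a-managed-secret"

patient = {"patient_id": "P-1001", "email": "ada@example.com", "visits": 4}
masked = mask_record(patient, {"patient_id", "email"}, SECRET_KEY)
print(masked)
```

Because the mapping is stable, records for the same patient still line up across systems after masking, which keeps joins and trend analysis possible.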

Strategies for Successful AI Integration

Integrating AI into legacy systems requires a strategic approach that balances technical requirements with business goals. Smart companies tackle this challenge by creating communication bridges between old and new systems, then gradually expanding their AI capabilities as value is proven.

Using middleware and APIs for seamless communication

Middleware and APIs act as the digital glue connecting dusty legacy systems to modern AI technologies. They serve as universal translators that allow your old COBOL program to communicate with a state-of-the-art machine learning model without either one suffering a breakdown.

The appeal lies in the speed of implementation, as most middleware solutions can go live within 6-12 weeks. This offers businesses quick wins without replacing the entire tech backbone.

Keller Williams has bridged their legacy databases with cutting-edge AI applications through strategic API deployment. Clients have found that IT teams save time by collaborating instead of forcing direct integration, which often leads to prolonged troubleshooting.

APIs handle the heavy lifting of data transfer, format conversion, and security protocols so that legacy systems continue performing their best while AI adds the magic touch. Proper documentation prevents future integration headaches.
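
To give a feel for the "format conversion" part, here is a minimal sketch of an adapter that turns a fixed-width record, the kind a COBOL program might write, into JSON that a modern AI service can read. The field layout, offsets, and sample record are invented for illustration; a real layout would come from the legacy system's record definition.

```python
import json

# Hypothetical layout: (field name, start offset, length).
LAYOUT = [
    ("customer_id", 0, 6),
    ("name", 6, 20),
    ("balance_cents", 26, 9),
]

def parse_record(line):
    """Slice a fixed-width line into named fields and fix up types."""
    record = {}
    for name, start, length in LAYOUT:
        record[name] = line[start:start + length].strip()
    # Numeric fields arrive zero-padded as text; convert for downstream use.
    record["balance_cents"] = int(record["balance_cents"])
    return record

def to_json(line):
    """Translate one legacy record into a JSON string."""
    return json.dumps(parse_record(line))

sample = "000042" + "Jane Doe".ljust(20) + "000012550"
print(to_json(sample))
```

The legacy program keeps writing records exactly as it always has. Only the adapter needs to know both formats, which is what makes middleware a low-risk starting point.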

Starting with low-risk, high-impact use cases

Smart AI adoption begins with selecting appropriate challenges. Companies that focus on strategic AI use cases are nearly three times more likely to exceed their ROI expectations.

I tell my clients at WorkflowGuide.com to think of it like leveling up in a video game. You do not fight the final boss first; you tackle smaller enemies to build experience points.

Start with a process that causes pain but will not crash your business if integration stumbles. For example, automate document processing before overhauling your entire customer service system.

This incremental approach demonstrates value quickly while managing risk. Pilot programs create wins that can be showcased to skeptical stakeholders.

Your first AI project should solve a specific problem with measurable outcomes. Consider areas that have clean data, clear success metrics, and processes that consume staff time without adding value.

Many local business owners begin with customer inquiry classification or inventory management. These projects often pay for themselves within months through reduced labor costs and improved accuracy.

Strategic planning for these initial use cases requires balancing technical feasibility against business impact. A modular integration approach minimizes disruption to existing workflows while teams build confidence with new technology. Employee engagement remains vital, as even the best AI solution fails if staff does not adopt it.

Leveraging edge computing for faster processing

Edge computing brings AI power directly to legacy systems without long data transfers. Instead of sending data all the way to cloud servers, edge computing processes information locally.

This proximity slashes response times and creates real-time analytics capabilities that older systems never imagined. Financial institutions have reported up to a 20% boost in operational efficiency after implementation.

Legacy systems gain enhanced performance through this decentralized approach. Data is processed like local traffic instead of being stuck in long commutes, reducing bottlenecks.

IoT devices can make smart decisions immediately rather than waiting for instructions from distant servers. For high-speed financial transactions or time-sensitive operations, latency reduction is essential.

The computational efficiency achieved through edge computing transforms outdated systems into responsive, resilient powerhouses without a full system overhaul.

Overcoming Organizational Challenges

Tackling organizational hurdles demands more than advanced technology—it requires people to support the AI vision. Teams might resist change like a cat avoiding water, but clear communication and practical training can turn skeptics into champions.

Discover the secret for getting an entire company excited about AI integration as you read on.

Aligning leadership and stakeholder goals

Getting everyone on the same page can be as challenging as herding digital cats, but it is crucial for AI integration success. When leadership teams and stakeholders do not align, the risk of digital transformation failure increases significantly.

I have seen promising AI projects struggle because the C-suite focused on cost savings while department heads needed workflow improvements. The solution is to create a shared vision that connects AI initiatives directly to strategic objectives.

This means translating technical capabilities into business outcomes that resonate with each stakeholder group. Regular touchpoints and feedback loops bridge the gap between technical teams and business leaders, ensuring everyone understands their role in driving success.

Tailoring presentations to different groups—such as providing ROI figures for the CFO and operational benefits for department heads—can improve collaborative decision-making.

Training employees to adopt AI technologies

Many advanced AI rollouts have failed because companies overlooked one detail: actual humans need to use these systems. New AI tools are ineffective if the team treats them with indifference.

Workforce development goes beyond deploying technology. Demonstrating the benefits of AI through practical training cuts resistance significantly. A client at a local HVAC company saw technicians free up two hours a day for service calls after AI-powered scheduling was introduced.

This practical approach transformed staff engagement, with employees eager for access to the new tools.

Change management is the difference between successful adoption and an expensive digital paperweight. Training programs that focus on hands-on practice rather than lectures help teams integrate AI more effectively. Creating internal "AI Champions" to support colleagues can boost adoption dramatically.

Fostering collaboration between IT and business teams

True transformation happens when IT and business teams collaborate. Technical teams may speak in code while business staff focus on results, creating a communication gap. Establishing cross-functional teams where developers, data scientists, and IT specialists work alongside marketing, operations, and sales personnel can overcome this divide.

Regular sync sessions and shared dashboards that track both technical and business metrics help align efforts. For instance, having IT shadow business operations for a day can foster mutual understanding.

Establishing an AI Governance Framework

Your AI systems need clear rules, much like kids need boundaries. A solid governance framework establishes guidelines for managing AI technologies across your organization.

This framework must include clear ethical guidelines, data security protocols, and accountability mechanisms. Many companies face regulatory headaches when AI systems operate without oversight, leading to problematic decisions or data breaches.

Think of governance as an insurance policy against AI mishaps. It should address risk assessment, compliance standards, bias mitigation, and continuous monitoring. Teams need to understand which regulations apply to their AI systems and how to maintain compliance as laws change.

Organizations that establish strong governance frameworks can innovate while protecting their business from potential pitfalls.

Scaling AI Systems for Long-term Success

Scaling AI systems requires more than installation; it needs a growth strategy that evolves with your business needs. Legacy systems can transform into AI powerhouses when continuous monitoring and feedback loops prompt improvements.

Tracking KPIs and measuring ROI

Numbers may hide insights if not examined correctly. For AI integration with legacy systems, tracking performance metrics distinguishes smart investments from wasted expenditures.

Business leaders should monitor two types of KPIs: adoption metrics that show how teams use the AI tools, and business value indicators that reveal financial impact. I have seen companies install advanced AI systems that were underutilized because no one tracked their usage.

Measuring ROI requires collaboration between tech personnel and business stakeholders. Setting up dashboards that monitor user engagement alongside financial outcomes and reviewing them monthly can provide clarity.

Some clients start with simple metrics like time saved per task or improved lead quality. One HVAC company tracked a 38% decrease in cost per lead after implementing AI-powered analytics.

Linking metrics directly to business goals ensures that the impact of AI is both measurable and meaningful.
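
The arithmetic behind these metrics is simple enough to sketch. The functions below compute a percentage change in a KPI and a basic ROI figure. All input numbers are hypothetical; the cost-per-lead values were chosen only so that the result matches a 38% decrease like the one mentioned above.

```python
def percent_change(before, after):
    """Percentage change from a baseline value (negative means a decrease)."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) * 100.0 / before

def roi_percent(total_benefit, total_cost):
    """Return on investment as a percentage of total cost."""
    if total_cost == 0:
        raise ValueError("cost must be non-zero")
    return (total_benefit - total_cost) * 100.0 / total_cost

# Hypothetical figures: cost per lead before and after an AI rollout.
cost_per_lead_change = percent_change(before=100.0, after=62.0)

# Hypothetical figures: first-year benefit versus total cost, including
# soft costs such as training and change management.
first_year_roi = roi_percent(total_benefit=150_000, total_cost=100_000)

print(f"Cost per lead: {cost_per_lead_change:+.1f}%")
print(f"First-year ROI: {first_year_roi:.1f}%")
```

Agreeing on these formulas and their inputs before launch avoids arguments later about whether the project "worked".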

Automating deployment and monitoring systems

Manually updating many AI models is not practical. MLOps practices transform this challenge into a smooth process where pipelines automatically test, deploy, and monitor AI systems.

Automated monitoring identifies performance issues before customers notice, flagging patterns that suggest model drift. For a local HVAC business, automated monitoring of AI systems led to a 15% yearly revenue increase.

Continuous integration ensures that systems remain current without disrupting operations. This approach allows teams to focus on strategic improvements rather than repetitive deployment tasks.

Setting up model drift detection and automated alerts works like a check engine light for your AI, preventing small issues from becoming costly problems.
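
As a rough illustration of that check engine light, the sketch below flags drift when the recent average of a monitored value (for example, a model's mean prediction score) moves too far from a baseline. This is a deliberately simple mean-shift check, not a full MLOps drift detector. The function name, the threshold of 3.0, and the sample values are assumptions made for the example.

```python
from statistics import mean, stdev


def drift_alert(baseline, recent, z_threshold=3.0):
    """Return True when the recent mean is more than z_threshold
    standard errors away from the baseline mean."""
    if len(baseline) < 2 or not recent:
        raise ValueError("need at least 2 baseline values and 1 recent value")

    base_mean = mean(baseline)
    base_sd = stdev(baseline)

    # A perfectly flat baseline: any change at all counts as drift.
    if base_sd == 0:
        return mean(recent) != base_mean

    std_error = base_sd / len(recent) ** 0.5
    z_score = abs(mean(recent) - base_mean) / std_error
    return z_score > z_threshold


# Illustrative values only
baseline_scores = [0.50, 0.52, 0.48, 0.51, 0.49, 0.50, 0.53, 0.47]
stable_week = [0.51, 0.49, 0.50, 0.52]
shifted_week = [0.60, 0.62, 0.58, 0.61]

if drift_alert(baseline_scores, shifted_week):
    print("ALERT: possible model drift, review before customers notice")
```

In a real pipeline the `print` call would be replaced by whatever alerting channel the team already uses, and the check would run on a schedule against fresh data.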

Ensuring continuous updates and improvements

AI systems require regular tune-ups, much like a high-performance gaming PC. The most successful integrations follow a schedule of testing, learning, and optimizing performance.

At LocalNerds.co, teams track key performance metrics weekly to spot bottlenecks before they worsen. Regular assessments act as early warnings that surface problems before they cause serious damage.

Some businesses mistakenly treat AI integration as a one-off project rather than an ongoing process. Automated monitoring tools and continuous updates ensure the system remains efficient and effective over time.

One manufacturing client saved $340,000 in downtime costs by implementing predictive maintenance alerts. Teams trained in handling AI updates prevent disruptions and maintain system reliability.

Custom APIs and middleware solutions keep systems in sync, preventing communication gaps between legacy applications and modern AI tools.
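
A middleware layer of this kind often comes down to translating a legacy record format into something a modern AI service can read. The sketch below assumes a hypothetical fixed-width export with three fields. The layout, field names, and sample record are invented for illustration and would need to match your actual legacy system.

```python
import json

# Hypothetical fixed-width layout: (field name, start index, end index)
LEGACY_LAYOUT = [
    ("customer_id", 0, 8),
    ("name", 8, 28),
    ("balance_cents", 28, 38),
]
RECORD_LENGTH = 38


def legacy_to_json(record: str) -> str:
    """Convert one fixed-width legacy record into a JSON string."""
    if len(record) < RECORD_LENGTH:
        raise ValueError(f"record shorter than {RECORD_LENGTH} characters")

    # Slice each field out of the record and trim the padding.
    fields = {
        name: record[start:end].strip()
        for name, start, end in LEGACY_LAYOUT
    }

    # Legacy systems often store money as zero-padded integer cents.
    fields["balance"] = int(fields.pop("balance_cents")) / 100
    return json.dumps(fields)


# Illustrative record only
sample = "00012345" + "Jane Doe".ljust(20) + "0000012550"
print(legacy_to_json(sample))
```

Keeping the layout in one table like `LEGACY_LAYOUT` means a change on the legacy side requires editing a single definition rather than hunting through parsing code.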

Real-world Examples of Legacy System Integration

Major banks have boosted fraud detection by 70% with AI-powered monitoring systems, and manufacturing giants have reduced downtime by integrating predictive maintenance AI with decades-old equipment tracking systems. These real-world wins show the potential of smart legacy system integration.

Case study: AI improving operational efficiency

A manufacturing company reduced production delays by 47% after integrating AI analysis tools with its 15-year-old equipment monitoring system. The legacy machines generated vast amounts of data, but the monitoring system could not spot patterns in it.

Sensors were installed to feed information to an AI system through custom APIs, creating an early warning system for maintenance needs. This upgrade prevented costly breakdowns and kept production running smoothly.

The coolest part? They did not need to replace the entire system, just add a layer of AI intelligence on top. Their ROI turned positive within six months, proving that even older systems can learn new tricks.

Another win comes from a regional bank struggling with loan processing bottlenecks. A paper-heavy workflow took 12 days on average to approve applications. By adding AI document processing to their existing banking software, processing time dropped to just 3 days.

The AI handled tedious tasks like data extraction and verification while human staff focused on complex decisions. Customer satisfaction scores jumped by 28%, and loan officers processed 40% more applications without extra work.

Success story: Transforming customer experience with AI

A regional healthcare provider faced a crisis with patient scheduling. Their legacy phone system created two-hour wait times and a 40% call abandonment rate. An AI agent was built to connect to their old appointment system through custom APIs.

Wait times dropped to under five minutes, and patient satisfaction scores jumped by 63%. The AI did not just handle calls; it understood patient needs, accessed medical histories, and scheduled appointments faster than human staff.

The best part? The same backend systems remained, only enhanced with modern AI that patients enjoyed interacting with.

A major retail chain also improved its inventory management by adding an AI layer that predicted stock needs and automated reordering. This change reduced stockouts by 78% and boosted quarterly sales by $3.2M. The AI worked alongside their legacy systems instead of replacing them.

A CTO commented, "It's like we gave our old car a rocket engine without changing the chassis."

Conclusion

Integrating AI with legacy systems is not just tech jargon; it is a business lifeline in today's digital landscape. This guide explored how this fusion tackles system bottlenecks, breaks down data silos, and creates competitive advantages through smart implementation strategies.

The process begins with an honest assessment, continues with clean data pipelines, and succeeds through strategic pilot projects that prove value before scaling. It is important to remember that people, not just systems, require attention during this transformation.

Organizations that combine technical expertise with change management skills establish governance frameworks that balance innovation with security. AI integration is an ongoing evolution that calls for patience and persistence. Begin with small steps, measure impacts, and build momentum as your legacy systems gain new life through Artificial Intelligence capabilities.

FAQs

1. What is legacy system integration with AI technologies?

Legacy system integration with AI technologies combines old computer systems with new artificial intelligence tools. Think of it like teaching an old dog new tricks. Your existing systems gain fresh abilities without the pain of starting over.

2. Why should companies care about adding AI to their legacy systems?

Companies save big money by keeping their old systems while adding AI power. It's like putting a turbo engine in a classic car. You get modern speed without buying a whole new vehicle.

3. What challenges might we face when adding AI to legacy systems?

Old systems often speak different languages than new AI tools. Data quality can be poor, like trying to build a fancy cake with stale ingredients. Staff might also resist change, clinging to familiar ways while the tech landscape shifts around them.

4. How long does it typically take to integrate AI with legacy systems?

Integration timelines vary widely based on system complexity and your goals. Simple projects might take weeks, while complex overhauls can stretch to months. The key is starting with small wins rather than attempting to transform everything overnight.

About WorkflowGuide.com: WorkflowGuide.com is a specialized consulting firm that transforms "AI-curious" organizations into leaders of Modernization. The firm delivers hands-on implementation guidance and practical frameworks to align AI adoption with core business objectives, focusing on Business Process Automation, System Integration, and Digital Transformation.

Disclosure: The content is provided for informational purposes only. It does not constitute professional advice. WorkflowGuide.com adheres to strict standards of transparency and data usage.
