Cross-Functional AI Use Case Evaluation Methods

Understanding AI Integration

AI use case evaluation involves finding and ranking potential AI projects across different company departments. Many businesses struggle with this process.

Cross-functional evaluation helps companies pick AI projects that solve real business problems rather than just chasing shiny new tech.

Think of AI use case evaluation like assembling a puzzle. You need pieces from every department to see the full picture. The IDEAL Framework (Identify Use Case, Determine Data, Establish Model, Architect Infrastructure, Launch Experience) gives teams a roadmap for this process.

Other helpful tools include the BXT Framework and Impact-Feasibility Matrix, which help balance what is possible with what is valuable.

Companies that align AI initiatives across departments see up to 1.5x faster revenue growth. Real-world success stories back this up. Societe Generale prioritized over 100 AI use cases based on strategic fit.

Hitachi boosted warehouse productivity by 8% through cross-team evaluation. ASOS lifted email click rates by 75% with AI-driven personalization.

As founder of WorkflowGuide.com, I have directly observed how proper AI evaluation transforms businesses. My team has built over 750 workflows and generated $200M for partners through problem-first automation.

The key? We don't substitute tools for strategy. We focus on boosting meaningful human work through smart AI integration.

This article will show you practical methods to evaluate AI use cases across your organization. We cover frameworks for discovery, prioritization strategies, and ways to measure ROI.

Ready for some AI evaluation magic?

Key Takeaways

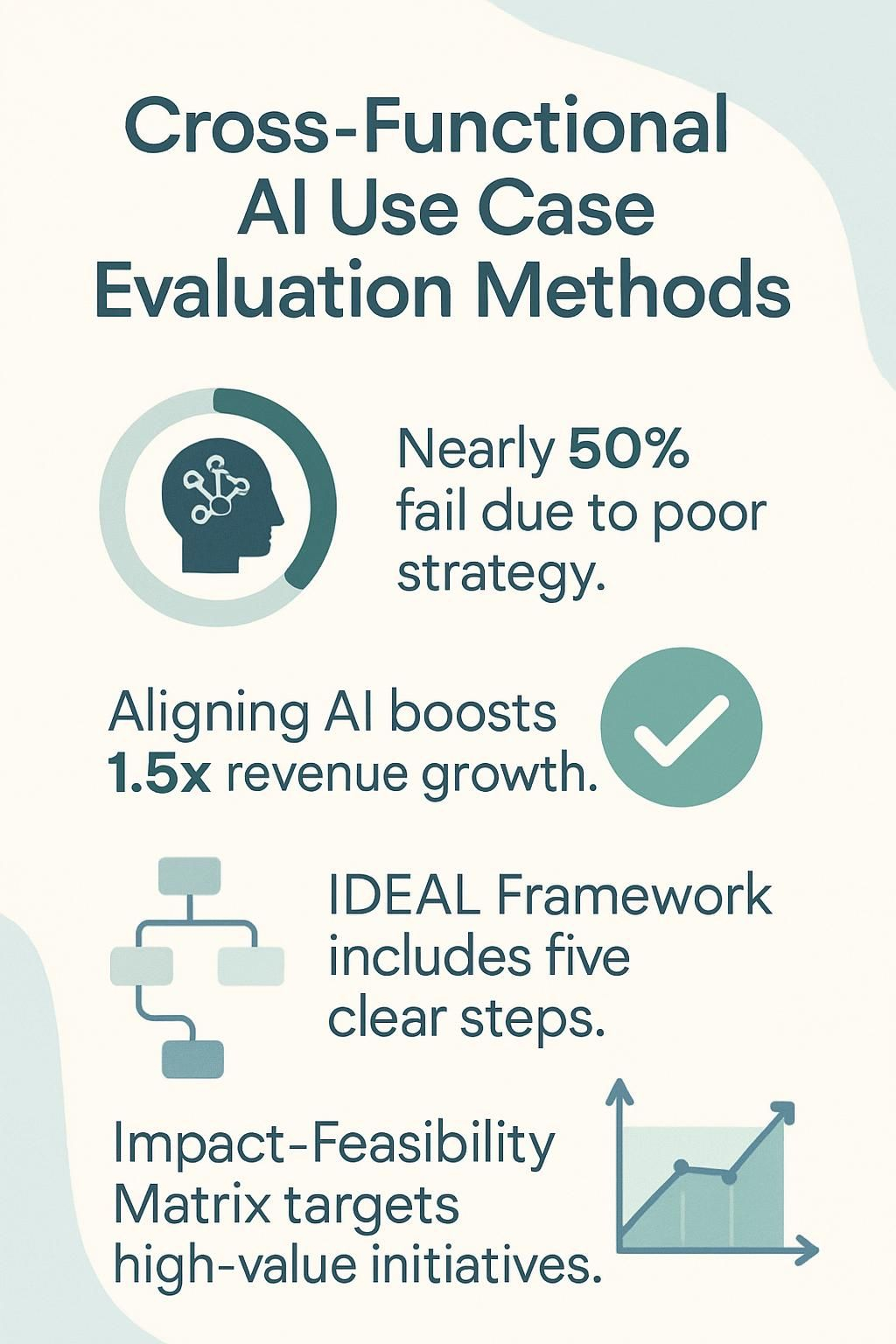

- Nearly half of companies struggle with AI projects due to poor strategy, wasting thousands of dollars on initiatives that deliver no value.

- Successful AI evaluation requires input from multiple departments to match business needs rather than pursuing trendy technology solutions.

- Companies that align AI with specific business goals see 1.5x faster revenue growth and 1.6x higher returns for shareholders.

- The IDEAL Framework helps teams identify use cases through five steps: Identify Use Case, Determine Data requirements, Establish Model needs, Architect Infrastructure, and Launch Experience.

- Smart prioritization uses tools like the Impact-Feasibility Matrix to focus on high-value, low-effort AI initiatives first while balancing short-term wins with long-term strategy.

Understanding the Importance of Cross-Functional AI Use Case Evaluation

Cross-functional AI evaluation separates game-changing initiatives from expensive tech toys gathering dust. Smart leaders recognize that AI projects need input from multiple departments to match real business needs, not just what looks cool in a demo.

Aligning AI Initiatives with Business Goals

AI projects fail when they lack clear business goals. I have seen it countless times: companies rush to adopt AI because it is trendy, not because it solves actual problems. This aimless approach wastes resources and creates fancy tech solutions nobody asked for.

Think of it like buying a Ferrari when what you really need is a pickup truck to haul lumber. Companies that align AI with specific business objectives see 1.5x faster revenue growth and 1.6x higher returns for shareholders.

"The most successful AI isn't about having the fanciest algorithms; it's about solving the right business problems with the right tools." - Reuben Smith, WorkflowGuide.com

Start with your business challenges first, then find AI solutions that fit. Ask yourself: "What specific pain points can AI fix in my operation?" Maybe it is cutting customer service response times or spotting inventory issues before they happen.

Set clear KPIs to track if your AI actually delivers results. The magic happens when your AI tools directly support what matters most to your bottom line. We explore how cross-functional teams can boost this alignment through diverse viewpoints and better collaboration.

Addressing Organizational Pain Points

Pain points lurk in every business like bugs in legacy code. They drain resources, frustrate teams, and block growth. Smart AI adoption starts with spotting these pain points where automation can make the biggest splash.

I have seen companies waste months on flashy AI projects while ignoring the paper cuts bleeding their productivity dry. The trick? Map your organization's daily headaches before jumping into solutions.

Your customer service team might spend hours on repetitive queries that a simple AI assistant could handle, freeing humans for complex problems that actually need their brainpower.

Business leaders must dig beyond surface complaints to find root causes. A manufacturing client once complained about quality control issues, but our assessment revealed the real problem was data silos preventing early defect detection.

We built an AI system that connected these isolated data points and cut defects by 23%. Cross-functional teams provide crucial perspectives here, as pain points often cross department boundaries.

The most successful AI implementations I've guided started with stakeholder interviews across all levels, not just executive wishful thinking. This approach aligns automation with strategic goals while solving real problems that impact your bottom line.

Identifying Potential AI Use Cases

Finding AI opportunities across your company doesn't have to feel like searching for a needle in a digital haystack. Smart businesses spot AI use cases by looking at repetitive tasks that eat up valuable time or complex problems where humans get bogged down in data overload.

Frameworks for Use Case Discovery

Finding the right AI use cases can feel like searching for a specific LEGO piece in a mountain of bricks. These frameworks act as your sorting trays, helping you organize possibilities and spot the perfect pieces for your business puzzle.

- IDEAL Framework breaks discovery into five manageable steps: Identify Use Case, Determine Data requirements, Establish Model needs, Architect Infrastructure, and Launch Experience. This step-by-step approach prevents you from getting lost in technical details too early.

- BXT Framework evaluates potential use cases through three critical lenses: business viability (can it make money?), user experience (will people actually use it?), and technological feasibility (can we build it?). This balanced view stops you from chasing cool tech that lacks practical application.

- Horizon-Based Framework sorts opportunities into three buckets: Horizon 1 for quick wins within 3-6 months, Horizon 2 for projects that enhance market position in 6-18 months, and Horizon 3 for transformative business model changes over 18+ months. This helps create a balanced portfolio of AI initiatives.

- Problem-First Discovery focuses on identifying organizational pain points before considering AI solutions. Start with what hurts rather than with what is shiny and new in the tech world.

- Brainstorming Techniques use open-ended questions, mind maps, and role-playing sessions to unlock use cases that structured frameworks might miss. The goal is quantity of ideas first; quality filtering comes afterward.

- Business Envisioning workshops bring cross-functional teams together to articulate problems, objectives, and success metrics. This shared vision reduces implementation friction later.

- Value Stream Mapping identifies bottlenecks in current processes where AI could create the most impact. This visual approach helps spot inefficiencies that might be invisible in daily operations.

- Data Inventory Assessment catalogs existing data assets to spot low-hanging fruit where you already have the information needed for AI applications. No need to build new data pipelines if you've got gold sitting in your databases.

- Competitive Intelligence Review examines how similar companies deploy AI to gain insights without reinventing the wheel. Learn from others' successes and failures in your industry.

- Stakeholder Journey Mapping traces the experience of customers, employees, or partners to find friction points where AI could smooth interactions. This human-centered approach keeps solutions focused on real needs.

Frameworks Summary:

- IDEAL and BXT Frameworks offer structured evaluations.

- Horizon-Based and Problem-First Discovery ensure balanced opportunity assessment.

- Brainstorming and Journey Mapping emphasize user-focused problem solving.
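
The Horizon-Based Framework above can be sketched as a simple bucketing step. This is a minimal illustration, not a prescribed method: the function name, the example use cases, and the exact boundary handling (6 months counts as Horizon 1, 18 months as Horizon 2) are assumptions made for the example.

```python
from collections import defaultdict


def horizon_for(months_to_value: float) -> int:
    """Map an estimated time-to-value (in months) to a horizon bucket.

    Boundary handling is an assumption: up to 6 months is Horizon 1,
    over 6 and up to 18 months is Horizon 2, anything longer is Horizon 3.
    """
    if months_to_value <= 6:
        return 1
    if months_to_value <= 18:
        return 2
    return 3


# Hypothetical use cases with rough time-to-value estimates in months.
candidates = {
    "Support ticket triage": 4,
    "Demand forecasting": 12,
    "Usage-based pricing model": 24,
}

# Group the use cases by horizon so the portfolio balance is visible.
portfolio = defaultdict(list)
for name, months in candidates.items():
    portfolio[horizon_for(months)].append(name)

for horizon in sorted(portfolio):
    print(f"Horizon {horizon}: {', '.join(portfolio[horizon])}")
```

A portfolio with nothing in Horizon 1 has no quick wins to build momentum, and one with nothing in Horizon 3 has no long-term bets, so an empty bucket is worth a second look.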

Key Challenges in Identifying Use Cases

- Knowledge gaps between technical and business teams create communication breakdowns. Data scientists speak in algorithms while marketing teams talk ROI, leading to misaligned expectations and wasted resources.

- The AI hype cycle generates unrealistic expectations about what AI can actually deliver. Business owners chase shiny objects rather than solving real problems, resulting in fancy tech demos with little business impact.

- Lack of clear success metrics makes it impossible to evaluate potential use cases. Without defined KPIs, companies chase vague goals like "improved customer experience" without ways to measure actual progress.

- Data quality and availability issues often surface too late in the process. Many promising AI projects crash when teams discover their data is messy, incomplete, or stuck in legacy systems.

- Cultural resistance to innovation blocks adoption before projects even start. Employees fear automation will replace their jobs, creating an uphill battle for change management.

- Difficulty scaling beyond pilot projects leaves many AI initiatives stranded in "proof-of-concept purgatory." What works in a controlled test often fails in real-world conditions.

- Transparency and explainability challenges create adoption barriers. Business users will not use AI systems they do not understand, especially for critical decisions.

- Resource constraints force tough choices between competing priorities. Most organizations lack enough data scientists, engineers, and budget to pursue all potential use cases.

- Regulatory and compliance concerns create extra hurdles for AI deployment. Privacy laws, industry regulations, and ethical guidelines vary across sectors and regions.

- Integration with existing systems proves more complex than anticipated. Legacy technology stacks were not built for AI, creating technical debt that slows implementation.

Summary of Key Challenges:

- Communication gaps between technical and business teams.

- Unrealistic expectations fueled by AI hype.

- Unclear success metrics and data issues.

- Cultural resistance and limited resources.

Best Practices for Use Case Discovery

Moving beyond the hurdles of AI use case identification, smart companies follow proven discovery methods that yield results. The most effective approach starts with cross-departmental workshops where front-line staff can highlight actual pain points.

These sessions often reveal gold mines of opportunity that executives might miss. Map your processes visually before these meetings to spot bottlenecks where AI could help. Many tech leaders jump straight to solutions without this crucial step, which is like building a house without blueprints.

Data readiness checks must happen early in your discovery process. I have seen too many projects fail because someone assumed data was clean and accessible. Focus your search on tasks that are both repetitive and high-value, as these typically offer the best ROI.

For example, a manufacturing client found that automating quality control image processing saved 15 hours weekly while improving accuracy by 23%. Document each potential use case with clear business goals, required data sources, and expected outcomes.

This documentation helps teams compare options objectively rather than chasing the shiniest new tech toy.

Best Practices Summary:

- Conduct cross-department workshops.

- Perform early data readiness assessments.

- Document use case details and expected outcomes clearly.
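
One way to make use case documentation comparable is a shared record with the same fields for every proposal. The sketch below is only an illustration: the class name, field names, and the example entry are hypothetical, chosen to mirror the items listed above (business goal, data sources, expected outcome, and an early data readiness check).

```python
from dataclasses import dataclass, field


@dataclass
class UseCaseRecord:
    """One documented AI use case; all field names are illustrative."""
    name: str
    business_goal: str = ""
    data_sources: list[str] = field(default_factory=list)
    expected_outcome: str = ""
    data_readiness_checked: bool = False

    def missing_fields(self) -> list[str]:
        """Return the names of fields that still need to be filled in."""
        missing = []
        if not self.business_goal.strip():
            missing.append("business_goal")
        if not self.data_sources:
            missing.append("data_sources")
        if not self.expected_outcome.strip():
            missing.append("expected_outcome")
        if not self.data_readiness_checked:
            missing.append("data_readiness_checked")
        return missing


# A hypothetical draft that still lacks an outcome and a data check.
draft = UseCaseRecord(
    name="Quality control image review",
    business_goal="Reduce manual inspection time",
    data_sources=["inspection camera images"],
)
print(draft.missing_fields())  # ['expected_outcome', 'data_readiness_checked']
```

Holding a proposal back until `missing_fields()` returns an empty list is one simple way to keep incomplete ideas out of the comparison stage.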

AI Use Case Discovery and Prioritization Strategies

Finding the right AI projects to tackle first can feel like trying to pick the best character in a video game without knowing the level ahead. Many companies stumble here because they chase attractive tech instead of solving real problems.

Smart discovery starts with the IDEAL Framework: Identify the use case, determine your data needs, establish your model requirements, architect the infrastructure, and launch the user experience.

This systematic approach prevents the classic "solution looking for a problem" trap that drains budgets faster than a leaky bucket. Tech clients using this method typically spot 30% more viable use cases than those who rely on random brainstorming.

Prioritization requires brutal honesty about what matters most to your business. The Impact-Feasibility Matrix acts like a cheat code, helping focus on high-value, low-effort initiatives first.

Plot your potential AI projects on this grid, then start with the winners in the upper-right quadrant (high impact, high feasibility). Risk-Reward Analysis forces you to weigh benefits against potential pitfalls.

One manufacturing client used this approach to rank 15 possible AI applications and discovered their "obvious first choice" actually had hidden data quality issues that would have doomed the project.

The BXT Framework offers another angle by examining business viability, user experience, and technical feasibility together. This triple-check prevents scenarios where a technically perfect solution fails because it does not meet user needs or generate revenue.

Prioritization Strategies Summary:

- Utilize frameworks like IDEAL and BXT for structured evaluation.

- Prioritize projects based on strategic impact and feasibility.

- Consider risk-reward to guide decision-making.
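
The Impact-Feasibility Matrix described in this section can be approximated with a small scoring script. This is a sketch under stated assumptions: the 1-to-5 scales, the threshold of 3, the quadrant labels, the tie-breaking rule, and the example projects are all hypothetical and would normally be agreed by the cross-functional team.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    impact: int       # 1 (low) to 5 (high), an assumed scale
    feasibility: int  # 1 (hard) to 5 (easy), an assumed scale


QUADRANT_ORDER = ["do first", "plan carefully", "fill-in", "deprioritize"]


def quadrant(c: Candidate, threshold: int = 3) -> str:
    """Place a candidate in one of the four quadrants of the matrix."""
    high_impact = c.impact >= threshold
    high_feasibility = c.feasibility >= threshold
    if high_impact and high_feasibility:
        return "do first"        # upper-right: high impact, high feasibility
    if high_impact:
        return "plan carefully"  # valuable but hard to deliver
    if high_feasibility:
        return "fill-in"         # easy but low value
    return "deprioritize"


def prioritize(candidates: list[Candidate]) -> list[Candidate]:
    """Sort by quadrant, then by impact x feasibility, then by name."""
    return sorted(
        candidates,
        key=lambda c: (
            QUADRANT_ORDER.index(quadrant(c)),
            -(c.impact * c.feasibility),
            c.name,
        ),
    )


# Hypothetical backlog scored in a workshop.
backlog = [
    Candidate("Churn prediction", impact=5, feasibility=2),
    Candidate("Support chatbot", impact=4, feasibility=4),
    Candidate("Autonomous pricing engine", impact=2, feasibility=1),
    Candidate("Invoice data extraction", impact=4, feasibility=5),
    Candidate("Meeting notes summarizer", impact=2, feasibility=4),
]

ranked = prioritize(backlog)
for c in ranked:
    print(f"{quadrant(c):15} {c.name}")
```

With these example scores, the two "do first" projects come out on top, and the high-impact but hard-to-build churn model lands in "plan carefully" rather than being dropped.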

Prioritizing AI Use Cases for Maximum Impact

Smart AI prioritization focuses on business impact, technical feasibility, and strategic alignment, like choosing the perfect character build in an RPG where every skill point matters. Many companies chase every new tool instead of homing in on what truly moves the needle.

Evaluating Business Value and ROI

Money talks, especially when pitching AI projects to finance teams. We measure AI's business value with clear metrics that turn tech jargon into tangible results. The traditional ROI formula [(Net Profit / Investment Cost) x 100] offers a baseline, yet smart leaders look deeper.

Emerj's Trinity Model splits ROI into three buckets: measurable financial returns, non-financial benefits that can be tracked, and strategic advantages that set up future growth.

Avoid vanity metrics that look nice in presentations but mean little for your bottom line. Focus on what matters: cost savings, revenue growth, customer satisfaction scores (like NPS), employee productivity gains, and error reduction rates.

Real-world wins tell the story best. Hitachi boosted warehouse productivity by 8% through targeted AI implementation, proving that well-evaluated use cases deliver results that count.

Your investment analysis should balance immediate financial returns with long-term strategic value to build a compelling business case even for the most skeptical CFO.

Business Value and ROI Summary:

- Track cost savings and revenue growth.

- Measure non-financial benefits like customer satisfaction.

- Balance short-term wins with long-term strategy.
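
The traditional ROI formula mentioned above, (Net Profit / Investment Cost) x 100, is easy to turn into a worked example. The figures below are hypothetical, and treating net profit as measured benefits minus investment is an assumption made for this illustration.

```python
def roi_percent(net_profit: float, investment_cost: float) -> float:
    """Traditional ROI: (net profit / investment cost) x 100."""
    if investment_cost <= 0:
        raise ValueError("investment_cost must be positive")
    return net_profit / investment_cost * 100


# Hypothetical pilot: 120,000 in measured benefits on an 80,000 investment.
benefits = 120_000
investment = 80_000
net_profit = benefits - investment  # assumption: net profit = benefits - cost

print(f"ROI: {roi_percent(net_profit, investment):.1f}%")  # ROI: 50.0%
```

This covers only the measurable financial bucket. Non-financial and strategic benefits need their own tracked metrics, since they do not reduce cleanly to a single percentage.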

Considering Technical Feasibility

While ROI drives business decisions, technical feasibility determines if an AI project can work in the real world. Technical feasibility acts as a reality check before committing resources to an AI initiative that might fail.

Data readiness forms the backbone of any AI project. You must assess if your data has enough quality and quantity to build effective models. Services like DataRobot offer tools for geospatial feature engineering and bias analysis to evaluate your data landscape.

Examine your computational resources as some AI models consume significant processing power. Model transparency matters as well; tools like SHAP help stakeholders understand how AI makes decisions.

I have seen many projects fail when organizations overlooked internal skill gaps. Without proper expertise to implement and maintain AI, even the best strategies can collapse.

A thorough technical feasibility study prevents the classic "eyes bigger than stomach" problem in AI adoption.

Technical Feasibility Summary:

- Ensure data quality and resource availability.

- Assess computational needs and model transparency.

- Review internal skill gaps to support implementation.

Balancing Short-Term Gains with Long-Term Strategy

AI projects need both quick wins and long-term vision, much like chess, where you plan several moves ahead while still making smart immediate choices. Many tech leaders fall for the allure of every new AI tool without a proper roadmap.

Starting with small pilot projects that deliver fast value builds credibility and momentum. A retail client tested an inventory prediction model that saved $50K in three months, later expanding into a full-scale supply chain overhaul.

This approach builds momentum while keeping the long view in focus. Your AI strategy should remain flexible, adapting as you learn what works best. Focusing on a few high-priority opportunities prevents spreading resources too thin.

The most successful implementations follow this pattern: test assumptions with small pilots, measure results deliberately, then scale proven solutions.

Strategy Balancing Summary:

- Start with pilot projects for quick, measurable wins.

- Maintain alignment with long-term business goals.

- Scale successful projects gradually and efficiently.

Cross-Functional Collaboration in AI Use Case Evaluation

Cross-functional teams bring different viewpoints to AI evaluation, creating stronger solutions than any department could develop alone.

Engaging Stakeholders Across Departments

- Hold regular cross-department workshops where IT professionals explain technical concepts while business units share their challenges. These sessions build a shared vocabulary and prevent misunderstandings during development.

- Create a stakeholder map identifying who should be involved from each department. This visual tool helps spot gaps in representation early.

- Assign department champions who act as liaisons between technical teams and business units. These champions need not be AI experts but must be respected voices that build support.

- Set up a straightforward feedback system that is easy for everyone to use. Valuable insights may be missed if the feedback process is overly complicated.

- Document all use case requirements in common language understood by both technical and business teams. Avoid technical jargon that might hinder clarity.

- Schedule regular demo sessions where technical teams show progress in ways that relate to business outcomes. This practice can build confidence and alignment.

- Develop shared metrics that are relevant to every department involved. Find common ground between different performance indicators.

- Use collaborative tools that allow input from various skill levels without adding extra steps to established workflows.

- Rotate meeting leadership among departments to avoid any impression that AI projects belong solely to IT or data science teams.

- Create a glossary of terms that clarifies technical and business language. This tool can preempt many misunderstandings.

- Prepare for resistance by addressing concerns openly. Some stakeholders might worry about job changes or learning curves.

- Focus on a few high-priority opportunities rather than trying to include every department's ideas. Quality takes precedence over quantity in AI implementation.

- Start small with pilot projects to test assumptions before expanding. This builds confidence across teams and provides valuable learning.

Stakeholder Engagement Summary:

- Conduct regular cross-department workshops.

- Establish clear stakeholder maps and champions.

- Use simple language and visual tools for feedback and demos.

Addressing Communication Gaps Between Teams

- Create a shared AI vocabulary to prevent misunderstandings. Technical teams may use terms like "neural networks" while business teams focus on "ROI."

- Schedule regular cross-functional standups where each team reports progress in everyday language. These brief meetings focus attention on what matters.

- Develop visual communication tools, such as dashboards that display project status in clear terms that everyone can grasp.

- Assign communication liaisons from each department to relay information accurately and efficiently.

- Document decisions and action items in a centralized repository accessible to all teams. This serves as a common reference point.

- Host cross-training sessions where technical and business teams share insights about their fields. Such sessions build mutual understanding.

- Create feedback loops that allow team members to voice concerns safely and without judgment.

- Use collaboration tools that integrate well with existing workflows, ensuring they are adopted naturally.

- Establish clear escalation paths to resolve communication issues quickly.

- Celebrate successful collaboration to reinforce the value of teamwork.

- Implement a "no blame" policy that keeps dialogue open and honest during communication challenges.

Communication Gaps Summary:

- Establish shared vocabulary and regular standups.

- Utilize visual tools and assign dedicated liaisons.

- Document decisions and celebrate effective collaboration.

Building an Agile and Collaborative Workflow

Agile workflows thrive when teams break down silos between departments. Modern AI tools serve as digital bridges, automating routine tasks so employees can focus on problem solving rather than repetitive updates.

At LocalNerds, teams have boosted efficiency by 30% through shared dashboards that connect marketing insights with product development goals. The true progress happens when data experts, marketers, and operations teams speak a common language centered on business outcomes.

Cross-functional teamwork requires more than just advanced tools; it demands a cultural shift. Even if some teams roll their eyes at another collaboration platform, they will join in when they see how AI can predict customer needs before the first call.

Regular stand-ups help maintain alignment without causing meeting fatigue. Improving data literacy across teams strengthens overall performance, allowing even small companies to challenge larger competitors.

Agile Workflow Summary:

- Break departmental silos with shared digital dashboards.

- Foster continuous collaboration through regular stand-ups.

- Enhance team data literacy with easy-to-understand insights.

Frameworks and Methodologies for AI Use Case Evaluation

Effective AI evaluation frameworks act as a roadmap through the challenge of use case assessment. They cut through technical detail with clear metrics and defined decision criteria.

AI Use Case Intake Framework

- Structured Documentation System that captures all essential details of proposed AI use cases in one place, making evaluation more efficient.

- Risk Level Categorization aligned with EU AI Act standards to classify AI applications as minimal, limited, high, or unacceptable risk.

- Data Security Assessment tools that help teams identify potential vulnerabilities in how AI systems will handle sensitive information.

- Privacy Compliance Checklist to verify that all AI proposals meet relevant data protection regulations before moving forward.

- Cross-Functional Accountability Matrix that clearly assigns responsibilities to specific departments and stakeholders throughout the evaluation process.

- Step-by-Step Assessment Worksheet guiding teams through each phase of evaluation from initial proposal to final approval.

- Business Impact Scoring that weighs potential ROI against implementation costs and resource requirements.

- Technical Feasibility Rating system to determine if your organization has the necessary infrastructure and expertise to support the AI use case.

- Ethical Guidelines Integration that incorporates responsible AI practices into the evaluation process rather than treating them as an afterthought.

- Implementation Timeline Projector to map out realistic deployment schedules based on complexity and resource availability.

- Stakeholder Feedback Mechanism that collects input from all affected departments to identify potential roadblocks early.

- Success Metrics Definition tools to establish clear KPIs for measuring the effectiveness of implemented AI solutions.

- Scalability Assessment to determine how well the AI solution can grow with your business needs over time.

- Vendor Evaluation Criteria for organizations planning to use third-party AI solutions rather than building in-house.

- Pilot Testing Framework that outlines how to run small-scale trials before full implementation.

Intake Framework Summary:

- Standardize documentation and risk assessment.

- Integrate security, privacy, and compliance checks.

- Score business impact and technical feasibility to guide selection.
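
As a rough illustration of how risk categorization and the privacy checklist might act as gates in an intake process, consider the sketch below. The four tier names follow the EU AI Act categories listed above, but the routing rules, function name, and return strings are assumptions invented for this example; assigning a tier to a real use case requires proper legal and compliance review.

```python
from enum import Enum


class RiskTier(Enum):
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


def intake_decision(tier: RiskTier, privacy_checklist_done: bool) -> str:
    """Return the next step for a proposal (illustrative routing rules)."""
    if tier is RiskTier.UNACCEPTABLE:
        return "reject"
    if not privacy_checklist_done:
        return "return to submitter: complete privacy checklist"
    if tier is RiskTier.HIGH:
        return "escalate to governance review"
    return "proceed to scoring"


# Hypothetical proposals moving through intake.
print(intake_decision(RiskTier.MINIMAL, privacy_checklist_done=True))
print(intake_decision(RiskTier.HIGH, privacy_checklist_done=True))
print(intake_decision(RiskTier.LIMITED, privacy_checklist_done=False))
```

Putting the gates before business impact scoring means teams do not spend time ranking proposals that could never be approved.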

Next, we look at the metrics and decision criteria used to judge these AI use cases once they pass intake.

Metrics and Decision-Making Criteria

- Performance accuracy metrics track how well your AI model performs its core functions. Models with 95%+ accuracy might sound impressive on paper, but this number means little if they fail on edge cases that matter to your business.

- Bias detection tools help identify unfair patterns in AI decisions, scanning for discriminatory outcomes across different user groups.

- ROI calculation frameworks quantify both direct financial gains and indirect benefits. Smart businesses track cost savings, revenue increases, and productivity boosts to justify continued AI investment.

- Time-to-value measurements track how quickly your AI solution delivers actual business results, distinguishing between quick wins and projects needing longer-term investment.

- User adoption rates reveal whether your team actually uses the AI tools implemented. Low adoption often signals poor training or solutions that do not meet actual needs.

- Explainability tools like SHAP and LIME show which inputs drove a given prediction, helping humans understand AI decisions. Greater transparency builds trust with employees and customers.

- Data quality assessments evaluate the reliability of the information feeding your AI system.

- Compliance checklists for regulations like GDPR and HIPAA help avoid costly legal pitfalls.

- Scalability metrics predict how well your solution will handle increased demands, preventing scenarios where lab success fails in production.

- Continuous monitoring protocols track performance drift over time, ensuring models remain effective after deployment.

- Technical debt indicators measure future work created by current implementations, warning against quick fixes that lead to costly rebuilds.

- Cross-functional impact scores rate how an AI solution affects different departments, ensuring benefits ripple across multiple teams.

Performance Metrics Summary:

- Focus on accuracy, user adoption, and scalability.

- Monitor bias and compliance regularly.

- Define clear metrics to evaluate ROI effectively.
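
The point above about headline accuracy hiding edge-case failures can be shown with a small calculation. The records below are made-up evaluation data for a hypothetical defect detector, and the `is_edge_case` flag is an assumption about how a team might tag the examples that matter most to the business.

```python
def accuracy(pairs):
    """Share of (predicted, actual) pairs that match."""
    pairs = list(pairs)
    if not pairs:
        raise ValueError("no examples to score")
    return sum(pred == actual for pred, actual in pairs) / len(pairs)


# Hypothetical evaluation records: (predicted, actual, is_edge_case)
records = (
    [("ok", "ok", False)] * 95          # routine items, all correct
    + [("ok", "defect", True)] * 3      # missed defects on edge cases
    + [("defect", "defect", True)] * 2  # caught defects on edge cases
)

overall = accuracy((p, a) for p, a, _ in records)
edge = accuracy((p, a) for p, a, is_edge in records if is_edge)

print(f"overall: {overall:.0%}, edge cases: {edge:.0%}")
# overall: 97%, edge cases: 40%
```

A model that looks strong at 97% overall still misses most of the cases the business cares about here, which is why slice-level metrics belong next to the headline number.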

Ensuring Responsible and Ethical AI Deployment

Responsible AI deployment is not just a checkbox; it safeguards your business from risks that could harm your reputation. AI governance frameworks offer a structured path to manage risks while keeping technology aligned with core values.

Think of ethical AI as a moral compass that promotes fairness, transparency, and privacy while preventing biased outcomes. The NIST AI Risk Management Framework and ISO/IEC 42001 provide practical tools to navigate regulatory demands such as the EU AI Act and GDPR.

Building accountability into AI systems protects both your business and customers from harm. Many projects falter because teams cannot explain their AI's decision-making processes.

Ensure your AI solutions include guardrails that promote inclusivity and sustainability. Transparent practices lead to higher adoption rates and build lasting confidence.

Ethical Deployment Summary:

- Adopt established AI governance frameworks.

- Ensure transparency and accountability in AI decisions.

- Maintain compliance with international regulations.

Case Studies: Real-World Applications of AI Use Case Evaluation

These real-world case studies show how Societe Generale ranked around 100 generative AI use cases for risk management and how Hitachi raised warehouse productivity by 8% using cross-functional evaluation methods.

Explore how these organizations turned theoretical AI concepts into practical business wins.

Financial Services: AI for Risk Management at Societe Generale

Societe Generale made waves in financial services by identifying 100 generative AI use cases for risk management. They built a smart framework that ranked each use case based on governance requirements and strategic alignment.

After deployment, they tracked value with concrete metrics like cost savings and revenue gains, proving that AI drives real business results.

This approach shows that traditional financial institutions can transform with AI while keeping compliance in focus.

Financial Services Summary:

- Leverage AI for risk management and compliance.

- Rank use cases by strategic fit and measurable value.

- Monitor outcomes with clear financial metrics.

Manufacturing: Enhancing Efficiency with AI at Hitachi

While financial institutions use AI for risk management, manufacturing giants like Hitachi enhance operations. Hitachi's warehouses saw an impressive 8% productivity boost after introducing AI-driven optimization systems.

They focused on specific production bottlenecks rather than deploying flashy tech without purpose. Their cross-functional evaluation united operations, data scientists, and strategists to target impactful areas.

Manufacturing Summary:

- Improve efficiency by addressing operational bottlenecks.

- Deploy AI where it significantly boosts productivity.

- Utilize cross-functional collaboration to validate solutions.

Retail: Personalization Strategies in E-Commerce

In retail, AI-powered personalization has shifted from a nice-to-have to a must-have. Online retailers use AI to tailor customer experiences, with 78% now using such solutions.

Amazon generates 35% of its revenue from AI-driven product recommendations. ASOS saw a 75% boost in email click-through rates after implementing dynamic content personalization that adjusts to customer behavior.

Retail Summary:

- Utilize AI to personalize customer shopping experiences.

- Enhance engagement through dynamic, real-time content.

- Drive revenue and customer loyalty with targeted strategies.

Healthcare: Targeted AI Solutions with Tangible ROI

Healthcare organizations face pressures that AI can ease with targeted applications. One UK hospital used AI chatbots to free up over 700 appointment slots weekly, showing visible gains in efficiency.

This demonstrates how targeted AI can deliver tangible ROI without overhauling entire systems. Success depends on strong data governance, security protocols, and the right infrastructure.

Healthcare Summary:

- Implement targeted AI solutions to improve care efficiency.

- Prioritize data security and strong governance practices.

- Measure ROI through operational improvements.

Overcoming Common Challenges in AI Use Case Implementation

Managing Resistance to Change

People resist change. I have observed cases where advanced AI tools remained unused because teams clung to familiar routines. Resistance often stems from worries about job security or discomfort with new processes.

Starting with small pilot projects can demonstrate quick wins. In one case, a "tech buddy" system paired skeptics with advocates, halving resistance in three months. Cross-functional collaboration plays a vital role in easing transitions.

Resistance Management Summary:

- Begin with pilot projects to show early benefits.

- Address workforce concerns directly and openly.

- Use cross-functional teamwork to foster acceptance.

Ensuring Explainability and Transparency

AI systems often function as black boxes, making decisions without clear explanations. This opacity can hinder stakeholder buy-in and complicate regulatory compliance. Tools like SHAP (SHapley Additive exPlanations) help open up the black box by showing how much each input contributed to a specific prediction.

Clear, understandable AI builds confidence and smooths implementation. Transparent explanation of model logic can prevent project delays and build trust across departments.
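To make the idea behind SHAP concrete, here is a minimal sketch of exact Shapley value attribution in plain Python. It does not use the SHAP library itself; it computes the underlying Shapley values by brute force, which is only practical for a handful of features. The `toy_model`, the feature names, and the baseline values are all hypothetical and invented for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley attribution for a single prediction.

    predict  : function that takes a dict of feature -> value and returns a number
    instance : the feature values being explained
    baseline : reference values used when a feature counts as "absent"
    """
    features = list(instance)
    n = len(features)

    def value(subset):
        # Features in `subset` take the instance's values; the rest stay at baseline.
        mixed = {f: (instance[f] if f in subset else baseline[f]) for f in features}
        return predict(mixed)

    contributions = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            # Standard Shapley weight for a coalition of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_f = value(set(subset) | {f})
                without_f = value(set(subset))
                total += weight * (with_f - without_f)
        contributions[f] = total
    return contributions


# Hypothetical scoring model, made up for this example.
def toy_model(x):
    return 0.5 * x["income"] + 2.0 * x["on_time_payments"] - 1.5 * x["open_loans"]


applicant = {"income": 60, "on_time_payments": 12, "open_loans": 3}
average = {"income": 50, "on_time_payments": 10, "open_loans": 1}

explanation = shapley_values(toy_model, applicant, average)
for feature, contribution in explanation.items():
    print(f"{feature}: {contribution:+.2f}")
```

The contributions add up to the gap between this applicant's score and the baseline score. That property is what lets a team say "open loans pulled the score down, payment history pushed it up" in plain language.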

Explainability Summary:

- Utilize explainability tools to clarify AI decisions.

- Communicate model logic in plain, accessible language.

- Enhance stakeholder trust through transparency.

Mitigating Risks and Ethical Concerns

AI projects come with ethical challenges that require constant attention. Bias mitigation, clear success metrics, and robust data quality are key to avoiding undesirable outcomes.

Implementing human-in-the-loop systems can help identify issues before full deployment. Clear governance protocols and regular performance checks mitigate risks and support ethical AI practices.
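One simple way to picture a human-in-the-loop setup is confidence-based routing: the model handles cases it is sure about, and a person reviews the rest. The sketch below assumes the model reports a confidence score between 0 and 1. The `Prediction` class, the `route` function, the case IDs, and the 0.85 threshold are hypothetical choices for illustration, not a recommended standard.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    case_id: str
    label: str
    confidence: float  # model's own probability estimate, 0.0 to 1.0


def route(prediction, threshold=0.85):
    """Return 'auto' when the model is confident enough, else 'human_review'."""
    return "auto" if prediction.confidence >= threshold else "human_review"


# Made-up batch of predictions.
batch = [
    Prediction("A-1", "approve", 0.97),
    Prediction("A-2", "reject", 0.62),
    Prediction("A-3", "approve", 0.85),
]

queues = {"auto": [], "human_review": []}
for p in batch:
    queues[route(p)].append(p.case_id)

print(queues)
```

Raising the threshold sends more cases to people and lowers risk at the cost of throughput, so the right value is a governance decision rather than a purely technical one.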

Risk Mitigation Summary:

- Establish continuous monitoring and bias checks.

- Implement clear governance procedures.

- Engage stakeholders in addressing ethical concerns.

Strategic Recommendations for AI Use Case Evaluation

Fostering a Culture of Innovation

Building a culture that embraces AI begins with leadership that values creative thinking. Companies sometimes apply AI tools without altering their mindset, and those efforts fall short. Leaders must create safe spaces where teams can experiment with AI without fear of failure.

Organizations that prioritize continuous learning and encourage smart risk-taking see faster adoption rates and greater innovation. Balancing technical training with creative workshops enables team members to find their unique strategic roles alongside AI tools.

Innovation Culture Summary:

- Promote creative thinking and safe experimentation.

- Encourage continuous learning through regular training.

- Support a culture where AI enhances team capabilities.

Investing in Continuous Learning and Skill Development

A culture of innovation benefits from strategic investments in learning. Companies that focus on workforce training see higher productivity and improved employee morale. Structured upskilling programs can transform teams and strengthen AI implementation.

Targeted training does not require everyone to learn to code. Instead, it builds the skills people need for roles that complement AI. Modern training platforms can automate parts of the learning process and track progress along the way.

Learning and Development Summary:

- Create focused pathways for AI upskilling.

- Improve morale with structured and measurable training.

- Use technology platforms to monitor and enhance skill development.

Leveraging Technology Platforms for Scalability

Technology platforms form the backbone of scalable AI initiatives. Many companies try to build everything from scratch, only to face delays while competitors advance with proven platforms. Using established AI solutions lets teams focus on solving business problems rather than technical plumbing.

Platforms that standardize workflows across departments help scale projects efficiently. Establishing a solid data foundation makes datasets accessible to all teams and reduces deployment times significantly.

Technology Platforms Summary:

- Adopt proven platforms for efficient AI implementation.

- Scale successful projects across departments seamlessly.

- Maintain a strong data foundation for ongoing growth.

Conclusion

Cross-functional AI evaluation requires strategic thinking. Frameworks that connect technical capabilities with business goals help teams identify high-value opportunities without following AI hype.

Organizations need clear metrics, honest feasibility assessments, and genuine stakeholder input to succeed.

AI adoption works best as a gradual process, not as a sudden overhaul. Begin with small projects, measure results carefully, and expand the initiatives that succeed. Throughout, keep departments working together; that collaboration is a key advantage in building AI that delivers measurable ROI.

For more on how to discover and prioritize AI use cases for your business, see our detailed guide on AI Use Case Discovery and Prioritization Strategies.

FAQs

1. What are Cross-Functional AI Use Case Evaluation Methods?

Cross-Functional AI Use Case Evaluation Methods are structured approaches teams use to assess AI projects across different departments. They help companies pick the right AI applications that bring value to multiple areas.

2. Why should companies use cross-functional teams when evaluating AI use cases?

Different departments offer fresh perspectives for AI evaluation. Marketing sees customer benefits, IT identifies technical hurdles, and finance calculates ROI. This mix avoids blind spots and builds company-wide support for AI initiatives.

3. What metrics matter most in cross-functional AI evaluations?

ROI tops the list, but do not ignore implementation complexity and alignment with business goals. Teams should also measure potential risks, required resources, and scalability. The best metrics vary by company, much like unique fingerprints.

4. How often should cross-functional teams review AI use cases?

Quarterly reviews work well for most organizations. Fast-paced industries might require more frequent check-ins to remain competitive. The tech landscape changes quickly; yesterday's rejected AI use case might turn into tomorrow's breakthrough.
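The criteria named in question 3 (ROI, implementation complexity, goal alignment, risk, resources, and scalability) can be combined into a single weighted score so teams can compare use cases side by side. The sketch below is one possible approach, assuming each criterion is rated from 1 to 5 in a cross-functional workshop. The weights, the use case names, and the ratings are all hypothetical, and each company should set its own.

```python
# Hypothetical weights; they sum to 1.0 and should be agreed on by the team.
WEIGHTS = {
    "roi": 0.30,
    "strategic_alignment": 0.20,
    "scalability": 0.15,
    "complexity": 0.15,
    "risk": 0.10,
    "resources": 0.10,
}

# For these criteria a high rating is bad, so the rating is inverted.
LOWER_IS_BETTER = {"complexity", "risk", "resources"}


def score(ratings):
    """Weighted score on a 1-5 scale; cost-type criteria are flipped (1 <-> 5)."""
    total = 0.0
    for criterion, weight in WEIGHTS.items():
        rating = ratings[criterion]
        if criterion in LOWER_IS_BETTER:
            rating = 6 - rating
        total += weight * rating
    return round(total, 2)


# Made-up ratings for two made-up use cases.
use_cases = {
    "invoice_triage": {
        "roi": 4, "strategic_alignment": 3, "scalability": 4,
        "complexity": 2, "risk": 2, "resources": 2,
    },
    "demand_forecast": {
        "roi": 5, "strategic_alignment": 5, "scalability": 3,
        "complexity": 5, "risk": 4, "resources": 5,
    },
}

ranked = sorted(use_cases, key=lambda name: score(use_cases[name]), reverse=True)
for name in ranked:
    print(name, score(use_cases[name]))
```

In this made-up example, the use case with the higher ROI rating ranks second because it is complex, risky, and resource-hungry. That trade-off is exactly what a cross-functional review is meant to surface.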

Disclosure: This content is informational and reflects expert insights from WorkflowGuide.com. There are no sponsorships, affiliate links, or conflicts of interest influencing this material.
