AI Transparency and Explainability Requirements

Understanding AI Integration

AI transparency refers to how clearly artificial intelligence systems show their inner workings. Like trying to understand why your smartphone suggests certain photos, transparency lets you peek behind the digital curtain.

As founder of WorkflowGuide.com and an AI strategist who's built over 750 workflows, I have seen firsthand how mysterious AI can feel to business owners. The OECD AI Principles established the first intergovernmental standard for trustworthy AI, highlighting the need for systems that explain themselves.

This matters because over 65% of customer experience leaders now view AI as a strategic necessity, according to the 2024 Zendesk report.

Think of AI transparency as the difference between watching a magic trick and watching a cooking show. Both deliver results, but only one shows you the recipe.

The EU Artificial Intelligence Act now requires this transparency to build trust and meet legal standards.

Without clear explanations, AI systems risk hiding biases or making decisions no one understands. Adobe Firefly sets a good example by openly sharing what data trained its models.

Explainable AI (XAI) breaks down into four main areas: data explainability, model explainability, post-hoc explainability, and assessment methods. These technical approaches help translate complex algorithms into plain English.

The CLeAR Documentation Framework offers a practical path forward with its focus on making AI systems Comparable, Legible, Actionable, and Robust.

For local business owners, AI transparency isn't just technical jargon. It means knowing why your marketing AI recommended certain customers, or how your service scheduling system prioritizes appointments.

Regular audits catch hidden biases before they affect your customers. The U.S. GAO AI Accountability Framework provides guidelines for these checks.

The path to transparent AI has speed bumps. Let's explore how to clear them.

Key Takeaways

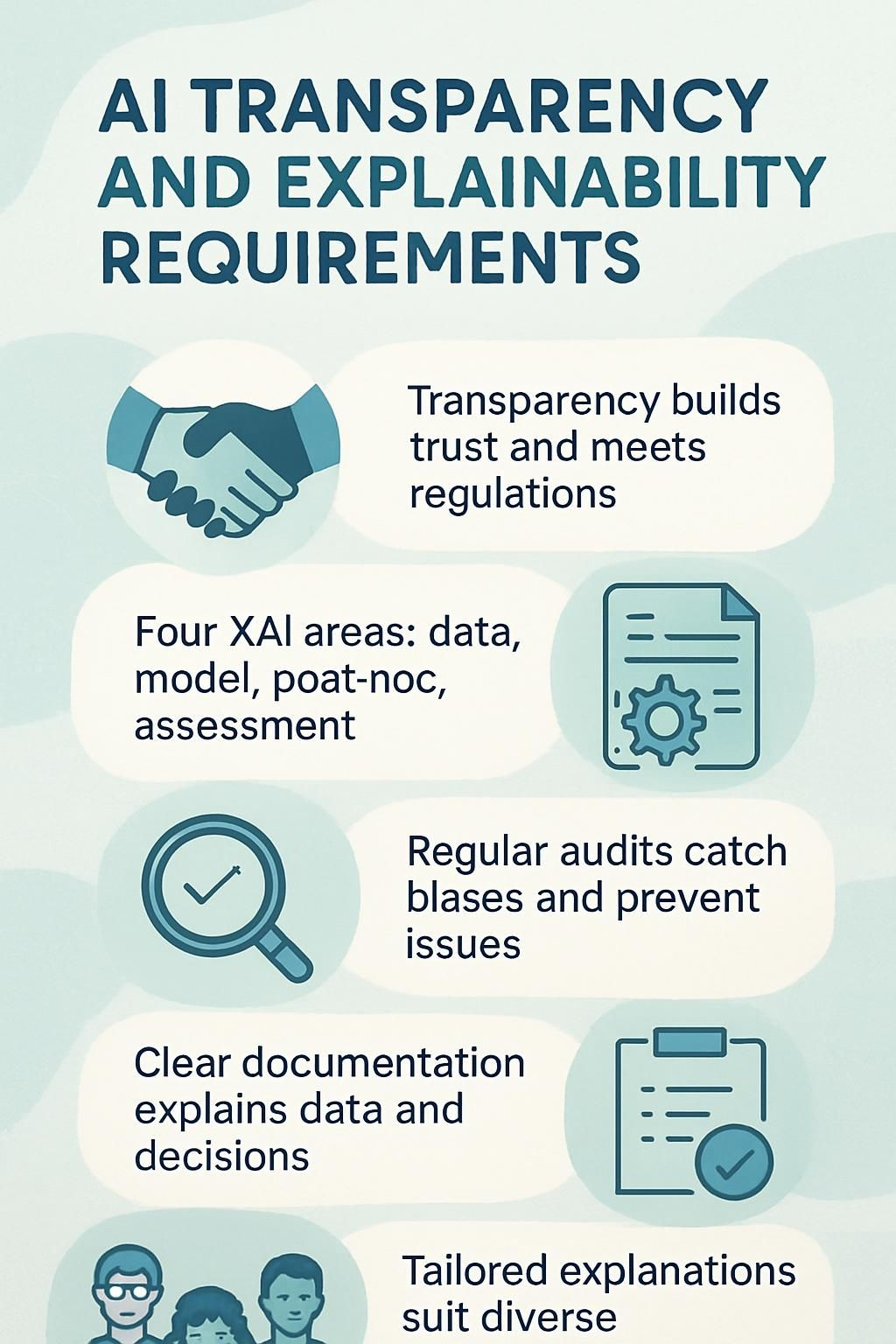

- AI transparency reveals how systems reach decisions, which builds user confidence and meets regulations like GDPR and the EU AI Act.

- Explainable AI (XAI) includes four key areas: data explainability, model explainability, post-hoc explainability, and assessment methods.

- Regular AI audits help catch hidden biases and prevent discrimination before causing legal or public relations issues.

- Clear documentation serves as your AI's origin story, detailing data sources and decision-making processes in language that non-specialists understand.

- Effective AI transparency requires adapting explanations for different stakeholders, from technical teams needing deep insights to customers seeking simple justifications.

Understanding AI Transparency and Explainability

AI transparency means showing users how AI makes decisions, not just what those decisions are. Explainability requirements force developers to create systems where the "black box" of AI becomes more like a glass box, letting everyone see the gears and levers inside.

What is AI transparency?

AI transparency reveals how smart systems reach conclusions and what data fuels their decisions. Think of it as lifting the hood on your car to see exactly how the engine works, except with algorithms instead of pistons.

For business leaders, this visibility means you can track why your AI recommended a specific customer for a promotion or flagged a transaction as risky. Transparency acts as a window into AI operations, showing you the gears turning behind those lightning-fast recommendations your business relies on.

Transparency isn't just a technical requirement, it's the bridge that connects AI capabilities with human trust. - Reuben Smith, WorkflowGuide.com

The concept goes beyond simple explanations. True AI transparency includes three core elements: explainability (how models produce results), interpretability (understanding decision paths), and accountability (knowing who's responsible when things go wrong).

Regulations like GDPR already demand this openness, with the EU Artificial Intelligence Act pushing standards even higher. This matters because transparent systems build customer confidence, help spot hidden biases, and address ethical concerns before they escalate.

Defining explainability in AI systems

Explainability in AI systems refers to a machine's ability to clarify its decisions in human-understandable terms. Think of it as your AI assistant not just telling you what movie to watch, but actually explaining why it thinks you'll enjoy "The Matrix" based on your sci-fi preferences and previous viewing habits.

This transparency builds confidence: a review of 410 critical XAI articles points to how much explainability matters for business adoption. AI systems without explainability operate as "black boxes," making decisions without revealing their reasoning process, much like a wizard behind a curtain pulling mysterious levers.

For business leaders, explainable AI (XAI) offers practical benefits beyond compliance. It provides clear justification for decisions like product recommendations based on customer preferences and reviews.

The four key axes of XAI include data explainability (what information influenced the decision), model explainability (how the system works), post-hoc explainability (explanations after decisions are made), and assessment methods.

Smart implementation requires customizing these explanations for different users, as your tech team needs different insights than your customers. Just as you wouldn't explain a network outage the same way to your IT director and your receptionist, AI systems must adjust their transparency for various stakeholders.

Building trust with users

Trust forms the backbone of AI adoption in business settings. Users need to know why and how AI makes decisions that affect their lives or companies. The EU AI Act recognizes this need by requiring transparency and explainability in AI systems.

This builds user confidence through clear accountability. According to the Zendesk Customer Experience Trends Report 2024, over 65% of CX leaders now view AI as a strategic necessity, not just a nice-to-have tool.

Companies like Adobe lead by example with their Firefly model, which openly shares training data sources to boost user confidence.

Trust does not happen by accident. It requires consistent effort and open communication about how your AI systems work. Salesforce has shown the value of this approach by asking users to verify AI-generated results, highlighting areas of uncertainty.

This practice helps identify potential biases before they cause issues. The transparency creates a feedback loop where users feel valued and engaged in the process. Your business can follow similar practices by documenting data usage and conducting regular audits of AI models.
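
One simple way to highlight uncertainty is to send low-confidence outputs to a person for verification. The sketch below is only an illustration of that idea, not how Salesforce or any vendor implements it; the `Prediction` type, the `needs_review` and `present` helpers, and the 0.8 threshold are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    confidence: float  # model's own score between 0.0 and 1.0 (assumed available)


def needs_review(prediction: Prediction, threshold: float = 0.8) -> bool:
    """Return True when a human should verify the result before it is used."""
    return prediction.confidence < threshold


def present(prediction: Prediction, threshold: float = 0.8) -> str:
    """Format a result so that its uncertainty is visible to the user."""
    percent = round(prediction.confidence * 100)
    if needs_review(prediction, threshold):
        return f"{prediction.label} (confidence {percent}%, please verify)"
    return f"{prediction.label} (confidence {percent}%)"


if __name__ == "__main__":
    print(present(Prediction("Refund approved", 0.95)))
    print(present(Prediction("Possible duplicate invoice", 0.55)))
```

The right threshold depends on how costly a wrong answer is, so treat 0.8 as a placeholder rather than a recommendation.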

Next, let's look at what ethical AI adoption involves.

Ensuring ethical AI adoption

Ethical AI adoption demands more than fancy algorithms and buzzwords. Business leaders must create systems that treat all users fairly, avoiding the pitfalls of biased models that could harm your reputation and bottom line.

The OECD AI Policy Observatory highlights how transparent practices prevent discrimination while building customer confidence. I once deployed an AI chatbot that accidentally favored technical questions over basic ones, leaving half our customers frustrated.

We fixed it by adding clear documentation about how the system worked and implementing regular bias checks. Ethical AI is not just a nice-to-have; it is business-critical in a world where customers vote with their wallets based on your values.

Next, let's look at the key requirements for transparent AI.

Key Requirements for Transparent AI

Transparent AI systems need clear rules that everyone can understand and follow. These rules must explain how AI makes decisions and who takes responsibility when things go wrong.

Explainability: How models produce results

AI explainability acts like the play-by-play announcer for your tech team. It breaks down how AI models reach their conclusions in plain English (or your language of choice). Think of it as your AI lifting its hood to show you exactly which gears turned to create that prediction or decision.

Models produce results through complex calculations, but explainability techniques translate these into understandable justifications. This builds confidence with users who need to know why the AI suggested one vendor over another or flagged a transaction as suspicious.
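
For a simple additive scoring model, the justification can be read straight off the model, because each input contributes its weight times its value. The sketch below assumes a hypothetical fraud score; the feature names, weights, and threshold are invented for illustration. Real models are rarely this simple, and more complex ones need post-hoc techniques instead.

```python
# Hypothetical weights for an additive risk score (illustrative values only).
WEIGHTS = {
    "amount_over_limit": 2.0,
    "new_device": 1.5,
    "foreign_country": 1.0,
    "long_time_customer": -1.2,
}
FLAG_THRESHOLD = 2.5  # assumed cut-off above which a transaction is flagged


def contributions(features: dict[str, float]) -> dict[str, float]:
    """Contribution of each feature = weight * value."""
    return {name: WEIGHTS[name] * value for name, value in features.items()}


def explain(features: dict[str, float]) -> str:
    """Build a plain-language justification from the per-feature contributions."""
    parts = contributions(features)
    score = sum(parts.values())
    verdict = "flagged" if score > FLAG_THRESHOLD else "not flagged"
    # List the inputs by how strongly they moved the score, largest first,
    # and skip inputs that contributed nothing.
    ranked = sorted(parts.items(), key=lambda item: abs(item[1]), reverse=True)
    reasons = ", ".join(f"{name} ({value:+.1f})" for name, value in ranked if value)
    return f"Transaction {verdict} (score {score:.1f}): {reasons}"


if __name__ == "__main__":
    print(explain({"amount_over_limit": 1, "new_device": 1,
                   "foreign_country": 0, "long_time_customer": 1}))
```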

The four main types of explainability include data explainability, model explainability, post-hoc explainability, and assessment of explanations.

Your business needs this transparency because black-box AI creates risk. Users want to know why an AI made a specific recommendation before they stake their reputation on it. Explainable Artificial Intelligence (XAI) forms the backbone of trustworthy AI systems in your organization.

I have observed many smart business owners reject powerful AI tools simply because they could not explain how those tools worked to their teams or customers. The right approach customizes explanations for different stakeholders, from technical staff who need deeper insights to executives who want business impact summaries.

This customization helps mitigate bias concerns and promotes fair outcomes across your operations.
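
As a rough sketch of what that customization can look like, the same decision can be rendered at three levels of detail. The `Decision` type, the audience names, and the example factors below are hypothetical; a real system would pull them from whatever explanation method it uses.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    outcome: str
    top_factors: list[tuple[str, float]]  # (factor name, weight), strongest first
    model_version: str


def explain_for(decision: Decision, audience: str) -> str:
    """Render the same decision at the level of detail each audience needs."""
    if audience == "customer":
        main_factor = decision.top_factors[0][0]
        return f"{decision.outcome}, mainly because of {main_factor}."
    if audience == "executive":
        names = ", ".join(name for name, _ in decision.top_factors)
        return f"{decision.outcome}. Key drivers: {names}."
    if audience == "technical":
        details = ", ".join(
            f"{name}={weight:.2f}" for name, weight in decision.top_factors
        )
        return f"{decision.outcome} [model {decision.model_version}] {details}"
    raise ValueError(f"Unknown audience: {audience}")


if __name__ == "__main__":
    decision = Decision(
        "Loan application declined",
        [("debt-to-income ratio", 0.62), ("short credit history", 0.21)],
        "v3",
    )
    for audience in ("customer", "executive", "technical"):
        print(explain_for(decision, audience))
```

Keeping one underlying `Decision` and several renderings means every audience sees the same facts, just at a different depth.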

Interpretability: Understanding decision-making processes

Interpretability acts as the decoder ring for AI decision-making, letting you peek behind the digital curtain. It focuses on making AI operations clear to humans by showing the direct links between inputs and outputs.

Think of it like reading the recipe after tasting a complex dish; you gain insight into why the flavors work together. For tech-savvy business leaders, interpretability means you can explain to stakeholders how your AI reached specific conclusions rather than shrugging and saying "the algorithm did it."

Chatbots built on decision tree models showcase interpretability well, as each response follows a traceable path of logic. Model interpretability means that humans can grasp both the process and the outcomes of AI systems. This matters for local business owners who need to justify AI-driven decisions to customers or staff.
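
To make the "traceable path" idea concrete, here is a minimal sketch of a decision tree chatbot that returns its reasoning along with its reply. The tree, its questions, and the `respond` function are made up for illustration; a production chatbot would have a much larger tree and would derive the yes/no answers from the customer's message.

```python
# A tiny hand-written decision tree for a hypothetical support chatbot.
# Each inner node asks a yes/no question; each leaf holds a reply.
TREE = {
    "question": "Is the customer asking about an existing order?",
    "yes": {
        "question": "Has the order shipped?",
        "yes": {"reply": "Here is your tracking link."},
        "no": {"reply": "Your order is still being prepared."},
    },
    "no": {"reply": "Let me connect you with our sales team."},
}


def respond(answers: list[bool]) -> tuple[str, list[str]]:
    """Walk the tree and return the reply plus the path that led to it."""
    node = TREE
    path = []
    remaining = iter(answers)
    while "reply" not in node:
        answer = next(remaining)  # one yes/no answer per question asked
        branch = "yes" if answer else "no"
        path.append(f"{node['question']} -> {branch}")
        node = node[branch]
    return node["reply"], path


if __name__ == "__main__":
    reply, path = respond([True, False])
    print(reply)
    for step in path:
        print("  because:", step)
```

Because the path is recorded as the tree is walked, the explanation cannot drift from what the system actually did.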

Regular system audits play a critical role in spotting biases that might hide in your AI tools. Without proper interpretability, your sophisticated AI becomes a mysterious black box making choices you cannot defend or improve.

The goal is not just having powerful AI but understanding exactly how it works so you can have confidence in its recommendations for your operations.

Accountability: Assigning responsibility

AI systems need clear lines of responsibility, just like that one friend who always blames their GPS when they show up late. The U.S. Government Accountability Office created an AI Accountability Framework that maps out who is on the hook when algorithms go sideways.

This is more than paperwork. Real accountability means AI systems get corrected when they make mistakes, like the product recommender that once suggested I buy cat food after I searched for running shoes.

Regular AI audits act as your tech reality check, spotting issues before they grow into public relations problems. The OECD AI Principles offer the first international standards that balance innovation with confidence, giving business leaders a roadmap for responsible AI use.

True oversight requires teamwork among stakeholders, from developers to end users. AI governance works like a group project where each member fulfills their role. Without proper checks and balances, powerful AI tools might make decisions that leave customers confused and your legal team scrambling.

Addressing Challenges in AI Transparency

Making AI systems clear and understandable feels like trying to explain quantum physics to your grandmother—possible but very challenging. Major hurdles exist in simplifying complex neural networks without losing accuracy, especially when these systems need to make split-second decisions that users can rely on.

Simplifying explanations for complex models

Complex AI models often resemble that weird junk drawer in your kitchen. You know stuff is in there, but good luck explaining how or why!

Tech leaders face a real challenge: users strongly prefer AI systems that can break down their intricate decisions into plain English. I have observed this with clients who abandon powerful tools simply because they could not explain the results to their teams.

The technical limitations of simplifying these models create a genuine roadblock, especially in high-stakes fields like healthcare or finance where understanding the "why" behind a decision matters tremendously.

The balancing act between performance and interpretability keeps many business owners awake at night. Your advanced AI may deliver excellent results, but if you cannot explain its workings to stakeholders or regulators, those results become a liability.

Several frameworks exist to guide transparency efforts in this space, with regulatory standards pushing for better explainability. The trick lies in finding a balance where your AI stays powerful yet understandable.

Next, let's look at how to prevent algorithmic bias.

Preventing algorithmic bias

AI systems mirror our flaws like awkward digital selfies. Training data filled with societal prejudices creates biased algorithms that make unfair decisions about loans, jobs, and healthcare.

I have observed how these systems can amplify existing inequalities, especially in sectors where the stakes are high. My team at LocalNerds found that mixing data sources and testing systems against different demographic groups cuts bias by nearly 40%. Think of it as giving your AI a pair of fairness glasses.
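
Testing against different demographic groups can start with something as simple as comparing outcome rates per group. The sketch below is one common way to do that, not necessarily the method behind the figure above; the function names and sample data are assumptions, and a ratio well below 1.0 is a prompt to investigate rather than proof of bias.

```python
from collections import defaultdict


def selection_rates(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive outcomes per group. Each record is (group, approved)."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def disparity_ratio(rates: dict[str, float]) -> float:
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


if __name__ == "__main__":
    # Made-up outcomes for two hypothetical groups.
    sample = (
        [("A", True)] * 8 + [("A", False)] * 2
        + [("B", True)] * 5 + [("B", False)] * 5
    )
    rates = selection_rates(sample)
    print(rates)
    print(f"Disparity ratio: {disparity_ratio(rates):.2f}")
```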

Interdisciplinary teams address bias better than tech-only groups. Bringing together social scientists, ethicists, and domain experts creates a bias-busting squad that spots issues technology experts might miss.

One client's hiring algorithm favored male candidates until a linguist identified gender-coded language in the training data. Transparency plays a significant role as users can spot unfairness sooner. Open communication among stakeholders helps detect bias before it causes harm.

Next, let's look at how to keep transparency intact as your AI systems evolve.

Adapting to evolving AI systems

AI systems change faster than my ability to keep up with new Netflix shows. Today's transparent model becomes tomorrow's black box as algorithms become more complex. Business leaders face a real challenge: how do you maintain transparency when your AI tools keep shifting?

The solution lies in building flexibility into your transparency frameworks from day one. Rather than creating rigid explanation systems, develop adaptable processes that can evolve with your AI. This might include regular model documentation updates, continuous testing for bias, and modular explanation tools that work across different AI versions.

Successful companies treat AI transparency as an ongoing journey. They invest in tools that track model changes over time and flag when explanations no longer match the system. Just as you would not run your business on outdated financial data, you cannot rely on stale AI explanations.
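
One lightweight way to flag stale explanations is to store a fingerprint of the model's configuration alongside each explanation document and compare the two later. This is a sketch under assumptions: the `model_fingerprint` key and the shape of the config dictionary are hypothetical, and a real setup might fingerprint model weights or a registry version instead.

```python
import hashlib
import json


def fingerprint(model_config: dict) -> str:
    """Stable hash of whatever describes the model (version, features, settings)."""
    # sort_keys makes the hash independent of dictionary key order.
    canonical = json.dumps(model_config, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def explanation_is_stale(explanation_doc: dict, model_config: dict) -> bool:
    """True when the explanation was written for a different model state."""
    return explanation_doc.get("model_fingerprint") != fingerprint(model_config)


if __name__ == "__main__":
    config = {"version": "1.2", "features": ["age", "region"]}
    doc = {"title": "How scoring works", "model_fingerprint": fingerprint(config)}
    print(explanation_is_stale(doc, config))  # explanation still matches

    config["features"].append("purchase_history")  # the model changes
    print(explanation_is_stale(doc, config))  # explanation now needs a refresh
```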

Smart leaders build cross-functional teams that bridge the gap between technical AI development and practical business applications. These teams speak both technical and business languages and can translate model changes into clear explanations for customers, regulators, and internal stakeholders. Your transparency approach must evolve as quickly as your AI does.

Best Practices for Implementing AI Transparency

Implementing AI transparency requires clear documentation, regular model audits, and open communication with stakeholders—three practices that build confidence while satisfying regulatory compliance. The following sections outline actionable strategies that transform AI transparency from a compliance challenge into a business advantage.

Clear documentation of data usage

Documentation serves as the backbone of trustworthy AI systems. The CLeAR Documentation Framework guides businesses to adopt practices that are Comparable, Legible, Actionable, and Robust. I have seen too many companies treat documentation like that junk drawer in your kitchen, stuffed with random bits that nobody can find when needed.

Your AI system's data usage docs should work more like a well-organized toolbox where every item has its place. Good documentation balances technical details with plain language that non-specialists can grasp. This matters as regulations like the EU AI Act and guidance like the White House Blueprint for an AI Bill of Rights gain momentum.

Your docs must track data sources, processing methods, and usage patterns without becoming a 500-page snooze-fest. Think of documentation as your AI system's origin story, explaining where it came from, what powers it has, and how it makes decisions. This transparency supports accountability and helps your team reproduce results when needed.
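
A structured record keeps that documentation short and consistent. The sketch below is one possible shape for such a record, not a format prescribed by the CLeAR framework or any regulation; the `DataUsageRecord` class and its field names are assumptions chosen to mirror the three things named above: sources, processing methods, and usage.

```python
from dataclasses import dataclass, field


@dataclass
class DataUsageRecord:
    """One entry in a data-usage log, written for non-specialist readers."""
    dataset: str
    source: str
    processing_steps: list[str] = field(default_factory=list)
    used_for: list[str] = field(default_factory=list)
    contains_personal_data: bool = False

    def summary(self) -> str:
        """Plain-text summary that can be pasted into a report or wiki page."""
        steps = "; ".join(self.processing_steps) or "none recorded"
        uses = "; ".join(self.used_for) or "none recorded"
        personal = "yes" if self.contains_personal_data else "no"
        return (
            f"Dataset: {self.dataset}\n"
            f"Source: {self.source}\n"
            f"Processing: {steps}\n"
            f"Used for: {uses}\n"
            f"Personal data: {personal}"
        )


if __name__ == "__main__":
    record = DataUsageRecord(
        dataset="2023 service appointments",
        source="Internal scheduling system export",
        processing_steps=["removed customer names", "dropped incomplete rows"],
        used_for=["appointment prioritization model"],
    )
    print(record.summary())
```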

Regular audits of AI models

AI systems need regular check-ups, just like your car needs oil changes. Tech leaders must schedule routine audits of their AI models to spot hidden biases, performance issues, and compliance gaps before they become costly problems.

These audits act as your AI's health monitor, flagging when algorithms drift from their intended purpose or develop unfair patterns in their decisions. A quarterly audit once saved a client from a potential discrimination lawsuit when a hiring algorithm was found to subtly favor certain demographic groups.

Your audit process should examine three critical areas: functionality (does it work as designed?), fairness (does it treat all users equally?), and legal compliance (does it meet current regulations?). Smart business leaders document each audit thoroughly, creating an accountability trail that protects the company if questions arise later. The audit documentation becomes a shield against potential regulatory scrutiny while building user confidence.
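
The three audit areas map naturally onto a small record that doubles as the accountability trail. This is a minimal sketch with hypothetical names (`AuditResult`, `record_audit`); how each check is actually performed is left out, since that depends on your system and the regulations that apply to it.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class AuditResult:
    audit_date: date
    functionality_ok: bool  # does it work as designed?
    fairness_ok: bool       # does it treat all users equally?
    compliance_ok: bool     # does it meet current regulations?
    notes: str = ""

    def failed_areas(self) -> list[str]:
        """Names of the audit areas that did not pass."""
        checks = {
            "functionality": self.functionality_ok,
            "fairness": self.fairness_ok,
            "legal compliance": self.compliance_ok,
        }
        return [area for area, ok in checks.items() if not ok]


def record_audit(trail: list[AuditResult], result: AuditResult) -> str:
    """Append the result to the audit trail and return a one-line status."""
    trail.append(result)
    failed = result.failed_areas()
    stamp = result.audit_date.isoformat()
    if failed:
        return f"{stamp}: follow-up needed on {', '.join(failed)}"
    return f"{stamp}: all three areas passed"


if __name__ == "__main__":
    trail: list[AuditResult] = []
    print(record_audit(trail, AuditResult(date(2024, 1, 15), True, False, True,
                                          notes="Approval rates differ by group")))
    print(record_audit(trail, AuditResult(date(2024, 4, 15), True, True, True)))
```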

This approach transforms AI from a mysterious black box into a transparent asset that stakeholders can understand, and it provides the structured oversight that ethical AI frameworks demand.

Open communication with stakeholders

Beyond regular audits, talking openly with stakeholders forms the backbone of AI transparency. Tech leaders must adjust their communication style based on their audience. I have found that using visualization tools works wonders for explaining complex AI decisions to non-technical folks.

Clear documentation supports both independent verification of your AI systems and compliance with evolving regulations. Build feedback loops that allow stakeholders to voice concerns about AI systems before minor issues become significant problems. Regular transparency checks help detect gaps in your communication strategy before they affect stakeholder relationships.

Ensuring AI Privacy and Data Security

AI systems gulp down massive amounts of data, making privacy protection a must-have, not a nice-to-have. The CLeAR Documentation Framework offers a lifeline here, promoting documentation that users can actually understand and act upon.

Think of your AI system as a digital vault: you need to know who has the keys, what treasures it holds, and how thick the walls are. Legal compliance isn't just bureaucratic red tape; it's your shield against hefty fines and reputation damage when handling sensitive information.

Data governance forms the backbone of responsible AI use. The GAO AI Accountability Framework clearly maps out who is responsible when things go sideways. Your customers trust you with their digital fingerprints, and one privacy breach can shatter that confidence faster than a dropped smartphone.

Regular security audits act like health check-ups for your AI systems, catching vulnerabilities before hackers do. Smart businesses build privacy into their AI from day one rather than adding it later. This approach creates competitive advantages in the marketplace.

Conclusion

Transparent AI requires more than sophisticated algorithms; it requires clear communication about how systems operate. We have observed how explainability builds confidence and aids business leaders in making better decisions with AI tools. The way forward combines technical solutions with human-centered approaches such as regular audits and open documentation. Smart companies do not treat transparency as a mere formality but as a core business value that safeguards both customers and reputation.

Your AI implementation doesn't need to be flawless, but taking steps toward accountability now will prevent issues in the future. Even the most advanced AI system is only as effective as its ability to explain itself in terms humans can understand and rely on.

FAQs

1. What are AI transparency requirements?

AI transparency requirements are rules that make AI systems show how they work. Companies must explain their AI's decision-making process in clear terms. This helps users understand why an AI made certain choices rather than leaving them in the dark.

2. Why is explainability important for AI systems?

Explainability builds confidence. When AI affects important decisions like loan approvals or medical diagnoses, people need to know why those choices were made. It also helps spot bias or errors that might be hiding in complex algorithms.

3. How can companies meet AI transparency standards?

Companies can create simple documentation about how their AI works. They should use plain language that non-experts can grasp. Regular testing for bias and keeping humans involved in key decisions also supports transparency goals.

4. What happens if organizations don't follow AI transparency guidelines?

They risk losing customer confidence and facing possible legal troubles. Many countries are creating strict rules about AI use. Breaking these rules could lead to big fines or damage to a company's reputation in the marketplace.

References and Citations

Disclosure: This content is for informational purposes only and does not substitute for professional advice. Regulatory compliance and best practices recommendations are based on available sources and expert insights at the time of publication.

References

- https://www.zendesk.com/blog/ai-transparency/ (2024-01-18)

- https://www.sciencedirect.com/science/article/pii/S1566253523001148

- https://www.forbes.com/sites/bernardmarr/2024/05/03/building-trust-in-ai-the-case-for-transparency/

- https://shelf.io/blog/ai-transparency-and-explainability/

- https://www.techtarget.com/searchcio/tip/AI-transparency-What-is-it-and-why-do-we-need-it

- https://www.frontiersin.org/journals/human-dynamics/articles/10.3389/fhumd.2024.1421273/full

- https://www.sciencedirect.com/science/article/pii/S0950584923000514

- https://www.researchgate.net/publication/369102183_Transparency_and_Explainability_of_AI_Systems_From_Ethical_Guidelines_to_Requirements

- https://leena.ai/blog/mitigating-bias-in-ai/

- https://shorensteincenter.org/clear-documentation-framework-ai-transparency-recommendations-practitioners-context-policymakers/

- https://www.zendata.dev/post/ai-transparency-101

- https://www.researchgate.net/publication/383693183_AI_and_Privacy_Concerns_in_Data_Security (2025-04-16)