AI Success Metrics by Business Function


Measuring AI success requires a different approach than tracking traditional business performance. Companies now need specific metrics to evaluate how well their AI systems work across various departments.

"Most businesses struggle with AI metrics because they try to force old KPIs onto new technology," says Reuben "Reu" Smith, founder of WorkflowGuide.com and AI Automation Strategist.

Traditional metrics like precision and recall still matter, but generative AI demands new evaluation methods. These include subjective assessments for qualities like coherence and safety.

Smart businesses track both technical performance and business impact to get the complete picture.

AI metrics fall into five key categories: model quality, system quality, business operational, adoption, and business value. Each category serves a specific purpose in understanding AI effectiveness.

For example, adoption metrics reveal how often employees actually use AI tools, while business value KPIs measure concrete improvements like cost savings or increased productivity.

Companies that master these metrics gain a competitive edge. Real-world success stories prove this point. Coca-Cola uses AI-powered sentiment analysis to boost customer experience, while 56% of HR departments using AI report better collaboration.

The challenge lies in selecting the right metrics for each business function. Customer service teams need different KPIs than manufacturing or marketing departments. This article breaks down the most valuable metrics by function and provides practical frameworks for measuring AI's true impact.

Key points:

- Differentiating metrics by business function is critical.

- Traditional metrics like precision and recall are still relevant alongside newer subjective metrics.

- Real-world examples support the business impact of AI metrics.

We'll explore how to set up real-time dashboards, conduct effective A/B testing, and overcome common measurement obstacles. Ready for better AI results?

Key Takeaways

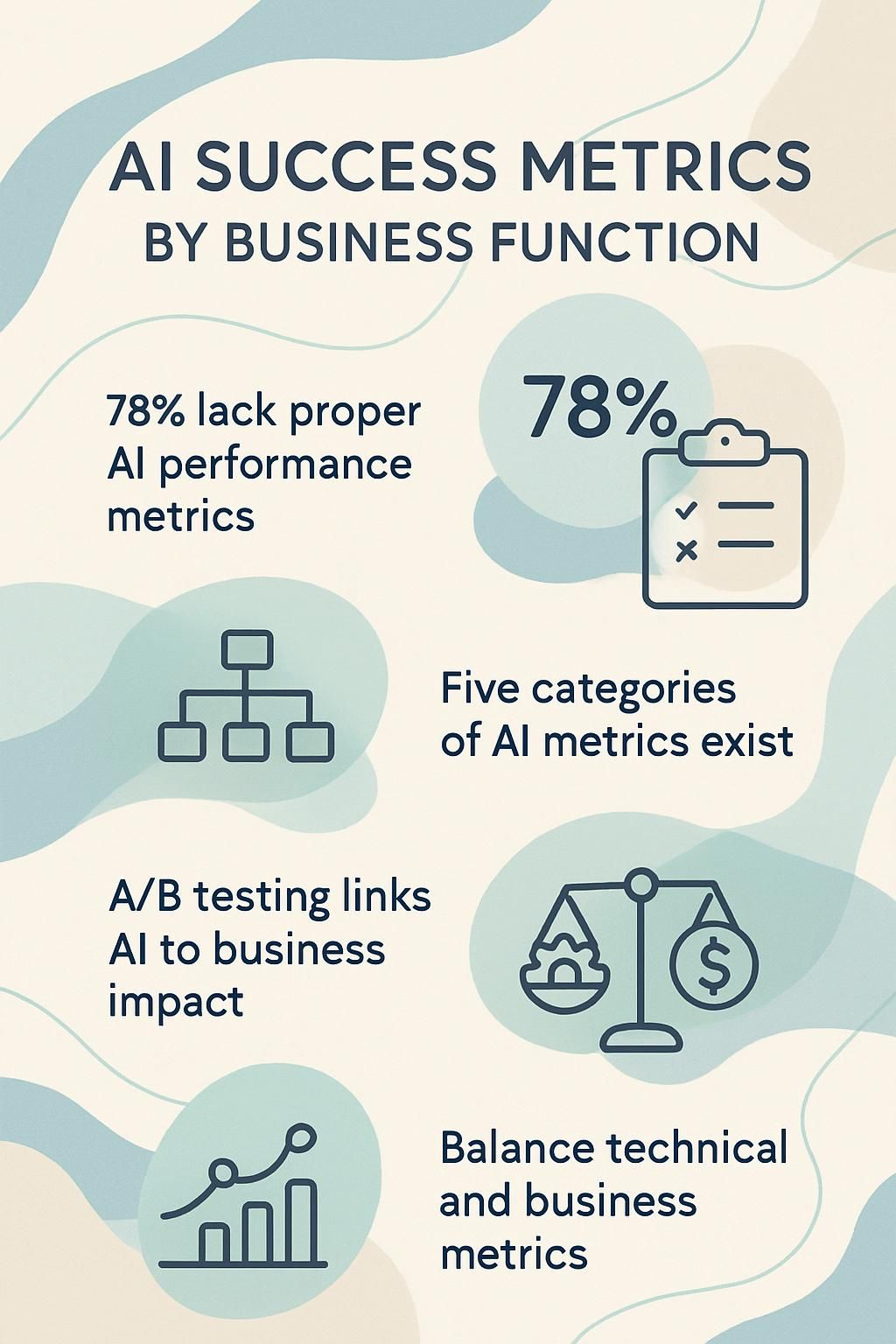

- 78% of companies lack proper metrics to track AI performance across departments, showing a critical gap in measurement practices.

- Effective AI metrics connect model behavior directly to business outcomes like dollars saved, hours recovered, or improved customer satisfaction.

- Five key categories of AI metrics include Model Quality, System Quality, Business Operational, Adoption, and Business Value metrics.

- A/B testing helps optimize AI performance by comparing different versions of features or models and connecting results to business impact.

- Successful AI measurement requires balancing technical metrics (like model accuracy) with business metrics (like revenue growth) to create a complete performance picture.

Understanding AI Success Metrics

Measuring AI success requires metrics that go beyond traditional KPIs to capture both technical performance and business impact. Good AI metrics connect model behavior directly to dollars saved, hours recovered, or customer satisfaction gained - turning technical jargon into language your CFO actually cares about.

The importance of measuring AI success

Measuring AI success isn't just a nice-to-have; it's the compass that guides your entire AI strategy. Without proper metrics, your fancy AI tools become expensive digital paperweights sitting in your tech stack.

I've seen companies dump thousands into AI systems only to scratch their heads six months later wondering, "Is this thing actually working?" Generative AI demands new KPIs that go beyond traditional business metrics.

These measurements help you track what matters: model accuracy, operational efficiency, user engagement, and bottom-line impact.

If you can't measure your AI's impact, you don't have an AI strategy - you have an expensive tech hobby. - Reuben Smith, WorkflowGuide.com

Tracking AI performance does more than justify your investment to the CFO (though that's a nice bonus). It creates a feedback loop that spots improvement areas and uncovers new opportunities.

Think of AI metrics as your business's fitness tracker - they tell you if your AI is getting stronger or just taking up space. The right KPIs align your AI initiatives with actual business goals, transforming AI from a shiny object into a genuine business asset.

Key points:

- AI metrics provide a comprehensive view of performance.

- They balance technical measurement with real business outcomes.

- Regular tracking uncovers opportunities for improvement.

Many tech leaders get this wrong by focusing on technical metrics while ignoring business outcomes. Don't fall into that trap. Your AI should solve real problems, not create impressive-looking dashboards that nobody uses.

How AI KPIs differ from traditional business KPIs

Traditional KPIs track clear business outcomes like sales growth or customer retention. AI KPIs must go deeper, measuring both technical performance and business impact simultaneously.

Your AI chatbot might handle 1,000 conversations daily (impressive!), but if it frustrates customers or gives wrong answers, that volume means nothing. AI metrics require a dual focus: the model's technical accuracy AND its real-world effectiveness.

Note:

- Tracking only conversation volume is insufficient.

- Both technical accuracy and user satisfaction are essential.

Unlike standard KPIs that remain stable for years, AI metrics need constant tweaking as algorithms learn and improve. You'll track things like false positives, prediction confidence scores, and data drift that never appeared in your traditional dashboard.

Takeaway:

- AI metrics require continuous updating and review.

- New metrics include false positives and prediction confidence scores.

- Data drift is a unique factor in AI performance.
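
To make data drift less abstract, here is a minimal sketch of one common way to put a number on it: the Population Stability Index (PSI), which compares how a feature was distributed at training time with how it looks today. This is an illustration, not the method used in this article; the bin proportions and the function name are made up for the example.

```python
import math


def population_stability_index(expected: list[float], actual: list[float],
                               eps: float = 1e-6) -> float:
    """PSI between two binned distributions, each given as bin proportions."""
    if len(expected) != len(actual):
        raise ValueError("Both distributions need the same number of bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        # Guard against empty bins so the logarithm stays defined.
        e = max(e, eps)
        a = max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


# Hypothetical proportions of requests falling into four input-size buckets.
baseline = [0.25, 0.25, 0.25, 0.25]   # what the model saw at launch
current = [0.10, 0.20, 0.30, 0.40]    # what it sees this month

psi = population_stability_index(baseline, current)
print(f"PSI: {psi:.3f}")
```

A PSI of zero means the two distributions match. A commonly quoted rule of thumb treats values above roughly 0.25 as a significant shift worth investigating, but treat that cutoff as a starting point rather than a law.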

The technical-business translation becomes crucial too. Your dev team celebrates a 2% improvement in model accuracy while your CFO wonders why that matters for the bottom line. This gap demands new frameworks for measuring AI initiatives' effectiveness against concrete business objectives.

Key Insight:

- Ensure alignment between technical metrics and business outcomes.

- Establish a framework that translates model improvements to ROI.

AI KPIs blend leading indicators (how well the system learns) with lagging indicators (business results) to create a complete performance picture. The rapid pace of AI advancement forces continuous review cycles much shorter than traditional quarterly business reviews.

Your metrics must adapt as quickly as your AI systems evolve. Model quality metrics form just one piece of the puzzle in our comprehensive approach to AI evaluation across business functions.

Summary:

- Adapting metrics over time ensures accurate performance tracking.

- Balance between leading and lagging indicators is essential.


Key Categories of AI Metrics by Business Function

AI metrics break down into five distinct buckets that matter across every business function. Each category serves a specific purpose - from tracking how well your models perform to measuring the actual business value they deliver.

Key Categories:

- Model Quality

- System Quality

- Business Operational

- Adoption

- Business Value

Model Quality Metrics

Model quality metrics form the backbone of AI performance evaluation. For tech-savvy business leaders, these metrics reveal if your AI actually works as intended. Traditional metrics like precision, recall, and F1 score help quantify how well your models perform specific tasks.
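
If precision, recall, and F1 feel fuzzy, a small sketch helps. The function below computes all three from raw counts of true positives, false positives, and false negatives. The counts are hypothetical (imagine a ticket classifier flagging urgent requests), and the function name is just for illustration.

```python
def classification_metrics(tp: int, fp: int, fn: int) -> dict[str, float]:
    """Compute precision, recall, and F1 from confusion-matrix counts."""
    # Precision: of everything the model flagged, how much was right?
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: of everything it should have flagged, how much did it catch?
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1: harmonic mean of the two, which punishes a lopsided model.
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts: 80 urgent tickets flagged correctly,
# 20 flagged by mistake, 10 urgent tickets missed.
metrics = classification_metrics(tp=80, fp=20, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```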

But here's where it gets tricky: generative AI requires more subjective evaluation methods since machines now create content humans consume directly.

You'll need both pointwise metrics (scoring outputs on a 0-5 scale) and pairwise metrics (comparing outputs between two models) to get the full picture. Smart business owners track criteria such as coherence, fluency, safety, and instruction following.
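
Here is a rough sketch of how those two styles of scoring could be summarized. It assumes human or automated raters have already produced the ratings; the scores, the "A"/"B"/"tie" labels, and the function names are all hypothetical.

```python
def pointwise_average(scores: list[int]) -> float:
    """Average rating for one model, where each output is scored 0-5."""
    if not scores:
        raise ValueError("Need at least one score")
    if any(s < 0 or s > 5 for s in scores):
        raise ValueError("Pointwise scores must be between 0 and 5")
    return sum(scores) / len(scores)


def pairwise_win_rate(preferences: list[str]) -> float:
    """Share of decisive comparisons won by model A (ties are ignored)."""
    wins_a = preferences.count("A")
    wins_b = preferences.count("B")
    decisive = wins_a + wins_b
    return wins_a / decisive if decisive else 0.0


# Hypothetical coherence ratings for five outputs from one model.
coherence_scores = [4, 5, 3, 4, 4]
# Hypothetical rater preferences when comparing model A against model B.
preferences = ["A", "B", "A", "tie", "A"]

avg_coherence = pointwise_average(coherence_scores)
win_rate_a = pairwise_win_rate(preferences)
print(f"Average coherence: {avg_coherence:.2f} / 5")
print(f"Model A win rate: {win_rate_a:.0%}")
```

Pointwise scores tell you whether a model is good enough on its own; pairwise win rates tell you whether a new version is actually better than the one you already have.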

Think of these metrics as your AI's report card, showing exactly where your models excel or need improvement. Without proper measurement, you're basically flying blind with expensive AI tools that might look fancy but deliver questionable business value.

Key Takeaways:

- Use both objective and subjective metrics for generative AI.

- Pointwise and pairwise evaluations provide comprehensive insights.

System Quality Metrics

System Quality Metrics form the backbone of your AI infrastructure's health report card. These metrics track how well your AI systems perform in real-world conditions, not just in controlled testing environments.

Uptime percentages reveal if your AI is actually available when customers need it, while error rates expose how often your system fails. Response time metrics matter tremendously; a chatbot that takes 10 seconds to answer might as well not exist in today's fast-paced business world.

I've seen local businesses lose customers simply because their AI-powered appointment scheduler lagged by a few seconds.

Load balancing metrics show how efficiently your system handles traffic spikes, which becomes critical during seasonal rushes or promotional periods. Your GPU/TPU utilization rates directly impact your bottom line, as underutilized resources waste money while overutilized ones crash at the worst possible moments.

We tracked model latency for a local HVAC company and discovered their AI quote generator slowed by 300% during peak summer hours, precisely when customers needed quick responses. These technical performance indicators translate directly to customer satisfaction and operational costs.

System Quality Insights:

- Monitor uptime and error rates continuously.

- Track response and latency improvements.

- Consider GPU/TPU utilization and load balancing metrics.
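
As a rough illustration, the sketch below computes three of these system quality numbers: uptime percentage, error rate, and a latency percentile. The figures are invented, and the nearest-rank percentile is one simple choice among several; your monitoring tool may calculate percentiles differently.

```python
import math


def uptime_percent(total_minutes: float, downtime_minutes: float) -> float:
    """Percentage of the period during which the system was available."""
    return 100.0 * (total_minutes - downtime_minutes) / total_minutes


def error_rate(failed_requests: int, total_requests: int) -> float:
    """Fraction of requests that failed."""
    return failed_requests / total_requests if total_requests else 0.0


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: the value at or below which pct% of samples fall."""
    if not values:
        raise ValueError("Need at least one value")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


# Hypothetical month of data for an AI quote generator.
uptime = uptime_percent(total_minutes=30 * 24 * 60, downtime_minutes=43.2)
errors = error_rate(failed_requests=25, total_requests=10_000)
latencies_ms = [120, 135, 150, 180, 210, 250, 400, 950, 1100, 3000]

print(f"Uptime: {uptime:.2f}%")
print(f"Error rate: {errors:.2%}")
print(f"Median latency: {percentile(latencies_ms, 50)} ms")
print(f"p95 latency: {percentile(latencies_ms, 95)} ms")
```

Looking at a high percentile alongside the median matters because averages hide the slow responses that frustrate customers most.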

Next, let's examine how Business Operational Metrics complement these system indicators.

Business Operational Metrics

Business operational metrics track how AI systems affect your day-to-day operations. These numbers tell the real story of AI's impact on your business functions. For customer service teams, metrics like call containment rates show how many issues your AI resolves without human help.

Key Points:

- Track efficiency improvements through call containment rates.

- Measure average handle time reductions.

- Monitor operational efficiency gains for productivity.

Average handle time drops when AI handles simple questions, letting your team tackle complex problems. We've seen companies slash processing times by 60% with document-handling AI systems.
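
A minimal sketch of the arithmetic behind containment rate and average handle time follows. The contact counts and handle times are hypothetical and are not figures from any client mentioned here.

```python
def containment_rate(resolved_by_ai: int, total_contacts: int) -> float:
    """Share of contacts the AI resolved without handing off to a human."""
    return resolved_by_ai / total_contacts if total_contacts else 0.0


def average_handle_time(handle_times_sec: list[float]) -> float:
    """Mean time spent per contact, in seconds."""
    if not handle_times_sec:
        return 0.0
    return sum(handle_times_sec) / len(handle_times_sec)


def percent_change(before: float, after: float) -> float:
    """Relative change from before to after, as a percentage."""
    if before == 0:
        raise ValueError("Baseline must be non-zero")
    return 100.0 * (after - before) / before


# Hypothetical numbers for one support queue.
containment = containment_rate(resolved_by_ai=650, total_contacts=1_000)
aht_before = average_handle_time([420, 380, 400])   # before the AI rollout
aht_after = average_handle_time([300, 320, 340])    # after the AI rollout
aht_change = percent_change(aht_before, aht_after)

print(f"Containment rate: {containment:.0%}")
print(f"Average handle time change: {aht_change:+.1f}%")
```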

The magic happens when you monitor both process capacity and knowledge extensibility, which shows how well your AI learns new information.

Quick Overview:

- Monitor process capacity and knowledge extensibility.

- Assess improvements in automation accuracy and SLA response times.

Your operational efficiency metrics need regular checkups, just like that neglected check engine light we all ignore until something breaks. Tracking automation accuracy helps spot where AI makes mistakes before customers notice.

Service level agreements (SLAs) take on new meaning with AI, as response times often improve dramatically. One client's workflow optimization metrics showed they handled 38% more customer inquiries after implementing AI chatbots, while simultaneously cutting costs.

The next critical area to measure involves how people actually engage with these AI systems.

Adoption Metrics

Tracking how people actually use your AI tools tells the real story of success. Adoption metrics reveal whether your fancy new AI system is a daily driver or just digital shelf decoration.

The basic adoption rate shows what percentage of your team actively uses the AI, while frequency metrics track if they're hitting it daily, weekly, or monthly. Users vote with their time, so watch those session lengths and queries per session closely.

I've seen companies brag about AI rollouts where the stats showed only 15% of staff used the tool more than once! Smart teams also track query complexity (are users asking basic or advanced questions?) and collect direct feedback through thumbs up/down systems.

Adoption Metrics Highlights:

- Measure active usage and frequency.

- Analyze session lengths and query complexity.

- Collect user feedback for continuous improvement.
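
The adoption numbers above can be pulled from a simple usage log. The sketch below assumes a hypothetical log with one user ID per AI session; the names, team size, and function are made up for illustration.

```python
from collections import Counter


def adoption_summary(sessions: list[str], team_size: int) -> dict[str, float]:
    """Summarize adoption from a log containing one user ID per AI session."""
    if team_size <= 0:
        raise ValueError("team_size must be positive")
    sessions_per_user = Counter(sessions)
    active_users = len(sessions_per_user)
    # Users who came back at least once, not just tried the tool.
    repeat_users = sum(1 for count in sessions_per_user.values() if count > 1)
    return {
        "adoption_rate": active_users / team_size,
        "repeat_user_rate": repeat_users / team_size,
        "sessions_per_active_user": (
            len(sessions) / active_users if active_users else 0.0
        ),
    }


# Hypothetical week of sessions for a ten-person team.
sessions = ["ana", "ana", "ben", "ana", "cy", "ben"]
summary = adoption_summary(sessions, team_size=10)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

Separating the repeat-user rate from the basic adoption rate is what exposes the "tried it once and never came back" pattern.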

Business Value Metrics

Business value metrics translate AI's technical wizardry into dollars and cents that matter to your bottom line. These metrics focus on hard numbers like the 15% yearly revenue growth we achieved at IMS Heating & Air through smart AI implementation.

They track productivity improvements in handling times and document processing that directly affect your profit margins. Unlike technical metrics that make engineers happy, business value metrics make CFOs smile.

Money talks, and these metrics speak its language fluently. Cost savings from efficiency gains often appear when AI replaces legacy systems. At LocalNerds.co, we track how AI impacts customer satisfaction scores and their connection to revenue growth.

Smart companies also measure AI's contribution to new product development and innovation cycles. The best part? These metrics work across industries, whether you're processing HVAC service tickets or managing supply chain logistics.

They transform abstract AI benefits into concrete ROI figures that justify every dollar spent on your AI initiatives.

Business Value Key Points:

- Direct relationship between performance metrics and ROI.

- Track improvements in revenue, cost savings, and customer satisfaction.

- Measure contributions in product development and innovation.
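
For readers who want the arithmetic, here is a simple sketch of a basic ROI calculation. It uses the standard (benefit minus cost) divided by cost formula; the dollar amounts are hypothetical, and a real analysis would also need to decide which benefits can fairly be attributed to the AI.

```python
def ai_roi(total_benefit: float, total_cost: float) -> float:
    """Return on investment as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


# Hypothetical first-year figures for one AI project.
cost_savings = 60_000        # efficiency gains, e.g. fewer manual hours
added_revenue = 40_000       # revenue attributed to the AI feature
total_cost = 80_000          # licences, integration, and training

roi = ai_roi(cost_savings + added_revenue, total_cost)
print(f"First-year ROI: {roi:.0%}")
```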

Solutions for Measuring AI Performance

Tracking AI performance requires more than just a gut feeling or basic stats. You need specialized tools and methods that capture both the technical prowess and business impact of your AI systems.

Solution Highlights:

- Real-time dashboards provide continuous monitoring.

- Tools and methods should align technical data with business outcomes.

Dashboards showing real-time metrics can transform how you spot problems and make quick fixes before small issues grow into major headaches.


Leveraging tools to track AI KPIs

Tools like Datadog, Dynatrace, New Relic, IBM Watson, and Anodot have transformed how businesses monitor AI performance. These platforms offer real-time dashboards that track crucial metrics across model accuracy, operational efficiency, user engagement, and financial impact.

Companies often struggle when they try to track AI performance manually in spreadsheets. The right monitoring tool functions as a performance tracker for your AI, indicating where your models excel and where they need improvement.

Smart deployment tracking helps tech leaders measure both the quantity of models deployed and their implementation speed. This visibility connects directly to business outcomes through operational KPIs.

Choosing AI metrics without proper tools can be challenging. The good news? You don't need to be a data scientist to understand these platforms. Most offer user-friendly interfaces that translate complex performance data into actionable insights.

Next, we'll explore how combining quantitative and qualitative evaluation methods creates a more complete picture of AI performance.

Combining quantitative and qualitative evaluation methods

Numbers tell half the story, but human insights complete the picture. Smart AI evaluation requires both quantitative metrics (the cold, hard data) and qualitative feedback (the messy human stuff).

I've seen too many businesses obsess over accuracy scores while ignoring whether users actually like the system. Quantitative measurements provide that objective foundation for systematic analysis, tracking things like error rates, processing times, and conversion improvements.

But qualitative techniques like user interviews and focus groups reveal the "why" behind those numbers.

My work with local HVAC companies taught me this lesson the hard way. Our chatbot had an impressive 92% accuracy rate, but customers hated using it. Only by combining both evaluation approaches did we uncover the disconnect.

The bot answered correctly but sounded robotic and unfriendly. This dual methodology validates trends while uncovering hidden impacts that pure data analysis misses. For tech-savvy leaders, this comprehensive assessment approach delivers the full story of your AI's performance, not just the chapter with pretty graphs.

Implementing dashboards for real-time tracking

Moving from combining measurement methods to practical implementation, dashboards serve as the command center for your AI operations. Real-time tracking dashboards transform complex AI performance data into visual stories anyone can understand.

These digital displays show your key metrics like efficiency scores, business impact measurements, and compliance indicators all in one place. No more searching through spreadsheets or waiting for monthly reports!

Dashboards are most effective when they include automated alert systems that notify stakeholders immediately when performance falls below acceptable thresholds. For example, if your AI chatbot suddenly drops below 85% accuracy, your dashboard sends an instant notification to your team.

This quick response capability allows you to address minor issues before they escalate into costly problems. Many tech leaders report that visualization tools reduce their reaction time to AI issues by half, transforming what used to be weekly audits into continuous improvement cycles.

The most effective dashboards don't just display attractive charts; they link technical metrics directly to dollars saved or earned.
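
The alerting idea can be sketched in a few lines. This is a simplified illustration, not how any particular dashboard product works: the metric names are hypothetical, the 85% accuracy floor comes from the example above, and the latency ceiling is an assumed value. A real setup would send the messages to email, chat, or a paging tool instead of printing them.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Threshold:
    """Acceptable range for one metric; leave a bound as None to skip it."""
    metric: str
    minimum: Optional[float] = None
    maximum: Optional[float] = None


def check_thresholds(readings: dict[str, float],
                     thresholds: list[Threshold]) -> list[str]:
    """Return one alert message for every reading outside its allowed range."""
    alerts = []
    for t in thresholds:
        value = readings.get(t.metric)
        if value is None:
            continue  # no reading for this metric yet
        if t.minimum is not None and value < t.minimum:
            alerts.append(f"{t.metric} = {value} is below the minimum of {t.minimum}")
        if t.maximum is not None and value > t.maximum:
            alerts.append(f"{t.metric} = {value} is above the maximum of {t.maximum}")
    return alerts


thresholds = [
    Threshold("chatbot_accuracy", minimum=0.85),
    Threshold("p95_latency_ms", maximum=2000),   # assumed ceiling
]
readings = {"chatbot_accuracy": 0.82, "p95_latency_ms": 900}

alerts = check_thresholds(readings, thresholds)
for message in alerts:
    print("ALERT:", message)
```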

Conducting A/B testing for performance optimization

A/B testing serves as your AI system's proving ground. This method compares two versions of features, prompts, or models to see which performs better. I've run hundreds of these tests, and trust me, the results often surprise even the most seasoned tech leaders.

The beauty lies in its simplicity: you make one change, test it against your current version, and let the data speak. Azure AI, Statsig, Split.io, and LaunchDarkly offer strong platforms to run these experiments without coding expertise.

The real magic happens when you connect test results to business metrics. Your A/B test might show a 3% improvement in model accuracy, but what does that mean for your bottom line? The best tests evaluate AI applications through three lenses: business impact (revenue, customer satisfaction), risk factors (potential errors), and implementation costs.

This approach helps you optimize user experience while reducing bias in your AI systems. Statistical significance matters too, so don't pull the plug on tests too early.
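
To show what checking statistical significance can look like, here is a minimal sketch of a two-proportion z-test, one standard way to compare two conversion or resolution rates. The platforms named above run their own statistics, which may differ from this; the visitor and conversion counts are hypothetical.

```python
import math


def two_proportion_z_test(successes_a: int, total_a: int,
                          successes_b: int, total_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two rates."""
    rate_a = successes_a / total_a
    rate_b = successes_b / total_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (successes_a + successes_b) / (total_a + total_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if std_error == 0:
        raise ValueError("Cannot test when the pooled rate is 0 or 1")
    z = (rate_b - rate_a) / std_error
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical test: current prompt (A) versus a revised prompt (B).
z, p_value = two_proportion_z_test(successes_a=200, total_a=2_000,
                                   successes_b=240, total_b=2_000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
if p_value < 0.05:
    print("The difference is unlikely to be noise at the 5% level.")
else:
    print("Not enough evidence yet; keep the test running.")
```

Decide the sample size and significance level before the test starts. Checking the p-value repeatedly and stopping the moment it dips below the cutoff inflates false positives.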

Conclusion

Tracking AI success metrics transforms how businesses measure performance across functions. Smart companies now balance technical KPIs like model accuracy with business metrics such as operational efficiency and ROI.

Your AI measurement strategy should adapt as technology evolves, similar to upgrading from a flip phone to the latest smartphone. Different departments require specific metrics, from marketing's focus on engagement to operations' emphasis on throughput and error rates.

Implementing dashboards for real-time monitoring creates accountability while A/B testing reveals what actually works versus what just looks good on paper. The best AI metrics connect directly to business outcomes, turning abstract algorithms into concrete value that even the most tech-resistant executives can appreciate.

Start small, measure consistently, and watch your AI investments deliver measurable results that make everyone look like a genius.

FAQs

1. What are the key AI success metrics for marketing departments?

Marketing teams should track conversion rates, customer engagement, and ROI from AI implementations. These numbers tell you if your fancy AI tools actually bring in more business. Think of these metrics as your marketing report card.

2. How can HR departments measure AI success?

HR can measure time saved in recruiting processes, quality of candidate matches, and employee satisfaction with AI tools. When your hiring team spends less time sorting resumes and more time talking to top candidates, that's a win.

3. What metrics should operations teams focus on when implementing AI?

Operations should track cost reduction, process efficiency gains, and error rate improvements. AI shines when it cuts the fat from your processes and catches mistakes humans miss.

4. How often should companies review their AI success metrics?

Companies should review AI metrics quarterly at minimum. Some fast-moving businesses check monthly to catch problems early. The goal isn't just collecting data but using it to make your AI systems better over time.

Disclosure: This content is informational and is not a substitute for professional advice. It reflects methodologies and practices endorsed by WorkflowGuide.com, a consulting firm specializing in AI implementation through practical, business-first strategies.

