AI Risk Management Framework Implementation

Understanding AI Integration

Implementing an AI risk management framework means taking a structured approach to identifying, assessing, and controlling risks from artificial intelligence systems. NIST released its AI Risk Management Framework (AI RMF 1.0) on January 26, 2023, creating a foundation for safe AI adoption.

WorkflowGuide.com takes a practical approach to AI implementation. The firm helps AI-curious organizations become AI-confident leaders through clear risk assessment, ethical AI, AI governance, compliance, and mitigation strategies. This approach supports the full AI lifecycle and data privacy while keeping advice clear and accessible.

This framework addresses critical concerns like bias, security threats, and ethical issues that plague many AI implementations. Most companies recognize these dangers, yet only 9% feel ready to handle them.

The gap between awareness and action creates major business vulnerabilities. Proper risk management isn't just about avoiding problems; it saves real money. Companies with strong compliance protocols save an average of $3.05 million per data breach.

The EU has taken notice too, with its AI Act imposing penalties of up to 7% of global annual turnover for the most serious violations. This makes AI risk management both a safety and financial priority. Organizations need practical tools and approaches to manage this complex landscape without getting lost in technical jargon or regulatory mazes.

Leaders like IBM and Google have created their own frameworks, showing the path forward. This article breaks down how your business can implement AI risk management that works. Let's get started.

Key Takeaways

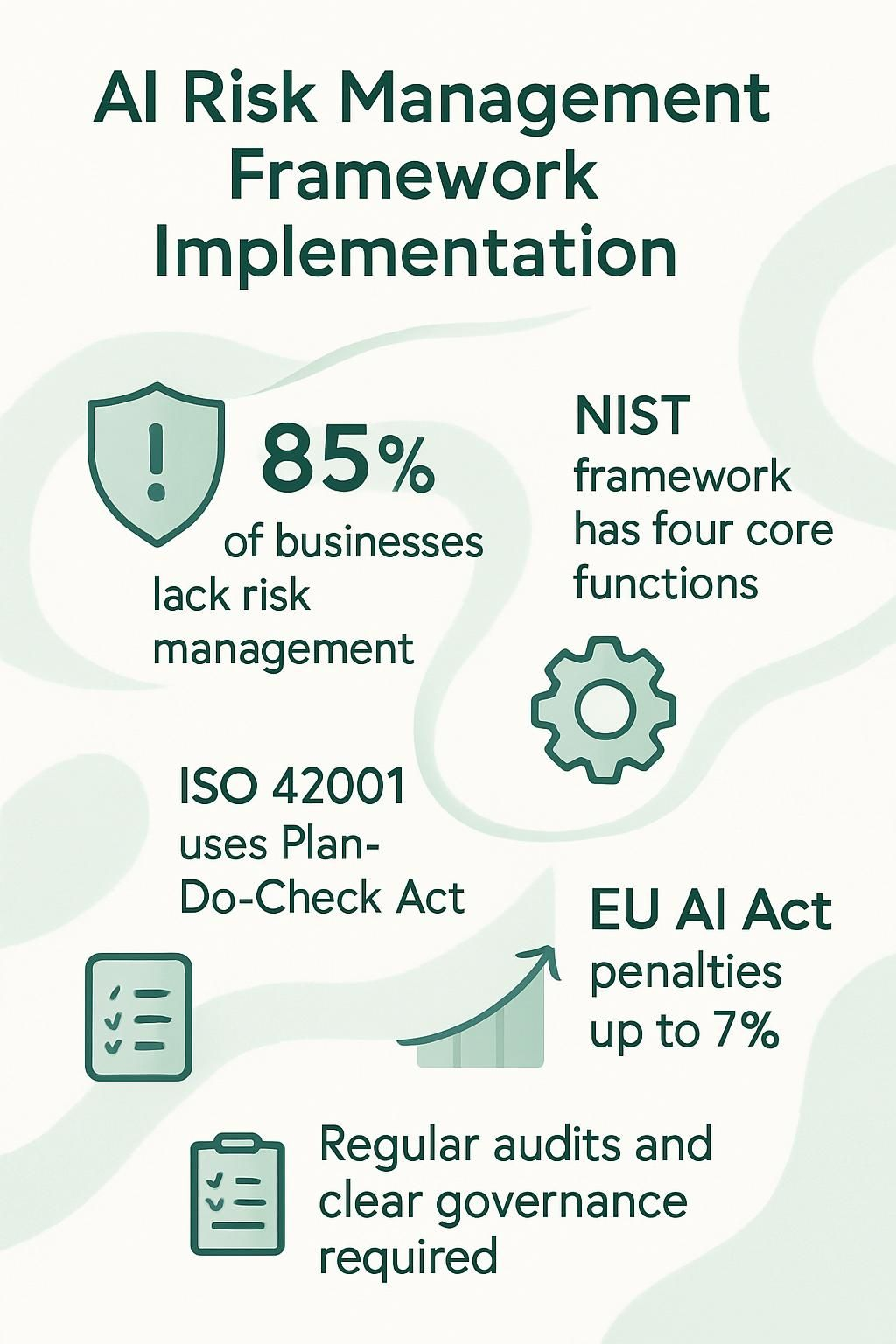

- Nearly 85% of businesses using AI lack proper risk management protocols, leaving them vulnerable to costly mistakes and damaged reputations.

- The NIST AI Risk Management Framework, launched on January 26, 2023, provides a structured approach through four core functions: Govern, Map, Measure, and Manage.

- The EU AI Act, politically agreed in December 2023 and in force since August 1, 2024, categorizes AI applications by risk level and can impose penalties of up to 7% of global annual turnover for the most serious violations.

- ISO 42001, released in December 2023, is the first international standard for AI Management Systems and uses a Plan-Do-Check-Act methodology.

- Effective AI risk management requires regular bias audits, clear governance roles, transparent decision-making processes, and comprehensive documentation standards.

Understanding the Need for AI Risk Management

AI systems fail in weird ways that traditional risk management can't handle. Your business needs specific AI risk controls to prevent costly mistakes, reputation damage, and potential legal issues.

Key Points:

- AI risk controls must be specific to the AI lifecycle.

- Failing to manage AI risk can lead to costly setbacks and legal issues.

- Clear protocols for bias audits, privacy, and security are needed.

Key risks associated with AI systems

AI technologies offer amazing benefits, but they also come with significant risks that smart business leaders need to understand. Let's break down these dangers so you can protect your organization while still leveraging AI's power.

- Algorithmic Bias creates unfair outcomes when AI systems reflect human prejudices in their training data, potentially harming customers and damaging your reputation.

- Privacy Violations occur when AI systems collect, process, or share personal data without proper consent or safeguards, putting your business at legal risk.

- Security Vulnerabilities exist in AI systems that hackers can exploit to steal data or manipulate outputs, creating major business liabilities.

- Lack of Transparency makes it hard to understand how AI reaches decisions, creating "black box" problems that undermine trust and accountability.

- Error Propagation happens when small mistakes in AI systems multiply across operations, causing widespread negative impacts that grow over time.

- Civil Rights Impacts arise when AI systems make decisions affecting access to housing, employment, or financial services without proper oversight.

- Unintended Consequences develop when AI systems interact with the real world in ways developers never anticipated, creating new problems.

- Data Quality Issues lead to faulty AI outputs when training data contains errors, gaps, or outdated information.

- Regulatory Non-Compliance risks grow as governments worldwide create new AI laws that businesses must follow or face penalties.

- Accountability Gaps form when responsibility for AI decisions becomes unclear between developers, users, and the system itself.

- Explainability Challenges make it difficult to justify AI decisions to customers, regulators, or even your own team.

- Ethical Dilemmas emerge when AI systems face complex situations involving competing values or priorities with no clear right answer.

Key Risk Summary:

- Algorithmic bias and privacy violations must be addressed.

- Security vulnerabilities and error propagation pose serious risks.

- Legal non-compliance and accountability gaps need clear action.

Importance of trust, transparency, and accountability

Trust forms the backbone of successful AI implementation. Without it, your fancy algorithms might as well be expensive paperweights gathering dust in your tech stack. Business leaders need to recognize that transparency acts as the bridge between complex AI systems and human understanding.

The National Institute of Standards and Technology (NIST) highlights four critical principles of Explainable AI: explanation, meaningfulness, explanation accuracy, and knowledge limits. These aren't just theoretical concepts but practical guardrails that help your team and customers feel confident about AI-driven decisions.

Trust isn't a nice-to-have in AI; it's the difference between adoption and abandonment.

Accountability creates the framework where AI systems can thrive without causing harm. I have seen too many companies roll out AI tools without clear ownership of outcomes, which is like giving a teenager keys to your Ferrari without proper driving lessons.

Explainable AI (XAI) plays a crucial role here by making black-box decision-making transparent. This transparency doesn't just satisfy regulatory requirements; it builds genuine trust with users and stakeholders.

My clients who prioritize these elements consistently see higher adoption rates and fewer implementation headaches. The lack of transparency often leads to distrust, creating resistance that no amount of cool tech features can overcome.

Trust and Transparency Key Points:

- Clear documentation and Explainable AI build user trust.

- Transparency bridges the gap between complex systems and human understanding.

- Accountability measures prevent issues in AI decision-making.


Overview of AI Risk Management Frameworks

Several major frameworks exist to help organizations manage AI risks effectively. The NIST AI RMF, EU AI Act, and ISO 42001 standards each offer different approaches to tackle the growing challenges of responsible AI deployment.

NIST AI Risk Management Framework (AI RMF)

The NIST AI Risk Management Framework, launched on January 26, 2023, offers a structured approach to handling AI risks through four core functions: Govern, Map, Measure, and Manage.

Think of it as your AI safety playbook, created after extensive public input and multiple workshops. Unlike that one friend who insists on giving unsolicited advice, this framework remains voluntary and flexible, adapting to your specific business needs rather than forcing a one-size-fits-all solution.

For tech leaders feeling like they are trying to tame a digital wild west, the AI RMF provides practical guardrails without stifling innovation. The framework tackles thorny issues like AI bias head-on while promoting trustworthy and responsible AI implementation.

NIST didn't stop at just the framework either; it has created supporting documents including the AI RMF Playbook, Roadmap, and Crosswalk to help organizations manage implementation.

These resources translate complex governance concepts into actionable steps that both enterprise leaders and local business owners can apply to their AI strategies.

Framework Summary:

- NIST's AI RMF focuses on Govern, Map, Measure, and Manage.

- The EU AI Act imposes regulatory standards and penalties.

- ISO 42001 offers a global approach through the Plan-Do-Check-Act methodology.

European Union AI Act

While the NIST framework offers voluntary guidance that originated in the US, the European Union has gone further and written AI risk management into law. EU lawmakers reached political agreement on the AI Act in December 2023, and it entered into force on August 1, 2024, with obligations phasing in over the following years. This landmark legislation creates a comprehensive regulatory system that categorizes AI applications based on risk levels.

The Act takes a no-nonsense stance on high-risk AI systems, imposing strict rules on them. Its penalties can reach up to 7% of global annual turnover for the most serious violations, such as deploying a prohibited practice.

The EU AI Act draws clear boundaries around what is acceptable in AI development. It outright bans certain applications, such as social scoring of citizens, that could threaten fundamental rights.

For tech leaders and business owners operating in or selling to European markets, this means that continuous monitoring of high-risk systems after deployment becomes mandatory, not optional. Think of it as installing smoke detectors throughout your AI infrastructure to alert you before issues become costly fires.

The EU AI Act isn't just another regulatory hoop to jump through - it's the new playbook for responsible AI development in Europe, with penalties steep enough to make even the biggest tech giants pay attention.

AI systems under this framework require regular check-ups and documentation. The Act demands transparency about how your AI makes decisions, especially for systems that might affect people's lives or rights.

For local business owners using AI tools, this means asking vendors tough questions about compliance and risk management. The days of plugging in AI solutions without understanding what lies beneath are over in Europe, and smart US businesses are already preparing for similar rules at home.

ISO 42001 Standards

While the EU AI Act focuses on regional compliance, ISO 42001 offers a global standard for AI governance. Released in December 2023, ISO/IEC 42001:2023 stands as the first international standard specifically designed for Artificial Intelligence Management Systems (AIMS).

This groundbreaking framework guides organizations through establishing, implementing, and improving their AI systems using the practical Plan-Do-Check-Act methodology. I have seen companies struggle with AI governance, often creating policies that look good on paper but fall apart in practice.

This standard fixes that problem.

The beauty of ISO 42001 lies in its versatility. Organizations of all sizes across various sectors can apply these standards, from small local businesses to large corporations, including public and non-profit entities.

The framework actively supports several Sustainable Development Goals like gender equality and economic growth. ISO also provides complementary standards such as ISO/IEC 22989 and ISO/IEC 23053 to create a more comprehensive approach.

For tech leaders looking to implement responsible AI practices, this standard offers a structured path forward without reinventing the wheel.

Key Steps in Implementing an AI Risk Management Framework

Implementing AI risk management is a crucial aspect of modern business strategy in our technology-driven environment. It starts with a systematic approach to identify and address potential risks throughout your AI systems' lifecycle. You need clear steps for assessing vulnerabilities, establishing governance protocols, and creating transparency mechanisms that work for your specific business context.

NIST: National Institute of Standards and Technology

RMF: Risk Management Framework

ISO: International Organization for Standardization

Identifying and assessing AI risks

AI systems bring amazing benefits but also pack some serious risks that need careful handling. Let's break down how to spot and size up these risks before they turn your AI implementation into the digital equivalent of a dumpster fire.

- Map your AI system's full lifecycle from data collection to deployment and ongoing operations.

- Create a risk register that tracks both technical risks (like data quality issues) and business risks (such as reputation damage).

- Score each risk based on likelihood and impact using a simple 1-5 scale for each factor.

- Conduct regular bias audits to check if your AI makes fair decisions across different groups.

- Test your AI with adversarial examples to find weak spots hackers might exploit.

- Document all third-party components and assess their security protocols.

- Perform privacy impact assessments to protect user data and meet regulations.

- Run stress tests to see how your AI handles unusual inputs or high volumes.

- Set up continuous monitoring tools that alert you to performance drops or odd behaviors.

- Establish clear thresholds for when human review becomes mandatory.

- Gather feedback from diverse stakeholders to catch blind spots in your risk assessment.

- Compare your AI risks against the NIST AI RMF (released January 26, 2023) and its Generative AI Profile (NIST AI 600-1, released July 26, 2024).

- Prioritize your resources based on risk assessments as recommended by NIST.

- Check if your system falls under specific regulations like the EU AI Act.

- Plan for graceful failure modes so your AI fails safely when things go wrong.

- Track your system's decisions to build an audit trail for compliance purposes.

- Assess how well users can understand and challenge AI decisions.

- Review how your AI aligns with your company values and social norms.

- Calculate the cost of mitigation for each risk to help with budget planning.

- Schedule regular risk reassessments as your AI learns and evolves over time.
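
The risk register and 1-5 scoring steps above can be sketched in a few lines of Python. This is a minimal illustration, not a NIST-prescribed method: the `Risk` class, the example entries, and the review threshold of 15 are all assumptions made for the sketch.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    category: str      # "technical" or "business"
    likelihood: int    # 1 (rare) to 5 (almost certain)
    impact: int        # 1 (negligible) to 5 (severe)

    def __post_init__(self) -> None:
        # Reject scores outside the 1-5 scale described in the list above.
        for field_name in ("likelihood", "impact"):
            value = getattr(self, field_name)
            if not 1 <= value <= 5:
                raise ValueError(f"{field_name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        # Likelihood times impact gives a score from 1 to 25.
        return self.likelihood * self.impact


def needs_human_review(risk: Risk, threshold: int = 15) -> bool:
    # The threshold of 15 is an illustrative assumption, not a NIST value.
    return risk.score >= threshold


def prioritize(register: list[Risk]) -> list[Risk]:
    # Highest score first; ties keep their original order (sorted is stable).
    return sorted(register, key=lambda r: r.score, reverse=True)


# Hypothetical register entries.
register = [
    Risk("Training data contains stale records", "technical", 4, 3),
    Risk("Biased outcome damages reputation", "business", 3, 5),
    Risk("Third-party model API outage", "technical", 2, 2),
]

for risk in prioritize(register):
    flag = "REVIEW" if needs_human_review(risk) else "monitor"
    print(f"{risk.score:>2}  {flag:<7}  {risk.name}")
```

Multiplying likelihood by impact is only one common convention. Some teams prefer a lookup matrix so that a rare but catastrophic risk is never ranked below a frequent nuisance.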

Risk Assessment Summary:

- Map the entire AI lifecycle and maintain a risk register.

- Regular bias audits and privacy impact assessments are essential.

- Continuous monitoring and stress tests help track performance.

Establishing governance and oversight mechanisms

Governance structures form the backbone of any solid AI risk management strategy. Think of them as the control tower at an airport, making sure all those fancy AI planes do not crash into each other or veer off course.

- Define clear roles and responsibilities for AI oversight across your organization. Just like a well-balanced team needs players with specific skills, your company needs designated individuals who understand both the technical and ethical aspects of AI systems.

- Create an "AI Risk Task Force" with representatives from IT, legal, operations, and customer-facing departments. This cross-functional squad should meet quarterly to review your AI programs and tackle risks before they become problems, much like routine maintenance on your favorite gaming rig.

- Develop accountability mechanisms that trace decisions back to specific individuals or teams. Without accountability, risk management is like playing a game without save points.

- Document governance protocols in plain language that both your tech team and business stakeholders can understand. No one wants to read a 200-page manual full of corporate jargon.

- Establish regular review cycles for all AI systems based on their risk level and business impact. High-risk systems might need monthly check-ups while lower-risk tools can be reviewed quarterly.

- Implement stakeholder engagement processes that bring diverse perspectives into your risk assessment. Teams with varied viewpoints catch issues that a homogenous group might miss.

- Set up escalation paths for AI-related incidents so everyone knows what to do if something goes wrong. This stops the "I thought you were handling it" problem.

- Create oversight committees with the authority to pause or modify AI deployments that show signs of risk. Someone needs the power to hit the emergency brake when needed.

- Build compliance checkpoints into your AI development lifecycle rather than treating them as afterthoughts. Compliance works best when it is a constant partner.

- Track and measure the performance of your governance structures. A system that never evolves is as unhelpful as a low-level character facing a final boss.
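
To make the review-cycle idea above concrete, here is a small sketch that flags systems whose review is overdue. The monthly and quarterly cadences come from the list above; the "medium" tier, the exact day counts, and the system names are assumptions for illustration.

```python
from datetime import date, timedelta

# Review intervals in days per risk tier. The "medium" tier and the exact
# day counts are assumptions for this sketch.
REVIEW_INTERVAL_DAYS = {"high": 30, "medium": 60, "low": 90}


def next_review(last_review: date, risk_tier: str) -> date:
    """Return the date by which the next review is due."""
    try:
        interval = REVIEW_INTERVAL_DAYS[risk_tier]
    except KeyError:
        raise ValueError(f"unknown risk tier: {risk_tier!r}") from None
    return last_review + timedelta(days=interval)


def overdue(systems: dict[str, tuple[str, date]], today: date) -> list[str]:
    """systems maps a system name to (risk tier, date of last review)."""
    return sorted(
        name
        for name, (tier, last) in systems.items()
        if next_review(last, tier) < today
    )


# Hypothetical inventory of AI systems.
systems = {
    "resume-screener": ("high", date(2024, 1, 10)),
    "email-autocomplete": ("low", date(2024, 1, 10)),
}
print(overdue(systems, today=date(2024, 3, 1)))
```

A list like this could feed the agenda for the quarterly task force meeting, so overdue high-risk systems are discussed first.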

Governance Key Points:

- Define clear roles and build cross-functional teams.

- Accountability and regular review cycles are critical.

- Document governance protocols in simple terms.

Ensuring transparency and explainability

Transparency and explainability form the backbone of trustworthy AI systems in today's business landscape. Let us explain how you can build these crucial elements into your AI risk management framework.

- Make AI decision-making visible to users through clear documentation that explains how your systems reach conclusions.

- Implement Explainable AI (XAI) techniques that allow both technical and non-technical stakeholders to understand why specific decisions were made.

- Create plain-language explanations of complex algorithms for customers and employees who interact with your AI systems.

- Develop dashboards that visualize AI decision factors, like those used in credit scoring or hiring applications.

- Conduct regular algorithmic audits to verify that your AI systems operate as intended.

- Train your team to explain AI outputs in simple terms, keeping technical jargon to a minimum.

- Document all model training data sources, cleaning procedures, and potential biases to maintain a complete audit trail.

- Use interpretability tools like LIME or SHAP to show which features most influenced a particular AI decision.

- Establish clear processes for humans to review and override AI decisions when needed.

- Design AI systems with built-in explanation capabilities rather than adding them as an afterthought.

- Maintain version control for all AI models to track changes in decision-making over time.

- Create user-friendly interfaces that display confidence levels for AI predictions and recommendations.

- Set up feedback mechanisms that allow users to question or challenge AI decisions they find confusing or unfair.

- Develop a transparency rating system for your AI applications based on how easily their decisions can be explained.

- Adopt industry standards for AI documentation like Model Cards or Datasheets for Datasets.
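
The list above names LIME and SHAP; the sketch below calls neither library. It shows the underlying idea in the simplest possible case, a linear scoring model, where each feature's contribution is its weight times how far the input sits from a baseline. The weights, baseline values, and applicant record are hypothetical.

```python
def explain_linear(weights, baseline, instance):
    """Return (feature, contribution) pairs, largest absolute effect first."""
    contributions = {
        name: weights[name] * (instance[name] - baseline[name])
        for name in weights
    }
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


# Hypothetical credit-scoring weights and population averages.
weights = {"income_k": 0.5, "late_payments": -8.0, "account_age_years": 1.5}
baseline = {"income_k": 60.0, "late_payments": 1.0, "account_age_years": 6.0}
applicant = {"income_k": 50.0, "late_payments": 3.0, "account_age_years": 10.0}

for feature, effect in explain_linear(weights, baseline, applicant):
    direction = "raised" if effect > 0 else "lowered"
    print(f"{feature} {direction} the score by {abs(effect):.1f} points")
```

Real models are rarely this simple, which is why dedicated interpretability tools exist. The output format, though, is the kind of plain-language statement a dashboard or customer notice can reuse.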

Transparency Summary:

- Clear documentation and plain language explanations are vital.

- Dashboards and interpretability tools aid understanding.

- Continuous audits support ongoing transparency.

Addressing fairness and mitigating bias

While transparency helps users understand how AI systems work, fairness ensures these systems treat everyone equally. Addressing bias in AI systems requires both technical solutions and a firm commitment to ethical principles.

- Conduct regular bias audits of your AI systems to spot unfair patterns before they impact users. NIST AI RMF guidelines recommend these audits as a cornerstone of responsible AI management.

- Implement strong data governance practices to track where your training data comes from and who it represents.

- Use diverse training datasets that include varied demographics, cultures, and perspectives to build fairer AI systems.

- Apply fairness metrics during testing to measure how your AI treats different groups. Tools like IBM's AI Fairness 360 can help quantify bias.

- Create cross-functional review teams with members from varied backgrounds to catch bias that might be missed by a uniform team.

- Document all fairness decisions and trade-offs made during development for accountability and compliance purposes.

- Build feedback loops with users to spot real-world bias issues that tests might miss.

- Adopt a proactive approach to bias mitigation. Fixing bias after deployment costs more in money and reputation.

- Train your teams on ethical AI concepts so they recognize signs of bias in the systems.

- Balance fairness with other system requirements like accuracy and performance.

- Consider using explainable AI tools that help understand why your system makes specific decisions.

- Set clear fairness goals and metrics before development starts so teams know what success looks like.
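
Two of the most common fairness metrics are easy to compute by hand, which helps when you want to understand what a toolkit is reporting. The sketch below does not use IBM's AI Fairness 360; it is a plain-Python illustration with made-up group labels and decisions.

```python
from collections import defaultdict


def selection_rates(groups, decisions):
    """Share of positive decisions (1) per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(rates):
    # Gap between the most- and least-favored group; 0 means equal rates.
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates):
    # Lowest rate divided by highest rate; 1.0 means equal rates.
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Hypothetical audit sample: group label and model decision (1 = approved).
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
decisions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, decisions)
print(rates)
print(demographic_parity_difference(rates))
print(disparate_impact_ratio(rates))
```

A disparate impact ratio below 0.8 is a widely used rule-of-thumb warning sign, often called the four-fifths rule. Which metric and threshold fit your system is a judgment call that belongs in your documented fairness goals.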

Fairness and Bias Mitigation Summary:

- Implement regular bias audits and strong data governance.

- Use diverse training datasets and fairness metrics.

- Involve cross-functional teams to identify and correct bias.

Ensuring Compliance and Documentation in AI Risk Management

Documentation serves as your AI system's paper trail when regulators come knocking. Your compliance strategy needs proper record-keeping and regular audits to spot risks before they turn into major issues.

Implementing AI audit trail documentation standards

AI audit trails work like your business's digital record. They track every decision your AI system makes, creating a clear path for anyone to follow later. The NIST AI RMF Playbook offers suggested actions and documentation guidance you can adapt as templates, which saves you from building a system from scratch.

I have seen companies spend months creating documentation when these templates could do the job in days. Your audit trail should record all risks, impacts, and testing practices in clear language for both technical and non-technical team members.

Clear policies form the backbone of effective AI documentation standards. Set up continuous monitoring systems that flag odd AI behaviors before they grow into problems. Regular feedback from diverse stakeholders helps spot blind spots in your risk management process.
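
One way to make an audit trail trustworthy is to chain each record to the one before it, so that later edits are detectable. The sketch below is an assumption-heavy illustration, not a format defined by NIST: the field names, the model name, and the SHA-256 hash chain are all choices made for this example.

```python
import hashlib
import json


def append_entry(trail, event):
    """Append an event dict to the trail, linking it to the previous entry."""
    prev_hash = trail[-1]["hash"] if trail else "0" * 64
    payload = json.dumps(event, sort_keys=True)
    entry_hash = hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()
    trail.append({"event": event, "prev_hash": prev_hash, "hash": entry_hash})


def verify(trail):
    """Return True if the chain is intact (no entry edited or reordered)."""
    prev_hash = "0" * 64
    for entry in trail:
        payload = json.dumps(entry["event"], sort_keys=True)
        expected = hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()
        if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical decision records.
trail = []
append_entry(trail, {"model": "loan-scorer", "version": "1.4.0",
                     "input_id": "app-001", "decision": "refer_to_human",
                     "confidence": 0.58, "timestamp": "2024-03-01T09:15:00Z"})
append_entry(trail, {"model": "loan-scorer", "version": "1.4.0",
                     "input_id": "app-002", "decision": "approve",
                     "confidence": 0.93, "timestamp": "2024-03-01T09:16:10Z"})

print(verify(trail))
trail[0]["event"]["decision"] = "approve"   # simulate tampering
print(verify(trail))
```

This check catches edits and reordering but not entries trimmed from the end of the trail, so in practice the latest hash would also be stored somewhere the AI system cannot write to.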

Compliance Summary:

- Documentation and continuous monitoring protect against violations.

- Use established templates and risk registers for audit trails.

- Clear policies support readiness for regulatory reviews.

Solutions for Common Challenges in AI Risk Management

Tackling AI risk management challenges requires both technical fixes and organizational buy-in. This means creating cross-functional teams that break down silos and using standardized assessment tools that work across various AI applications.

Grab your pocket protector. The sections below work through the technical, organizational, and regulatory hurdles in turn, followed by practical tools that make AI risk management less like defusing a bomb while blindfolded and more like upgrading your favorite RPG character with the right equipment.

Challenges Summary:

- Technical challenges need embedded risk checks in agile workflows.

- Organizational barriers are solved with cross-functional teams.

- Regulatory compliance calls for proactive mapping and clear documentation.

Overcoming technical challenges

Technical hurdles in AI risk management can feel like trying to hit a moving target while blindfolded. The stats do not lie: 93% of organizations see risks in generative AI, yet only 9% actually feel ready to handle them.

I have seen many tech leaders struggle with measurement problems and keeping pace with rapid AI changes. NIST's ARIA program is developing evaluation methods to address issues like hallucination and bias detection.

Getting practical means embedding risk checks into your development workflow. Many clients of WorkflowGuide.com have seen success by automating risk tests and formalizing change management processes. Risk assessment becomes a core part of agile methodologies, especially for machine learning projects that require continuous monitoring.
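
Embedding a risk check into a development workflow can be as simple as a function that compares a candidate model's metrics against agreed limits before release. The metric names and limits below are hypothetical; real values would come from your own risk register.

```python
# Hypothetical limits: "min" means the metric must not fall below the value,
# "max" means it must not exceed it.
GATES = {
    "accuracy": ("min", 0.90),
    "demographic_parity_difference": ("max", 0.10),
    "p95_latency_ms": ("max", 300.0),
}


def failed_gates(metrics, gates=GATES):
    """Return human-readable failures; an empty list means the release may proceed."""
    failures = []
    for name, (kind, limit) in gates.items():
        if name not in metrics:
            # A missing metric is treated as a failure, not silently skipped.
            failures.append(f"{name}: metric missing")
            continue
        value = metrics[name]
        if kind == "min" and value < limit:
            failures.append(f"{name}: {value} is below minimum {limit}")
        elif kind == "max" and value > limit:
            failures.append(f"{name}: {value} is above maximum {limit}")
    return failures


# Hypothetical evaluation results for a release candidate.
candidate = {"accuracy": 0.93, "demographic_parity_difference": 0.14,
             "p95_latency_ms": 210.0}
problems = failed_gates(candidate)
for line in problems:
    print("FAIL", line)
```

In a CI job, a non-empty list would typically be turned into a non-zero exit code so the pipeline stops. Treating a missing metric as a failure keeps a skipped evaluation from slipping through unnoticed.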

Technical Challenges Summary:

- Integrate risk tests into development workflows.

- Automate change management processes for speed and efficiency.

- Continuous risk reviews help catch issues early.

Overcoming Organizational Barriers

Technical hurdles are not the only obstacles. Resistance from teams that fear AI may change familiar workflows is also common. I have seen companies where staff undermined implementation by clinging to old methods. Leadership support makes a big difference. Data shows that companies with active executive champions see 73% higher success in AI risk framework adoption.

Communication gaps between tech teams and business units can leave each side speaking a different language, which hinders progress. Clear guidelines that connect technical concepts with business value help bridge this gap. Regular stakeholder meetings turn potential blockers into supportive partners.

Organizational Barriers Summary:

- Cross-functional teams reduce resistance and improve outcomes.

- Clear communication bridges gaps between technical and business groups.

- Engagement sessions convert blockers into proactive allies.

Addressing regulatory and compliance issues

Regulatory compliance for AI systems can feel like a game where the rules change at every level. Tech leaders face a maze of regulations across different regions, with the EU AI Act imposing penalties that can strain smaller companies.

Strong compliance practices help companies save an average of $3.05 million per data breach. I have seen many clients realize their AI tools violated data protection laws they were unaware of. The solution is to create a cross-functional compliance team that includes legal experts who understand technology. Map your AI systems against standards like ISO/IEC 42001:2023 to spot gaps before regulators do.

Document everything, as "we did not know" will not save your business from fines. With 72% of companies using AI but only 9% managing the risks properly, building strong compliance offers a competitive advantage.

Regulatory Compliance Summary:

- Strong compliance practices reduce the risk of fines.

- Automated monitoring identifies potential legal breaches.

- Cross-functional compliance teams improve overall readiness.

Tools and Resources for AI Risk Management

Finding the right tools can turn AI risk management from a headache into a manageable task. Platforms like IBM's AI Fairness 360 toolkit or Microsoft's Responsible AI Toolbox offer ready-to-use resources for risk assessment and AI governance.

Risk assessment tools and software

Tech leaders need practical tools to manage AI risks without drowning in technical details. Software solutions can simplify implementing AI risk management frameworks, much like having a smart co-pilot that helps monitor your systems.

- Hyperproof offers specialized compliance tools for NIST AI RMF alignment, complete with ready-to-use framework templates that save setup time.

- Centralized risk registers let teams track all AI risks in one place, making it easier to spot patterns and fix issues before they grow.

- Real-time dashboards offer an at-a-glance view of your AI risk posture, similar to how a fitness tracker shows vital health stats.

- Automated evidence collection tools gather proof for compliance without the tedious manual search for documents.

- Pre-configured NIST CSF 2.0 templates help blend cybersecurity concerns with AI risk management for a fuller safety net.

- Risk scoring systems help prioritize which AI issues need attention first, so resources are not wasted on minor glitches.

- Workflow automation tools route approvals and reviews to the right people at the right time, cutting through bureaucratic delays.

- Audit trail generators create detailed records of all risk-related decisions and actions, forming a valuable paper trail for reviews.

- Scenario planning software simulates how your AI systems might respond to various risks before real issues occur.

- Compliance mapping tools connect your controls to multiple regulatory standards without duplicating work.

Technology Tools Summary:

- Specialized tools simplify compliance and risk tracking.

- Real-time dashboards provide quick, clear insights.

- Automation reduces manual effort in gathering documentation.

Framework-specific guidelines and templates

Framework-specific guidelines and templates serve as your guide in a complex regulatory environment. These resources turn abstract frameworks into actionable steps that fit your organization's needs.

- NIST AI RMF Playbook offers voluntary guidance with documentation templates that adjust to industry needs and organizational maturity. It is like an AI risk management cookbook with flexible recipes.

- EU AI Act templates provide structured approaches for classifying your AI systems into risk categories with matching compliance checklists for each level.

- ISO 42001 implementation guides break the standard's requirements into practical steps with gap analysis tools that show where current practices need improvement.

- Hyperproof's custom framework tools allow CSV uploads for frameworks like the EU AI Act and Japan's guidelines, which means you do not need to start from scratch.

- Industry-specific template libraries offer pre-built risk assessment matrices that cover common risk scenarios in sectors such as healthcare, finance, manufacturing, and retail.

- Model card templates standardize how you document AI model characteristics, limitations, and intended uses for clear stakeholder communication.

- AI impact assessment worksheets guide teams through evaluating potential social, ethical, and legal impacts before deployment with scoring systems to rank risks.

- Governance structure blueprints lay out roles, responsibilities, and reporting lines for AI oversight committees, complete with sample meeting agendas and decision logs.

- Incident response playbooks provide step-by-step instructions for handling AI system failures or unexpected behaviors, with ready-made communication templates.

- Vendor assessment questionnaires help evaluate third-party AI tools against your risk management standards before integration.
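
As an illustration of what a model card template might capture, here is a small sketch. The fields are a simplified subset chosen for this example, not the official Model Cards schema, and the model described is hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ModelCard:
    name: str
    version: str
    intended_use: str
    out_of_scope_uses: list[str] = field(default_factory=list)
    training_data: str = "not documented"
    known_limitations: list[str] = field(default_factory=list)
    owner: str = "unassigned"

    def missing_fields(self) -> list[str]:
        """Names of fields still empty or at their placeholder value."""
        gaps = []
        if self.training_data == "not documented":
            gaps.append("training_data")
        if not self.known_limitations:
            gaps.append("known_limitations")
        if self.owner == "unassigned":
            gaps.append("owner")
        return gaps

    def render(self) -> str:
        """Plain-text summary suitable for a review packet."""
        lines = [
            f"Model: {self.name} (version {self.version})",
            f"Owner: {self.owner}",
            f"Intended use: {self.intended_use}",
            f"Training data: {self.training_data}",
            "Out-of-scope uses: " + ("; ".join(self.out_of_scope_uses) or "none listed"),
            "Known limitations: " + ("; ".join(self.known_limitations) or "none listed"),
        ]
        return "\n".join(lines)


# Hypothetical card that is only partly filled in.
card = ModelCard(
    name="support-ticket-router",
    version="0.3.1",
    intended_use="Route inbound support tickets to the right queue",
    out_of_scope_uses=["Employee performance evaluation"],
)
print(card.render())
print("Incomplete:", card.missing_fields())
```

The `missing_fields` check is the useful part: it turns "documentation is incomplete" into a concrete list that a governance review can block on.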

Template Guidelines Summary:

- Templates simplify the implementation of regulatory standards.

- Industry-specific matrices address diverse application needs.

- Tools like model cards help standardize AI documentation.

Best Practices for Effective AI Risk Management

Best practices for AI risk management are not mere checkboxes; they form the safety net that stops issues before they become major problems. Successful teams build continuous feedback loops that catch issues early and use cross-functional collaboration to ensure no risk is left unaddressed.

Continuous monitoring and improvement

AI systems do not sit still. They learn and sometimes behave in unexpected ways. Regular monitoring acts as an early warning system to stop small issues from growing. Data shows that routine feedback greatly improves risk detection rates. This process is much like using a fitness tracker to monitor vital signs.

Setting up routine checkpoints beats the "set it and forget it" method. Your monitoring system should track performance metrics, bias indicators, and security protocols in real time.
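
One simple drift signal a monitoring system can track is the Population Stability Index (PSI), which compares the distribution of model scores today against the distribution at launch. The sketch below assumes both histograms use the same bins; the counts are made up, and the 0.1 and 0.25 thresholds are widely quoted rules of thumb rather than regulatory limits.

```python
import math


def population_stability_index(expected_counts, actual_counts, epsilon=1e-6):
    """PSI between two histograms that share the same bins."""
    if len(expected_counts) != len(actual_counts):
        raise ValueError("histograms must have the same number of bins")
    expected_total = sum(expected_counts)
    actual_total = sum(actual_counts)
    if expected_total == 0 or actual_total == 0:
        raise ValueError("histograms must not be empty")
    psi = 0.0
    for e_count, a_count in zip(expected_counts, actual_counts):
        # epsilon keeps empty bins from producing log(0) or division by zero.
        e = max(e_count / expected_total, epsilon)
        a = max(a_count / actual_total, epsilon)
        psi += (a - e) * math.log(a / e)
    return psi


def drift_status(psi, warn=0.1, alert=0.25):
    # Thresholds are rules of thumb; tune them to your own risk tolerance.
    if psi >= alert:
        return "alert"
    if psi >= warn:
        return "warn"
    return "ok"


# Hypothetical histograms of model scores over five shared bins.
training_scores = [120, 300, 380, 150, 50]    # at launch
this_week_scores = [60, 180, 360, 260, 140]   # current traffic

psi = population_stability_index(training_scores, this_week_scores)
print(round(psi, 3), drift_status(psi))
```

PSI only says that inputs or scores have shifted, not whether the shift is harmful, so an alert should trigger a human look at accuracy and bias indicators rather than an automatic rollback.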

Monitoring Summary:

- Regular feedback loops boost risk detection.

- Performance metrics guide continuous improvement.

- Training sessions keep teams updated on risk protocols.

Collaboration across teams and stakeholders

AI risk management is not a solo mission. Think of it as assembling a team, where every member plays a role in avoiding disasters. Data shows that cross-functional teams catch 73% more potential issues than siloed approaches.

Regular meetings and clear communication help connect technical and business sides. This teamwork turns potential obstacles into strengths that support successful implementation.

Collaboration Summary:

- Cross-functional teams catch more issues.

- Regular stakeholder check-ins build collective strength.

- Diverse perspectives enhance risk management.

Integrating ethical considerations into AI development

Many companies add ethics as an afterthought, like putting racing stripes on a minivan and calling it a sports car. Ethical principles need to be built into your AI from day one. My teams at WorkflowGuide.com use structures that put fairness, transparency, and privacy at the center of development.

An ethics review can catch issues before they do damage. One client nearly launched a customer service AI that favored certain accents until our ethics committee intervened.
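One way an ethics review might surface this kind of problem is to compare outcome rates across user groups. The Python sketch below is a minimal example under stated assumptions: the group labels, the synthetic records, and the helper names `success_rate_by_group` and `max_gap` are all hypothetical, and a gap in success rates is only one of several possible fairness measures.

```python
from collections import defaultdict


def success_rate_by_group(records):
    """Map each group to its share of successful outcomes.

    records: iterable of (group, succeeded) pairs.
    """
    totals = defaultdict(int)
    successes = defaultdict(int)
    for group, succeeded in records:
        totals[group] += 1
        successes[group] += int(succeeded)
    return {group: successes[group] / totals[group] for group in totals}


def max_gap(rates):
    """Difference between the best-served and worst-served group."""
    return max(rates.values()) - min(rates.values())


# Synthetic data for illustration: did the assistant resolve the request?
sample = [
    ("accent_a", True), ("accent_a", True), ("accent_a", True), ("accent_a", False),
    ("accent_b", True), ("accent_b", False), ("accent_b", False), ("accent_b", False),
]

rates = success_rate_by_group(sample)
gap = max_gap(rates)
print(rates, gap)
```

A large gap does not prove unfair treatment on its own, but it gives the review a concrete number to investigate before launch.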

Stronger ethical practices lead to higher user trust and fewer support issues. Responsible AI is not just a compliance step; it is a competitive advantage.

Ethical Integration Summary:

- Embedding ethics early avoids later issues.

- Clear fairness measures support responsible AI.

- Team involvement ensures ethical practices align with business goals.

Case Studies of Successful AI Risk Management Implementation

Real-world success stories show how AI risk management frameworks work in practice. IBM's AI Ethics Board and Google's Secure AI Framework (SAIF) offer lessons in stakeholder engagement and practical mitigation strategies that any organization can adapt.

IBM's AI Ethics Board

IBM's AI Ethics Board stands as a gold standard for tech governance in the AI space. This oversight group reviews company ethics policies and tackles risks from generative AI systems with a practical, no-nonsense approach.

The board does more than talk about ethics; it creates guardrails that protect the company and its customers from potential AI mishaps. Fewer than 25% of organizations have put effective ethics governance into practice, making IBM's method a blueprint for others.

Case Study Summary (IBM):

- IBM's board reviews ethics policies and manages risks effectively.

- Structured oversight boosts accountability and decision-making.

- This method serves as a model for other organizations.

Google's Secure AI Framework (SAIF)

Google launched its Secure AI Framework (SAIF) on June 8, 2023, setting a standard for AI security practices. The framework addresses severe AI threats like model theft, data poisoning, and prompt injection attacks.

Think of SAIF as a Swiss Army knife for AI security. It has six core elements that cover everything from basic security protocols to advanced threat detection and automated defense measures. Google also plays an active role in setting industry standards like the NIST AI Risk Management Framework, making SAIF part of a broader ecosystem.

For business owners just starting with AI, SAIF provides clear security steps that grow with your needs.

Case Study Summary (Google):

- Google's SAIF outlines clear security protocols for AI systems.

- Its focus on continuous adaptation boosts long-term protection.

- Integration with industry standards strengthens overall security.

Conclusion

Implementing AI risk management is a crucial part of modern business strategy. Frameworks like NIST's AI RMF offer practical guidance for identifying risks, setting up governance, and building trust through transparency. AI systems need regular upkeep, much like vehicles, with documentation that tracks every decision and revision across the AI lifecycle. Leading companies like IBM and Google show that effective AI governance balances innovation, safety standards, and stakeholder accountability.

AI risk management is an ongoing process that calls for continuous monitoring and team collaboration as rules change. Begin by checking your current AI applications against these frameworks. Responsible AI not only protects your business but also builds the foundation for sustainable growth that earns customer trust.
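A first pass at that check can be as simple as listing each AI application alongside the NIST AI RMF functions (Govern, Map, Measure, Manage) for which you already have documented evidence. The Python sketch below is only an illustration: the system names, the inventory contents, and the `coverage_gaps` helper are hypothetical, and deciding what counts as "evidence" is left to your own governance process.

```python
# The four core functions of the NIST AI Risk Management Framework.
FUNCTIONS = ("Govern", "Map", "Measure", "Manage")


def coverage_gaps(inventory):
    """Return {system: [missing functions]} for every system with a gap.

    inventory: {system name: set of functions with documented evidence}.
    """
    gaps = {}
    for system, covered in inventory.items():
        missing = [function for function in FUNCTIONS if function not in covered]
        if missing:
            gaps[system] = missing
    return gaps


# Hypothetical inventory for illustration only.
inventory = {
    "support_chatbot": {"Govern", "Map"},
    "fraud_scoring": {"Govern", "Map", "Measure", "Manage"},
}

gaps = coverage_gaps(inventory)
for system, missing in gaps.items():
    print(f"{system}: missing {', '.join(missing)}")
```

The output is a short to-do list per system, which is usually enough to decide where to start.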

FAQs

1. What is an AI Risk Management Framework?

An AI Risk Management Framework helps companies spot and fix problems with their AI systems. Think of it as a safety net that catches issues before they cause harm. This framework guides teams through steps to identify, assess, and control risks in AI applications.

2. Why should my company implement an AI risk management strategy?

Your company needs this strategy to avoid costly AI failures. Bad AI can hurt your reputation and bottom line faster than a cat video goes viral. New rules about AI use are appearing worldwide, so getting ahead of compliance saves headaches later.

3. What are the key components of an effective AI risk framework?

A solid framework includes governance structures, risk assessment tools, and monitoring systems. It also needs clear roles for who handles what when problems arise. The best frameworks adjust as technology changes and new threats appear.

4. How long does it take to implement an AI risk management framework?

Implementation time varies by company size and AI maturity. Small companies might complete a basic setup in 3-6 months. Larger organizations with complex AI systems often need 12-18 months to roll out a complete program that covers every angle.

Disclosure: This content is provided for informational purposes only and does not constitute professional advice. The views expressed are based on practical work experience and should not replace expert risk assessment or legal consultation.

References

- https://nvlpubs.nist.gov/nistpubs/ai/nist.ai.100-1.pdf

- https://www.lumenova.ai/blog/ai-risk-management-importance-of-transparency-and-accountability/ (2024-05-28)

- https://www.wiz.io/academy/nist-ai-risk-management-framework

- https://www.europarl.europa.eu/topics/en/article/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence (2025-02-19)

- https://www.iso.org/standard/81230.html

- https://www.nist.gov/itl/ai-risk-management-framework

- https://rails.legal/resources/risk-management/

- https://www.frontiersin.org/journals/human-dynamics/articles/10.3389/fhumd.2024.1421273/full

- https://www.hbs.net/blog/ai-risk-management-framework (2024-06-20)

- https://www.modulos.ai/blog/implementing-an-ai-risk-management-framework-best-practices-and-key-considerations/ (2024-08-13)

- https://www.linkedin.com/pulse/article-3-overcoming-challenges-implementing-ai-risk-controls-singh-9adyc

- https://pmc.ncbi.nlm.nih.gov/articles/PMC11315296/

- https://www.phoenixstrategy.group/blog/ai-risk-management-frameworks-for-compliance

- https://www.paloaltonetworks.com/cyberpedia/nist-ai-risk-management-framework

- https://digitalgovernmenthub.org/library/nist-ai-risk-management-framework-playbook/

- https://hiddenlayer.com/innovation-hub/ai-risk-management-effective-strategies-and-framework/

- https://kestria.com/insights/integrating-ethical-principles-into-ai-development/ (2024-08-28)

- https://www.ibm.com/think/insights/a-look-into-ibms-ai-ethics-governance-framework

- https://blog.google/technology/safety-security/introducing-googles-secure-ai-framework/