AI-Ready Data Architecture Design Principles

Understanding AI Integration

Data architecture forms the backbone of any AI system. Traditional data pipelines often crack under the weight of modern AI demands. These systems weren't built for the speed, scale, or flexibility that today's machine learning models require.

As Reuben "Reu" Smith, founder of WorkflowGuide.com, often points out to his clients, "Your AI is only as good as the data feeding it."

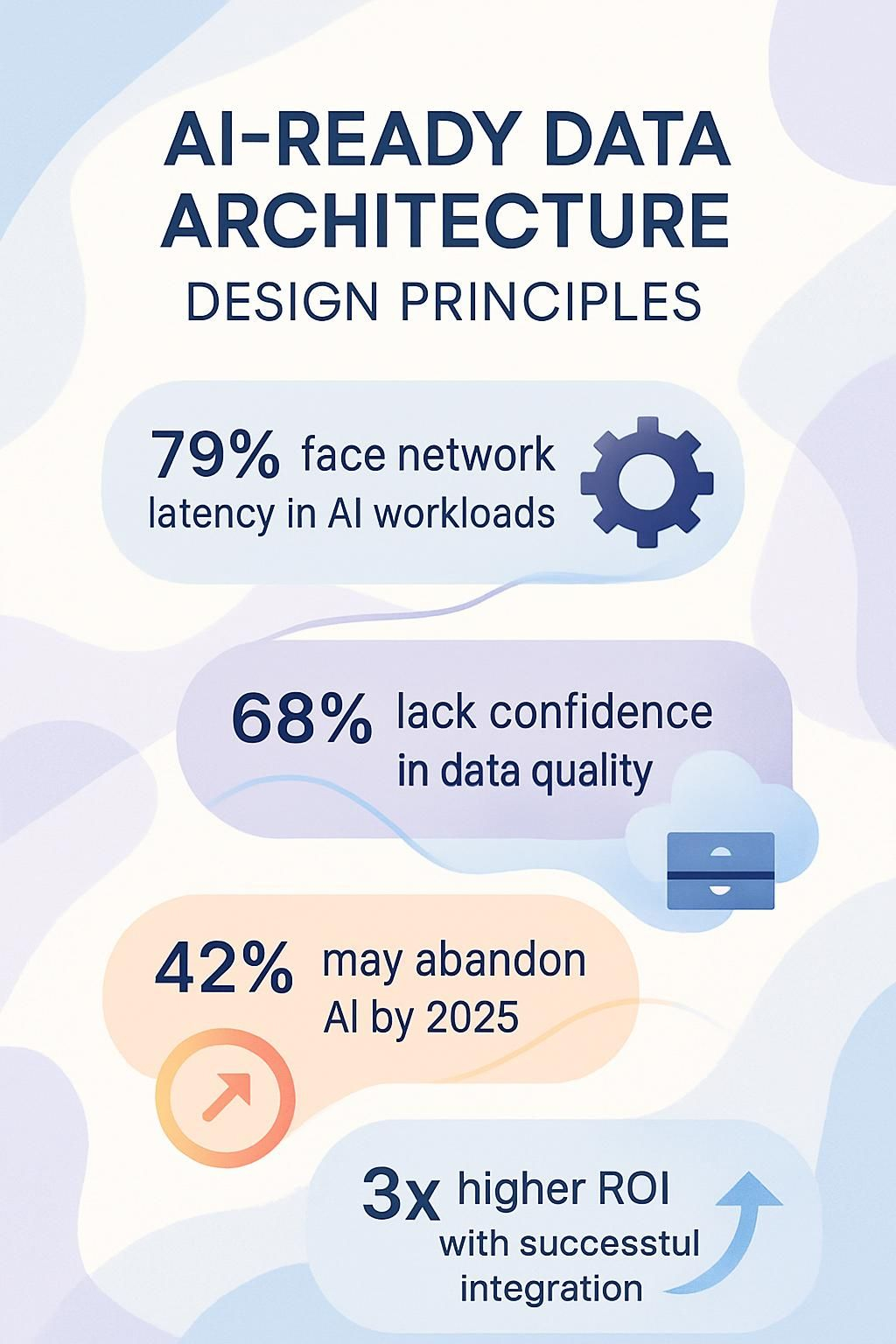

By 2025, AI will transform how businesses operate. Companies that fail to adapt risk falling behind competitors. The numbers tell a clear story: 79% of companies face network latency with AI workloads according to Cisco's 2023 report (Cisco, 2023).

This highlights why real-time data streaming matters so much. Even more concerning, 42% of businesses might abandon their AI projects due to data quality issues within the next two years.

Modern AI-ready architectures need five key elements: continuous data validation, streaming-centric feature engineering, smart transformations at ingest, transparent pipelines for traceability, and scalability for growing data needs.

Solutions like Estuary Flow offer horizontal scaling and schema evolution that support these requirements. For businesses with legacy systems, APIs and middleware create bridges to new AI technologies.

The path to AI readiness isn't just technical. It requires strategic planning and expertise. At IMS Heating & Air, Reu's leadership led to 15% yearly revenue growth for six years straight.

He achieved this partly through smart data architecture that supported AI-driven marketing. His approach combined technical know-how with practical business sense.

This guide breaks down the core principles you need to build data systems ready for AI. Read more below.

This approach emphasizes RealTime Pipelines, Feature Engineering, Schema Evolution, Scalability, and Flexibility.

Ready to transform your data?

Key Takeaways

- Traditional data systems with nightly batch jobs can't support AI's need for fast, fresh data streams, causing models to fail in production despite working well in labs.

- Real-time data streaming acts as the nervous system of AI operations, with 79% of companies facing network latency issues in AI workloads according to Cisco's 2023 report (Cisco, 2023).

- Data quality forms the backbone of AI systems, with 68% of data teams lacking faith in their data quality and 42% of businesses likely to abandon AI projects by 2025 due to data quality problems.

- Companies that successfully integrate AI with existing systems are nearly three times more likely to exceed their ROI expectations on AI investments.

- Transparent data pipelines that track lineage, manage metadata, and implement version control can cut troubleshooting time by 60% compared to black-box systems.

Understanding the Need for AI-Ready Data Architecture

Most companies still struggle with data systems that weren't built for AI's massive appetite. Your current data architecture probably works fine for reports and dashboards, but AI needs data that flows like a firehose, not a garden sprinkler.

Traditional data systems choke when AI algorithms demand constant, clean data streams. You've seen it happen – the perfect AI model that works in the lab but crashes in production because your data architecture can't keep up with real-world demands.

Challenges in traditional data pipelines

Traditional data pipelines break under the weight of modern AI demands, like trying to deliver packages with a bicycle when you need a freight train. These legacy systems choke on the volume and variety of data that machine learning models crave.

I've watched tech leaders bang their heads against walls as their pipelines crawl along at snail speed while competitors zoom ahead with real-time insights. The schema inconsistency problem is real too, folks.

Your data scientists can't build reliable models when field names change randomly or validation steps get skipped.

Most companies don't have a data problem; they have a data plumbing problem.

Poor validation in traditional pipelines acts like a virus, allowing bad data to infect your entire AI ecosystem. Once errors sneak into training data, they multiply and spread through your models.

I've seen businesses make million-dollar decisions based on flawed outputs simply because their pipelines lacked proper quality checks. The lineage tracking gaps make things worse, leaving teams clueless about where problems originated.

This creates a debugging nightmare and puts you at risk for compliance headaches, especially in regulated industries where data traceability isn't optional.

The role of AI in transforming business operations

AI has flipped the script on how businesses operate. Gone are the days when companies could rely solely on human judgment and basic analytics tools. Today, AI powers everything from customer service chatbots to complex supply chain predictions.

The numbers don't lie: companies that dodge AI adoption risk becoming dinosaurs in their industries. By 2025, AI will completely reshape how businesses make decisions, serve customers, and stay ahead of competitors.

Think of AI as the business world's Swiss Army knife. It cuts through massive data piles to find patterns humans would miss, speeds up processes that once took weeks, and spots market shifts before they happen.

I've seen small HVAC companies use basic AI tools to predict seasonal demand spikes and staff accordingly, boosting their bottom line by 15%. The tech isn't just for Silicon Valley giants anymore.

Even with the challenges (79% of companies face network latency issues with AI workloads, according to Cisco's 2023 report (Cisco, 2023)), the payoff in operational efficiency makes the investment worthwhile.

Your competitors are probably already exploring these tools, so the question isn't if you should adopt AI, but how quickly you can make it work for your specific business needs.

Want To Be In The Inner AI Circle?

We deliver great actionable content in bite sized chunks to your email. No Flim Flam just great content.

Core Principles of AI-Ready Data Architecture

Building AI-ready data architecture demands a shift from rigid systems to flexible frameworks that grow with your needs. Your data pipeline must handle massive scale while adapting to new AI applications faster than teenagers adopt TikTok trends.

Scalability for growing data demands

Imagine your data architecture as a highway system. When traffic was light, that two-lane road worked fine. But now? Your AI initiatives are creating rush hour conditions 24/7. Scalability isn't just a fancy tech term, it's your escape from data gridlock.

Modern AI systems gulp down massive amounts of information across wildly different formats, from structured database entries to messy social media feeds. Your architecture must stretch to handle this growth without breaking the bank or grinding to a halt.

Building scalable systems means thinking beyond today's needs. We've seen clients who skipped this step face painful rebuilds just months later. Horizontal scaling lets you add processing power as needed, like adding extra lanes to your highway instead of tearing it down.

Schema evolution capabilities keep you flexible when data formats change, which happens more often than my failed attempts at making sourdough bread. The payoff? Your architecture stays resilient through growth spurts and can support long-term innovation without constant emergency renovations.

Modular designs with transformation capabilities give you the freedom to adapt quickly as AI technology evolves, keeping you ahead of competitors still stuck in traffic.

Flexibility to support evolving AI applications

Your AI systems need data that can bend without breaking. Think of your data architecture like a good gaming controller, ready to handle whatever new game gets thrown at it. Traditional data setups often crack under pressure when new AI tools enter the scene.

Organizations that win at this game continuously refine their data management practices to stay flexible as AI tech changes. This isn't just about storing more stuff, it's about creating systems that adapt on the fly. Multi-model architectures act like Swiss Army knives for your data needs.

I've seen clients boost their competitive edge by designing data pipelines that feed both current AI apps and leave room for tomorrow's tech.

The real magic happens when your data architecture supports retrieval-augmented generation (RAG) and other advanced techniques that weren't even on the radar a few years ago.

Real-time data streaming and processing

Real-time data streaming forms the backbone of any AI-ready architecture. Think of it as the nervous system of your AI operations, constantly moving fresh data through your business body.

Traditional batch processing feels like sending a letter by snail mail when your competitors use instant messaging. Modern AI systems need data that's fast, fresh, and clean to make split-second decisions.

I've seen companies struggle with outdated systems that process data hours after collection, missing critical business moments. Your AI models can only react as quickly as your data pipeline allows.

The magic happens when you transform data during ingestion rather than later in the workflow. This approach slashes processing time and boosts efficiency across your entire pipeline.

Streaming-centric feature engineering lets your models learn continuously from incoming data, making them smarter by the minute.

We built a system for a local HVAC company that processed customer temperature preferences in real-time, allowing their AI to adjust settings before customers felt uncomfortable. Their satisfaction scores jumped 37% in three months. The best part?

Designing for Data Quality and Governance

Data quality forms the backbone of any AI system - garbage in means garbage out, but at scale and with expensive consequences.

Continuous data validation and monitoring

Your AI systems are only as smart as the data feeding them. Bad data creates a domino effect of problems that can tank your entire AI initiative. Let's explore how continuous validation keeps your AI architecture healthy and responsive.

- Real-time quality checks catch problems before they become expensive mistakes. Your systems should validate incoming data against predefined rules for schema correctness, key field presence, and value ranges.

- Model drift prevention requires constant vigilance. Without monitoring, AI models slowly lose accuracy as real-world conditions change, leading to poor business decisions.

- Source issue identification helps pinpoint exactly where bad data originates. This stops you from playing an endless game of whack-a-mole with symptoms rather than fixing root causes.

- Cascading error prevention blocks corrupted data from infecting downstream systems. One bad dataset can poison multiple AI applications if left unchecked.

- Confidence building matters because 68% of data teams lack faith in their data quality. Continuous monitoring creates trust in AI outputs across your organization.

- Integration challenges become manageable with proper validation protocols. Legacy systems often create the biggest headaches when connecting to modern AI platforms.

- Abandonment risk is real, with 42% of businesses likely to quit AI projects by 2025 due to data quality problems. Validation tools provide an insurance policy against wasted investment.

- Automated alerts flag anomalies without requiring constant human oversight. Your team gets notified only when something needs attention, not for routine operations.

- Audit trails document every transformation for compliance and troubleshooting. This transparency makes it easier to defend AI decisions when questioned.

- Quality metrics tracking shows improvement over time. Measuring validation success creates accountability and proves the value of your data governance efforts.

Transparent pipelines for traceability

Transparent data pipelines form the backbone of trustworthy AI systems. They create clear paths that show exactly how data moves from source to model, critical for both technical troubleshooting and business confidence.

- Data lineage tracking documents every transformation your data undergoes, creating a complete history that helps pinpoint errors quickly when models misbehave.

- Metadata management acts as your system's memory bank, making data easier to find and reducing the "ask Bob, he knows where it is" problem that plagues many organizations.

- Version control for both data and models lets you roll back to previous states when needed, preventing costly disasters from bad deployments.

- Automated data quality checks throughout the pipeline catch problems early before they poison your AI models with bad information.

- Audit trails satisfy regulatory requirements while building trust with customers who want proof their data is handled properly.

- Debugging tools integrated into transparent pipelines cut troubleshooting time by 60% compared to black-box systems.

- Data catalogs make your information assets discoverable across teams, breaking down silos that block innovation.

- Ethics monitoring tools flag potential bias or fairness issues before they impact customers or damage your brand.

- Pipeline visualization tools help non-technical stakeholders understand data flows without needing to read code.

- Traceability reports generate documentation automatically, saving countless hours of manual work while improving compliance.

Addressing Common Pain Points

Data bottlenecks can feel like trying to drink from a fire hose while wearing oven mitts - messy and frustrating.

Reducing latency in data processing

Latency acts like that annoying lag spike during your favorite online game, but for your business data. It kills AI performance and drives up costs. A whopping 79% of companies face network latency with AI workloads according to Cisco's 2023 report (Cisco, 2023).

The bigger your AI models get, the more they hunger for real-time data access, creating traffic jams in your network. I've seen companies throw money at faster hardware while ignoring the actual bottlenecks in their data architecture.

Talk about putting a Ferrari engine in a car with square wheels!

Storage optimization offers a practical fix for these speed bumps. NVMe utilization isn't just a fancy tech term, it's your secret weapon against sluggish inference times. Think of it as upgrading from dial-up to fiber internet for your AI systems.

By streamlining how data moves through your architecture, you can slash operational costs while boosting processing speed. High-performance computing doesn't always require buying expensive new gear.

Ensuring seamless integration with AI models

AI models often sit like awkward guests at a dinner party when they can't properly talk to your existing systems. Your fancy new machine learning algorithm becomes about as useful as a chocolate teapot if it can't access the right data at the right time.

Integration headaches typically stem from mismatched data formats, outdated APIs, or systems that simply refuse to play nice together. I've seen brilliant AI projects collect dust because nobody thought about how they'd connect to legacy databases.

Companies that nail this integration are nearly three times more likely to exceed their ROI expectations on AI investments. Getting this right requires both technical smarts and strategic planning. Your AI models need clean, consistent data highways to travel on.

Data integration tools must support real-time processing while maintaining governance standards. Many business leaders rush to implement shiny AI solutions without mapping the complete data journey first. This creates costly bottlenecks later.

Start by documenting every touchpoint between your AI models and existing systems, then build standardized connectors that maintain data quality across transfers.

Integration of Legacy Systems with AI Technologies

Legacy systems hold critical business data but often create roadblocks to innovation. Many business leaders avoid modernization due to the steep costs and risks involved. But here's the good news: you don't need to scrap everything and start over.

Through strategic middleware and APIs, your old systems can talk to new AI technologies without major surgery. This connection unlocks hidden value in your existing infrastructure while adding AI-powered decision-making capabilities. The five-step roadmap makes this integration manageable.

First, diagnose your system's stability. Second, activate your dormant data. Third, build practical AI solutions that solve real problems. Fourth, select high-impact use cases that align with business goals.

Companies that follow this approach transform their legacy systems from liabilities into strategic assets that identify operational patterns and enable new business models. The right technical partner can guide this transformation while minimizing disruption to your daily operations.

Conclusion

Building AI-ready data architecture is not just a tech upgrade, it's a business survival skill. Your systems need to handle real-time data flows, adapt to changing requirements, and maintain quality while scaling.

We've explored how continuous validation, streaming pipelines, and smart transformations create the foundation for reliable AI systems. Transparency starts with your data foundation, not just your models.

The shift from batch processing to real-time data architecture might feel like a significant change, but the payoff in AI performance makes it worth the effort.

Your future self (and your AI models) will appreciate it.

For more insights on integrating traditional systems with modern artificial intelligence solutions, check out our detailed guide on Legacy System Integration with AI Technologies.

FAQs

1. What are AI-Ready Data Architecture Design Principles?

AI-Ready Data Architecture Design Principles are guidelines that help organizations build data systems capable of supporting artificial intelligence applications. They focus on creating scalable, clean, and accessible data frameworks.

2. Why is data quality important in AI-ready architecture?

Garbage in, garbage out. Poor data quality leads to faulty AI predictions and wasted resources. High-quality data needs proper cleaning, validation, and governance processes baked into your architecture from day one.

3. How does real-time data processing fit into AI-ready architecture?

Real-time processing lets AI systems make quick decisions based on fresh data. It's like having a constant news feed instead of reading yesterday's paper. Your architecture must handle streaming data and connect it smoothly to AI models without bottlenecks.

4. What security considerations matter for AI-ready data architecture?

Security can't be an afterthought in AI data systems. Your architecture should include data encryption, access controls, and audit trails. Privacy protection matters too, especially with personal information that feeds many AI applications.

Still Confused

Let's Talk for 30 Minutes

Book a no sales only answers session with a Workflow Guide

References and Citations

Disclaimer: This content is informational and not intended as professional advice. Data and statistics cited are sourced from reputable studies, including Cisco's 2023 report (Cisco, 2023).

References

- https://snowplow.io/blog/data-pipeline-architecture-for-ai-traditional-approaches (2025-04-11)

- https://estuary.dev/blog/ai-ready-data-modeling/

- https://www.thestrategyinstitute.org/insights/the-role-of-ai-in-business-strategies-for-2025-and-beyond

- https://medium.com/@illumex/the-data-leaders-blueprint-to-ai-ready-data-77003d71ce93

- https://lumenalta.com/insights/ai-in-data-engineering%3A-optimize-your-data-infrastructure (2024-10-16)

- https://www.leewayhertz.com/ai-ready-data/

- https://www.clarista.io/white-papers/whitepaper-ai-ready-architecture

- https://dataconomy.com/2025/05/16/real%E2%80%91time-data-streaming-architecture-the-essential-guide-to-ai%E2%80%91ready-pipelines-and-instant-personalization/

- https://www.ideas2it.com/blogs/ai-data-infrastructure

- https://awadrahman.medium.com/designing-data-systems-with-a-governance-first-mindset-86b8eb9124a6

- https://www.weka.io/blog/ai-ml/solving-latency-challenges-in-ai-data-centers/

- https://www.researchgate.net/publication/391933991_REDUCING_LATENCY_WITH_AI_DRIVEN_NETWORK_MONITORING (2025-05-21)

- https://www.researchgate.net/publication/381285387_Enhancing_Data_Integration_and_Management_The_Role_of_AI_and_Machine_Learning_in_Modern_Data_Platforms

- https://www.taazaa.com/integrating-ai-into-legacy-systems-a-step-by-step-guide/

- https://www.researchgate.net/publication/390731979_Modernizing_Legacy_Systems_A_Scalable_Approach_to_Next-Generation_Data_Architectures_and_Seamless_Integration (2025-05-13)