AI Privacy Protection and Data Security Protocols

Understanding AI Integration

AI privacy protection refers to safeguarding personal data used by artificial intelligence systems. Like putting a force field around your digital self, these protections help prevent unwanted access to your information.

Data security has become critical as AI systems process massive amounts of personal details daily. According to recent stats, over 25% of American startup investments in 2023 went to AI companies, showing how important this technology has become in our lives.

The privacy landscape gets tricky when AI enters the picture. Many systems work like black boxes, making it hard to see how they use our data. This lack of transparency creates problems when personal information gets used without permission or leads to unfair outcomes for certain groups.

Thankfully, solutions exist. Privacy-preserving techniques like differential privacy and federated learning allow AI to analyze data without exposing personal details. These approaches will be crucial as regulations tighten worldwide.

The EU AI Act, for example, allows fines of up to €35 million or 7% of a company's worldwide annual turnover, whichever is higher, for the most serious violations.

Reuben "Reu" Smith, founder of WorkflowGuide.com, has seen these challenges directly. With experience building over 750 workflows and generating $200M for partners, he understands how proper AI implementation protects both businesses and customers.

His approach focuses on problem-solving first, not just throwing technology at issues.

Leading tech companies now emphasize ethical frameworks for AI development. Google, Microsoft, and IBM have created guidelines centered on transparency, fairness, and privacy protection.

Analysts projected that by 2024, synthetic data would make up 60% of the data used to train AI, creating new opportunities and risks.

This article explores how businesses can protect privacy while leveraging AI's power. We'll cover practical strategies to secure your data. Read on for more.

Key Takeaways

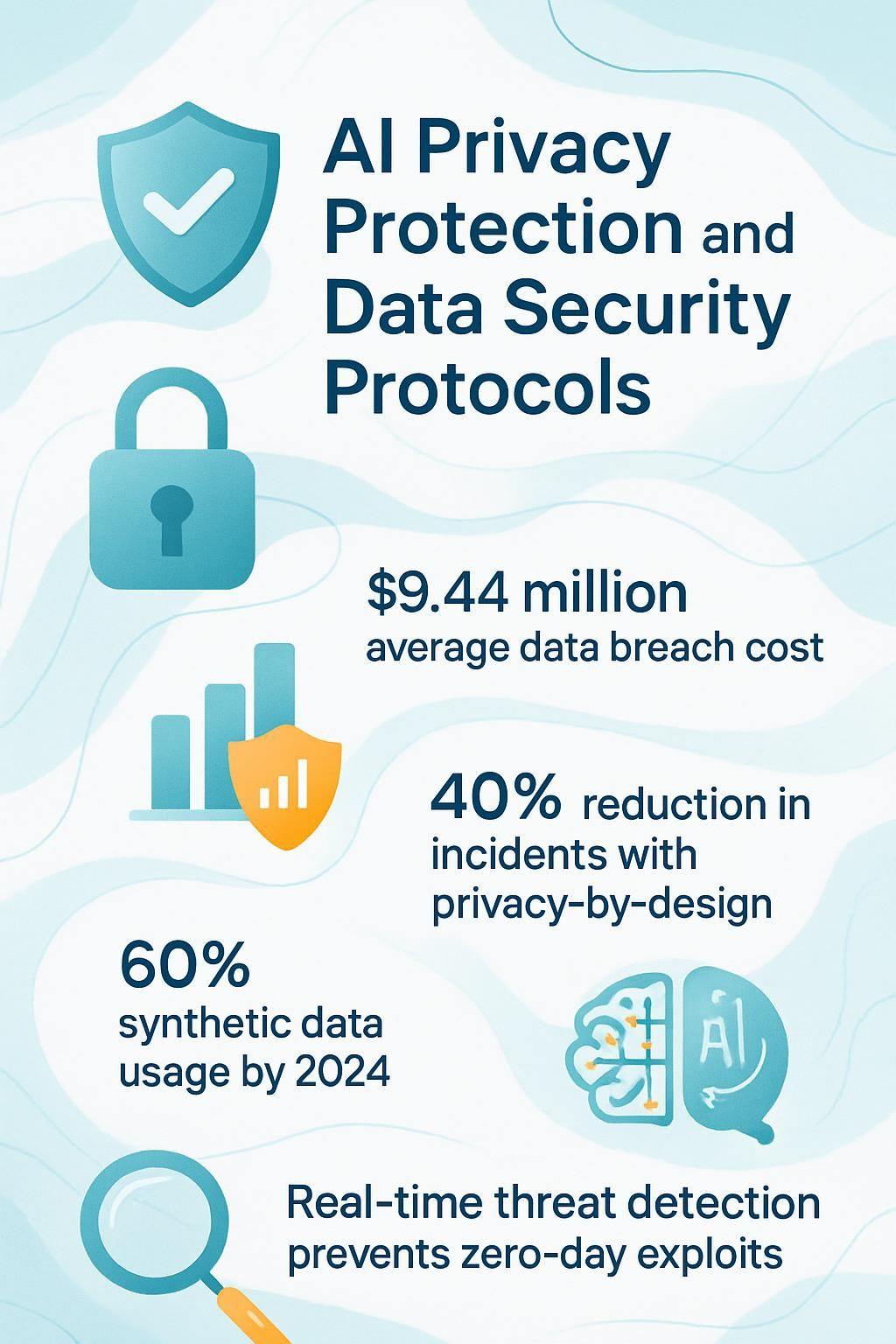

- AI privacy protection forms the backbone of trust between users and intelligent systems, with data breaches costing American companies an average of $9.44 million per incident.

- Privacy-by-design approaches save headaches by building protection into AI systems from day one, with some companies cutting security incident rates by 40% using these principles.

- Most AI models retain user input data indefinitely, creating permanent digital footprints that pose serious risks including identity theft and loss of anonymity.

- Synthetic data models that mimic real patterns without exposing actual user information were projected to make up 60% of AI training data by 2024.

- AI-powered security tools provide real-time threat detection by learning normal business patterns and spotting deviations, protecting against zero-day exploits without requiring advanced cybersecurity knowledge.

Understanding AI Privacy Protection

AI privacy protection forms the backbone of trust between users and intelligent systems, guarding personal information from misuse or exposure. Your data deserves Fort Knox-level security protocols that adapt to evolving threats while respecting your right to control what gets shared and with whom.

What is AI privacy?

AI privacy refers to the set of practices that protect personal data used by artificial intelligence systems. Think of it like a digital shield that guards your information from prying eyes.

AI systems gobble up massive amounts of data, including your personal details, shopping habits, and even health records. Without proper safeguards, this data could end up in the wrong hands or be used in ways you never agreed to.

Privacy-preserving techniques like differential privacy act as bouncers at the club of your personal information. These bouncers let AI report overall patterns while limiting what anyone can learn about a single individual, similar to knowing a party's vibe without knowing each guest's secrets.

Legal and standards frameworks such as GDPR and the voluntary NIST Privacy Framework establish the rules of engagement, while methods like federated learning allow AI to learn from your data without moving the raw data off your device.
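To make the federated learning idea concrete, here is a minimal Python sketch of federated averaging under simplifying assumptions: a toy one-feature linear model, three hypothetical devices, and plain gradient descent. Each device trains on its own records and returns only updated weights, so the server never sees the raw data. Real deployments add secure aggregation and often differential privacy on top, because model updates alone can still leak information.

```python
from typing import List, Tuple

Weights = Tuple[float, float]  # (slope w, intercept b) of a toy model y = w*x + b


def local_train(weights: Weights, data: List[Tuple[float, float]],
                lr: float = 0.05, epochs: int = 20) -> Weights:
    """Runs gradient descent on one device's private data; only the new weights are returned."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def federated_average(updates: List[Tuple[Weights, int]]) -> Weights:
    """Server step: averages device weights, weighted by each device's sample count."""
    total = sum(count for _, count in updates)
    w = sum(weights[0] * count for weights, count in updates) / total
    b = sum(weights[1] * count for weights, count in updates) / total
    return w, b


def run_rounds(clients: List[List[Tuple[float, float]]], rounds: int = 30) -> Weights:
    global_weights: Weights = (0.0, 0.0)
    for _ in range(rounds):
        # Each device trains locally; only (weights, sample_count) leaves the device.
        updates = [(local_train(global_weights, data), len(data)) for data in clients]
        global_weights = federated_average(updates)
    return global_weights


if __name__ == "__main__":
    # Three hypothetical devices whose private data all follow y = 2x + 1.
    devices = [
        [(0.0, 1.0), (1.0, 3.0)],
        [(2.0, 5.0), (0.5, 2.0)],
        [(1.5, 4.0), (1.0, 3.0), (0.0, 1.0)],
    ]
    w, b = run_rounds(devices)
    print(f"learned model: y = {w:.2f}x + {b:.2f}")
```

The server only ever handles weight pairs and sample counts; weighting by sample count keeps devices with more data from being drowned out by devices with very little.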

For business owners, implementing these protections isn't just ethical; it's becoming a competitive advantage as customers grow more privacy-conscious.

The importance of data security in AI systems

While AI privacy sets the foundation for ethical data handling, data security forms the backbone of trustworthy AI systems. Data security in AI doesn't just protect information; it safeguards your entire business reputation.

Think of it like the difference between knowing the rules of a game (privacy) and actually having a strong defense strategy (security). For tech-savvy business leaders, this distinction matters because data breaches cost American companies an average of $9.44 million per incident.

Your AI systems process massive amounts of sensitive information daily, creating what I call "digital gold mines" that hackers are constantly trying to raid.

Data security isn't just a technical requirement; it's the invisible shield that protects your AI's decision-making integrity.

Security protocols in AI systems go beyond basic encryption. They maintain the integrity of decision-making processes that your business relies on. Without proper security measures, your AI could make recommendations based on compromised data, leading to poor business decisions.

GDPR and CCPA compliance isn't optional anymore, and AI actually helps meet these complex regulatory demands through automated monitoring. The most effective AI systems use layered protection approaches, including data minimization techniques that limit exposure risks.

This strategic approach reduces your liability while building customer trust, which translates directly to your bottom line.


Key Challenges in AI Privacy and Data Security

AI systems often hide their inner workings, making it hard to know how your data is being used or protected. Personal information can be scooped up without permission and used to make decisions that hurt certain groups of people.

Lack of transparency in AI algorithms

AI algorithms often operate as mysterious "black boxes" where inputs go in and outputs come out, but nobody can explain what happens in between. This lack of clarity creates serious trust issues for businesses trying to adopt AI tools.

The complex math and logic inside these systems make it hard to track how decisions get made. Many tech-savvy leaders find themselves stuck between wanting AI's benefits and fearing its hidden risks.

In 2023, over 25% of investment in American startups went to AI companies, yet accountability remains a major concern.

The transparency problem worsens as algorithms grow more intricate. Business owners can't defend decisions they don't understand, especially when those decisions affect customers or employees.

Imagine trying to explain why your AI denied someone a loan or flagged a transaction as fraudulent without knowing the actual reasons! This mystery creates accountability gaps that can damage your reputation and even lead to legal troubles.

Clear guidelines and regular audits help shine light into these dark corners of AI decision-making. Now let's explore how unauthorized use of personal data compounds these privacy challenges.

Unauthorized use of personal data

Companies gather your data like squirrels collect nuts, but unlike squirrels, they don't always ask permission first. Tech-savvy business owners face a growing threat: personal information collected through AI systems gets used without proper consent.

Your customers' names, browsing habits, and purchase history become corporate assets without their knowledge. This unauthorized data usage creates serious risks including identity theft and loss of anonymity.

A recent study showed that most AI models retain user input data indefinitely, turning casual interactions into permanent digital footprints.

Privacy isn't just a checkbox on your compliance form. It's the trust your customers place in your digital hands every time they interact with your business. - Reuben Smith

The dangers multiply when sensitive information enters the mix. Medical details, financial records, and location data harvested through AI interactions become valuable commodities on both legitimate and dark markets.

Small business owners often assume their operations are too modest to attract data thieves, but this dangerous misconception leaves systems vulnerable. Your customer database represents a gold mine to the right buyer, regardless of company size.

Misused data is only part of the picture; the same systems can also produce unfair outcomes.

Risks of discriminatory outcomes

AI systems often mirror biases found in their training data, creating an echo chamber of unfairness. These systems don't decide to discriminate on their own; they amplify patterns from historical data that may contain prejudice against certain groups.

For example, an AI recruitment tool might favor male candidates if trained on data from an industry historically dominated by men. The "black box effect" makes it harder to detect these biases until they affect many people.

This lack of transparency means discriminatory outcomes might go unnoticed until they've impacted thousands. A loan approval algorithm might reject applicants from specific zip codes, reinforcing existing economic divides without overtly stating race or income.

Such unintentional biases can harm individuals while damaging brand reputation and increasing legal liability. The fairness of your AI systems directly influences both customer trust and your bottom line.
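One practical way to catch this kind of bias is to compare outcome rates across groups. The sketch below applies the "four-fifths rule," a screening heuristic from US employment guidelines that flags any group whose selection rate falls below 80% of the best-performing group's rate. The group labels and decisions are hypothetical, and passing this check does not prove a system is fair; it only tells you where to look harder.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of positive outcomes (e.g. 'approved', 'shortlisted') per group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def adverse_impact_flags(decisions: Iterable[Tuple[str, bool]],
                         threshold: float = 0.8) -> Dict[str, float]:
    """Groups whose selection rate is below `threshold` times the best group's rate,
    mapped to their impact ratio (the four-fifths screening heuristic)."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        return {}  # nobody was selected, so ratios are undefined
    return {g: r / best for g, r in rates.items() if r / best < threshold}


if __name__ == "__main__":
    # Hypothetical loan decisions: (group label, approved?)
    sample = ([("A", True)] * 8 + [("A", False)] * 2
              + [("B", True)] * 4 + [("B", False)] * 6)
    print(adverse_impact_flags(sample))
```

Note that running this check requires group labels, which are often sensitive data themselves, so keep them under the same access controls as the rest of your customer records.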

The next section covers practical ways to reduce both privacy and fairness risks while still protecting valuable data.

Solutions for Mitigating AI Privacy Risks

Protecting your data from AI privacy risks doesn't need to feel like trying to beat the final boss with a wooden sword. Our toolbox contains practical fixes like privacy-by-design approaches and smart data anonymization techniques that work in real business settings.

Embed privacy in AI design

Privacy by design isn't just a fancy catchphrase. It's the digital equivalent of building a fortress before you store the treasure, not after the dragons show up. Smart business leaders bake privacy directly into their AI systems from day one of development.

This approach saves countless headaches down the road. Rather than treating privacy as a bolt-on feature, successful companies weave protection mechanisms into the core architecture of their AI tools. The payoff is substantial for those who get it right: your AI systems become far more resistant to data breaches, and you avoid many of the compliance problems that trouble your competitors.

Ask yourself: "Does this AI really need this specific data point to function?" If not, don't collect it. This simple question has saved our clients millions in potential regulatory fines and preserved customer trust, which, as we all know, is harder to rebuild than your teenager's gaming PC after a power surge.

Privacy-by-Design Checklist:

- Define the essential data elements needed for the AI system.

- Evaluate the necessity of each data point and secure explicit user consent.

- Integrate privacy controls into the design from inception.

- Test data minimization functions to confirm only required data is collected.

- Document implementation steps to meet compliance regulations and information governance standards.
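The first and fourth checklist items can be enforced in code with a per-purpose allowlist. This is a minimal sketch: the purposes and field names in `ALLOWED_FIELDS` are hypothetical, and in practice the list would come from your own data inventory and legal review.

```python
from typing import Any, Dict, FrozenSet, List

# Hypothetical mapping from a processing purpose to the only fields it needs.
ALLOWED_FIELDS: Dict[str, FrozenSet[str]] = {
    "churn_model": frozenset({"customer_id", "signup_month", "plan", "monthly_logins"}),
    "support_triage": frozenset({"ticket_id", "ticket_text", "product"}),
}


def minimize(record: Dict[str, Any], purpose: str) -> Dict[str, Any]:
    """Keeps only the fields approved for `purpose`; unknown purposes are rejected outright."""
    if purpose not in ALLOWED_FIELDS:
        raise ValueError(f"No approved field list for purpose {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


def unexpected_fields(record: Dict[str, Any], purpose: str) -> List[str]:
    """Fields a data source is sending that the purpose does not need (useful in tests and alerts)."""
    return sorted(set(record) - ALLOWED_FIELDS[purpose])
```

Rejecting unknown purposes, rather than passing everything through, means a new AI feature has to get its field list approved before it can receive any data.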

Anonymize and aggregate data

- Strip personal identifiers from datasets before AI processing to prevent individual tracking while maintaining analytical value.

- Apply data masking to replace sensitive details with fictional placeholders that preserve data structure but remove personal connections.

- Add statistical noise to datasets through differential privacy techniques, which protects individual records while enabling meaningful analysis.

- Implement k-anonymity by grouping data points so no single record can be distinguished from at least k-1 other records in the dataset.

- Create synthetic data models that mimic real data patterns without exposing actual user information; synthetic data was projected to make up 60% of AI training data by 2024.

- Aggregate information into summary statistics rather than storing individual data points to reduce privacy risks.

- Establish clear data minimization protocols to collect only necessary information, reducing both liability and security risks.

- Set automatic deletion timelines for raw data after aggregation to limit long-term exposure risks.

- Store aggregated insights rather than raw data whenever possible for business intelligence needs.

- Document your anonymization methods for compliance with regulations like GDPR and CCPA.

- Test anonymized datasets against re-identification attacks to verify their security before use in AI systems.

- Train staff on proper data handling procedures specific to anonymization techniques.

- Use secure computing environments for the anonymization process itself to prevent leaks during transformation.
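Several of these steps fit in a few lines of Python. The sketch below, built on hypothetical field names, pseudonymizes email addresses with a keyed hash (HMAC-SHA256), generalizes age and ZIP code into coarser buckets, and then measures k-anonymity over those quasi-identifiers. Keep in mind that keyed-hash pseudonyms still count as personal data under GDPR, because whoever holds the key can re-link them, and the key shown here is a placeholder that belongs in a secrets manager.

```python
import hashlib
import hmac
from collections import Counter
from typing import Dict, List, Sequence

# Placeholder key: in practice, load it from a secrets manager, never store it with the data.
PSEUDONYM_KEY = b"replace-with-a-secret-key"


def pseudonymize(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replaces a direct identifier with a keyed hash so records can still be joined."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def generalize(record: Dict[str, str]) -> Dict[str, str]:
    """Drops the raw email and coarsens quasi-identifiers (age -> 10-year band, ZIP -> 3 digits)."""
    low = (int(record["age"]) // 10) * 10
    return {
        "pid": pseudonymize(record["email"]),
        "age_band": f"{low}-{low + 9}",
        "zip_prefix": record["zip"][:3] + "**",
        "purchase_total": record["purchase_total"],
    }


def k_anonymity(rows: List[Dict[str, str]], quasi_identifiers: Sequence[str]) -> int:
    """Size of the smallest group of rows sharing identical quasi-identifier values."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return min(groups.values())
```

If `k_anonymity` comes back lower than your target (say, 5), coarsen the buckets further or suppress the rare rows before the dataset goes anywhere near a model.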

Limit data retention periods

- Delete what you don't need immediately. Many businesses hoard data like digital pack rats, but this creates unnecessary risk exposure. Only keep what serves a current business purpose.

- Create a tiered retention schedule based on data sensitivity. Your customer's favorite ice cream flavor might stay longer than their credit card details.

- AI systems often store information indefinitely by default, which can conflict with the storage-limitation principle in regulations such as GDPR. Regular purging of outdated data reduces compliance headaches and potential fines.

- Implement automatic deletion protocols in your AI systems. Your tech team can program these "digital janitors" to clean up data that has exceeded its useful life.

- Document your retention decisions and reasoning. If regulators come knocking, you'll need to justify why you kept certain information for specific periods.

- Short retention periods minimize damage from potential breaches. Hackers can't steal what you've already deleted.

- Train your team to recognize the value of data minimization. Many staff members save everything "just in case," creating privacy liabilities.

- Review retention policies quarterly as regulations evolve rapidly. What was compliant last year might put you at risk today.

- Consider using storage limitation tools that flag data approaching its expiration date. These reminders help maintain good data hygiene across your organization.

- Balance business needs with privacy requirements. Some records must be kept for tax or legal purposes, so work with your legal team to find the right balance.
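A tiered retention schedule and its "digital janitor" can start as simply as this. It is a sketch under assumptions: the categories and periods in `RETENTION` are hypothetical, and real periods should come from your legal team. Records under legal hold are never purged, and `expiring_soon` produces the kind of reminder list a storage limitation tool would flag.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Tuple

# Hypothetical tiered schedule: more sensitive categories are kept for shorter periods.
RETENTION: Dict[str, timedelta] = {
    "payment_card": timedelta(days=30),
    "support_chat": timedelta(days=180),
    "preferences": timedelta(days=730),
}


@dataclass
class Record:
    record_id: str
    category: str
    created_at: datetime
    legal_hold: bool = False  # e.g. records that tax or litigation rules require you to keep


def split_expired(records: List[Record], now: datetime) -> Tuple[List[Record], List[Record]]:
    """Returns (keep, purge). Records in unknown categories are kept for manual review."""
    keep, purge = [], []
    for r in records:
        limit = RETENTION.get(r.category)
        if limit is not None and not r.legal_hold and now - r.created_at > limit:
            purge.append(r)
        else:
            keep.append(r)
    return keep, purge


def expiring_soon(records: List[Record], now: datetime,
                  window: timedelta = timedelta(days=7)) -> List[Record]:
    """Records whose retention period ends within `window`, for reminder reports."""
    soon = []
    for r in records:
        limit = RETENTION.get(r.category)
        if limit is None or r.legal_hold:
            continue
        if now <= r.created_at + limit <= now + window:
            soon.append(r)
    return soon


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    demo = [Record("a", "payment_card", now - timedelta(days=45))]
    print([r.record_id for r in split_expired(demo, now)[1]])
```

A scheduled job would call `split_expired` nightly and hand the purge list to your deletion routine, logging what was deleted and why so the documentation step above comes for free.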

Increase transparency and user control

- Implement clear consent mechanisms that explain exactly how user data will be collected and used in plain language, not buried in legal jargon that requires a law degree to decode.

- Create user-friendly dashboards where customers can view what personal information you store and modify their privacy settings with simple toggles rather than through complicated menus.

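- Regulations like GDPR require a lawful basis (often explicit consent) for processing personal data, and CCPA gives consumers the right to opt out of the sale or sharing of theirs, so build systems that document user permissions in ways that satisfy legal requirements without driving users crazy.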

- Develop AI audit trails that track how algorithms make decisions about user data, making it possible to explain outcomes when customers ask, "Why did your system do that?"

- Set up role-based access controls (RBAC) to limit who in your organization can view sensitive customer information, preventing the "everyone can see everything" problem many companies face.

- Offer data deletion options that actually work, unlike those frustrating "unsubscribe" buttons that seem to do nothing except generate more emails.

- Use plain English privacy notices instead of walls of text that nobody reads but everyone clicks "agree" to anyway.

- AI audit solutions can monitor your compliance with data protection standards, catching issues before they become problems that end up on the front page of tech blogs.

- Provide regular privacy reports to users summarizing what data you've collected and how it's been used, similar to those monthly credit card statements but hopefully less alarming.

- Continuous monitoring of AI outputs helps spot potential data leaks before they turn into full-blown breaches that require awkward apology emails.
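Role-based access control and an audit trail work best together: the first decides who can see what, and the second records every attempt so you can answer customer and regulator questions later. Here is a minimal sketch; the roles, data categories, and in-memory `AUDIT_LOG` are hypothetical stand-ins for your identity provider and an append-only log store.

```python
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical roles and the data categories each role may read.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "support_agent": {"contact_info", "ticket_history"},
    "billing": {"contact_info", "payment_status"},
    "data_scientist": {"aggregated_metrics"},
}

AUDIT_LOG: List[dict] = []  # stand-in for an append-only, tamper-evident log


def can_read(role: str, category: str) -> bool:
    """Unknown roles get no access by default."""
    return category in ROLE_PERMISSIONS.get(role, set())


def read_customer_field(user: str, role: str, category: str, customer_id: str,
                        store: Dict[str, Dict[str, str]]) -> str:
    allowed = can_read(role, category)
    # Every attempt, allowed or not, is recorded so you can answer "who saw what, and when?"
    AUDIT_LOG.append({
        "at": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "category": category,
        "customer": customer_id,
        "allowed": allowed,
    })
    if not allowed:
        raise PermissionError(f"{role} may not read {category}")
    return store[customer_id][category]
```

Logging denied attempts as well as successful ones matters: a burst of denials from one account is often the first sign of a compromised login.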

AI-Powered Security Protocols for Data Protection

AI-powered security protocols act as your data's personal bodyguard, standing watch 24/7 with superhuman vigilance. These smart security systems can spot unusual patterns and potential threats faster than any human team, flagging suspicious activities before they become full-blown data breaches.

Automate data classification and monitoring

Data classification feels like playing "Where's Waldo?" with your sensitive information, except Waldo is your customer's credit card numbers hiding in a sea of spreadsheets. Smart businesses now deploy AI-powered classification tools that automatically spot and tag sensitive data across your systems.

These tools scan your databases in real-time, flagging items that need protection based on custom rules you create. No coding required! I've seen companies cut their compliance workload by 60% after implementing these systems.

The beauty lies in how these tools adapt to regulatory changes without breaking a sweat. Your system can automatically apply GDPR, HIPAA, or PCI DSS rules through intelligent tagging.

Think of it as having a security guard who never sleeps and knows exactly which documents need extra protection. The no-code interface lets your team create and test classification rules without bothering your IT department.

This means faster response to new regulations and fewer late-night concerns about data governance. Real-time monitoring catches issues before they become problems, giving you peace of mind while focusing on growing your business.
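Under the hood, many classification rules boil down to pattern matching plus validation. This simplified Python sketch tags text containing email addresses, US Social Security number patterns, and payment card numbers, using the Luhn checksum to cut false positives on card-like digit strings. The regular expressions are deliberately simple and the tag-to-policy mapping in `TAG_POLICIES` is hypothetical; commercial tools combine many more detectors with machine learning.

```python
import re
from typing import List, Set

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,19}\b")  # 13-19 digits, optional separators

# Hypothetical tag-to-policy mapping; your real rules come from compliance review.
TAG_POLICIES = {"email": "personal_data", "us_ssn": "restricted", "payment_card": "pci_dss"}


def luhn_valid(number: str) -> bool:
    """Luhn checksum used by payment card numbers; ignores spaces and dashes."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    checksum = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        checksum += d
    return len(digits) >= 13 and checksum % 10 == 0


def classify(text: str) -> Set[str]:
    tags = set()
    if EMAIL.search(text):
        tags.add("email")
    if US_SSN.search(text):
        tags.add("us_ssn")
    if any(luhn_valid(m.group()) for m in CARD_CANDIDATE.finditer(text)):
        tags.add("payment_card")
    return tags


def required_policies(text: str) -> List[str]:
    return sorted(TAG_POLICIES[tag] for tag in classify(text))
```

The Luhn check is what keeps an order reference like "1234 5678 9012 3456" from being mislabeled as a credit card and flooding your team with false alerts.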

Strengthen access control with behavioral analytics

Gone are the days when a simple password kept your business data safe. Modern access control systems now leverage behavioral analytics to spot unusual activity before damage occurs. AI tools track how users normally interact with your systems, creating a digital fingerprint of typical behaviors.

The system flags unusual patterns like logging in at 3 AM when someone typically works 9-5, or suddenly downloading massive files from accounting when they work in marketing. These smart systems continuously assess user actions and can automatically trigger extra security steps like multi-factor authentication when something seems off.

The real magic happens in real-time threat detection. Your security system becomes like that friend who notices when someone at the party doesn't quite fit in. AI monitoring tools can spot and respond to suspicious activities as they happen, not days later during a security review.

For example, if an employee's account suddenly tries accessing sensitive customer data they've never needed before, the system can block the attempt and alert your security team immediately.
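The decision logic can be illustrated with a deliberately simple, rule-based stand-in for the machine learning models commercial tools use. In this sketch (the class name, risk scores, and thresholds are all assumptions), the monitor learns each user's usual login hours and resources, asks for MFA on a mild deviation, and blocks first-time access to sensitive data outright, mirroring the examples above.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class Baseline:
    usual_hours: Set[int] = field(default_factory=set)
    usual_resources: Set[str] = field(default_factory=set)


class AccessMonitor:
    """Toy behavioral baseline: learns usual hours and resources per user,
    then scores new access attempts by how far they deviate."""

    def __init__(self) -> None:
        self.baselines: Dict[str, Baseline] = defaultdict(Baseline)

    def learn(self, user: str, hour: int, resource: str) -> None:
        baseline = self.baselines[user]
        baseline.usual_hours.add(hour)
        baseline.usual_resources.add(resource)

    def decide(self, user: str, hour: int, resource: str, sensitive: bool) -> str:
        baseline = self.baselines[user]
        risk = 0
        if hour not in baseline.usual_hours:
            risk += 1  # e.g. a 3 AM login from a 9-to-5 employee
        if resource not in baseline.usual_resources:
            risk += 3 if sensitive else 1  # never-before-needed sensitive data is high risk
        if risk >= 3:
            return "block_and_alert"
        if risk >= 1:
            return "require_mfa"
        return "allow"
```

In a real deployment, the baseline would be learned from weeks of logs and the scores from a statistical model, but the allow / step-up / block ladder is the same.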

This proactive approach stops problems before they grow into full-blown security challenges. Next, we examine how AI tools detect threats in real time across your whole network.

Detect threats in real time with AI-powered tools

AI-powered security tools now act as your digital night watchmen, spotting threats while they happen instead of after the damage is done. These systems continuously scan your network traffic, user behaviors, and data access patterns for anything suspicious.

I once set up real-time monitoring for a local HVAC company that caught a ransomware attempt before it locked up their customer database. The system flagged unusual file encryption activities at 3 AM, automatically isolated the affected workstation, and sent alerts to the security team.

The tools learn what is normal for your business and spot deviations effectively. Research shows AI security solutions detect potential threats with high accuracy, giving you precious time to respond before data gets compromised.

Unlike traditional security that relies on known threat signatures, AI adapts to new attack patterns through machine learning. This proactive approach helps protect your business against zero-day exploits and sophisticated attacks that bypass conventional defenses.
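Here is a rough sketch of the "learn what is normal, flag deviations" idea: a rolling z-score over a metric such as files modified per minute on one workstation, the kind of signal that spikes when ransomware starts encrypting files. The window size and threshold are assumptions to tune, and a production tool would watch many signals at once; this only shows the core mechanic.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque


class SpikeDetector:
    """Flags a reading far above its recent baseline using a rolling z-score,
    a simple stand-in for the ML models commercial tools use."""

    def __init__(self, window: int = 30, threshold: float = 4.0, min_history: int = 10) -> None:
        self.history: Deque[float] = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history

    def observe(self, value: float) -> bool:
        anomalous = False
        if len(self.history) >= self.min_history:
            mu = mean(self.history)
            sigma = pstdev(self.history) or 1.0  # avoid dividing by zero on a flat baseline
            anomalous = (value - mu) / sigma > self.threshold
        if not anomalous:
            self.history.append(value)  # keep attack readings out of the baseline
        return anomalous
```

When `observe` returns `True`, your response playbook (isolating the workstation, alerting the team) takes over. Anomalous readings are kept out of the baseline so an ongoing attack cannot teach the detector that it is normal.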

For small businesses without dedicated security teams, these automated guardians provide enterprise-level protection without requiring advanced cybersecurity expertise.

Apply differential privacy techniques

Differential privacy acts like your data's personal bodyguard, adding just enough noise to protect individual information while still letting you extract valuable insights. Think of it as placing your customer data in witness protection: their identity stays hidden, but their story still helps solve the case.

The Laplace and Gaussian mechanisms are the secret sauce in this privacy recipe: they add carefully calibrated random noise to query results, so the output reveals very little about any single person and resists re-identification attempts. Many businesses struggle to balance data utility and protection, often leaning too far toward either useless data or dangerous exposure.

Your customers now demand both personalization and privacy. By implementing differential privacy, you secure sensitive information without sacrificing analytical power.

This approach directly boosts customer trust and helps you meet regulations like GDPR and CCPA. Its flexibility allows you to adjust privacy levels based on your specific needs.
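Here is what the Laplace mechanism looks like for the simplest query, a count. Adding or removing one person changes a count by at most 1, so adding Laplace noise with scale 1/ε makes the result ε-differentially private; a smaller ε (the "privacy level" you adjust) means more noise and stronger privacy. This is a teaching sketch: Python's `random` module is not cryptographically secure and naive floating-point noise has known weaknesses, so production systems should use a vetted differential privacy library.

```python
import random
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Samples Laplace(0, scale) as the difference of two exponential draws with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(values: Iterable[T], predicate: Callable[[T], bool], epsilon: float,
             rng: Optional[random.Random] = None) -> float:
    """Epsilon-differentially private count via the Laplace mechanism.
    A count has sensitivity 1, so the noise scale is 1 / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    ages = [23, 35, 41, 52, 29, 67, 38]
    # How many customers are over 40? The answer is noisy by design.
    print(round(dp_count(ages, lambda a: a > 40, epsilon=1.0), 1))
```

Each noisy answer spends part of your privacy budget, so repeated queries on the same data should be tracked rather than issued freely.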

For small business owners, this means you can finally compete with big tech's data practices without their massive security budgets.

Encrypt data throughout the processing cycle

While differential privacy masks individual data points, encryption acts as your data's invisible shield throughout its entire journey. Think of encryption as putting your sensitive AI data in a safe that only holders of the right key can unlock.

Data encryption transforms your information into unreadable ciphertext that stays protected as it is collected, transmitted, and stored; keeping it protected while it is actively being processed requires additional techniques such as confidential computing or homomorphic encryption. This protection matters because AI systems face threats at every stage, from data supply chain vulnerabilities to compromised datasets that can corrupt your models.

I have seen many businesses focus on securing data only during storage, leaving it exposed during processing. Your encryption strategy must cover all touchpoints in the AI lifecycle.

This means implementing end-to-end encryption protocols that safeguard data from the moment it enters your system until it's deleted. Pairing strong encryption with verified data sources creates a double layer of protection.
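Encryption itself should come from a vetted library rather than hand-rolled code, but the "verified data sources" half of that double layer is easy to sketch with Python's standard library. The idea, assuming your datasets arrive as files in a directory: record a SHA-256 fingerprint of every file when you receive it from a trusted source, then refuse to train if anything has been modified, removed, or added. The manifest itself needs protection too, for example by storing it separately or signing it.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hashes a file in chunks so large datasets don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> Dict[str, str]:
    """Fingerprints every file, e.g. right after receiving the dataset from a trusted source."""
    return {str(p.relative_to(data_dir)): sha256_file(p)
            for p in sorted(data_dir.rglob("*")) if p.is_file()}


def verify_manifest(data_dir: Path, manifest: Dict[str, str]) -> List[str]:
    """Returns a list of problems; an empty list means every file matches its recorded hash."""
    current = build_manifest(data_dir)
    problems = []
    for name, expected in manifest.items():
        if name not in current:
            problems.append(f"missing: {name}")
        elif current[name] != expected:
            problems.append(f"modified: {name}")
    for name in sorted(current.keys() - manifest.keys()):
        problems.append(f"unexpected: {name}")
    return problems
```

A training pipeline would call `verify_manifest` as its first step and stop if the returned list is not empty.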

The result is AI systems that gain significant protection against external attacks and internal data leaks, keeping your company safe from data breach headlines that cause sleepless nights.

Ensuring Regulatory Compliance in AI Systems

Regulatory compliance in AI systems demands a proactive approach rather than reactive fixes after problems appear. Smart companies use AI-powered tools to scan for compliance gaps across GDPR, CCPA, and the EU AI Act before violations occur.

Automate compliance monitoring

AI systems in healthcare need constant compliance checks, but who has time to manually review thousands of data points? Smart businesses now deploy AI-powered compliance monitoring tools that work 24/7.

These digital watchdogs scan for privacy issues, flag potential problems, and generate detailed audit reports without human intervention. I once tried tracking compliance manually for a client's AI system, and I aged about five years in one month! Automated systems reduced their workload by 85% while catching vulnerabilities human reviewers often miss.

Compliance monitoring tools do not need breaks. They continuously analyze your AI operations against current regulations like GDPR and CCPA, providing real-time alerts when something seems off.

For local business owners, this means less time worrying about regulatory headaches and more time focusing on growth. These systems adapt as regulations change, creating a solid foundation for your AI privacy framework.
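At its core, automated compliance monitoring is a set of rules run continuously against an inventory of your data assets. This sketch shows the shape of it; the `DataAsset` fields and the four rules are hypothetical simplifications, not a complete reading of GDPR or CCPA, and a real tool would pull the inventory from your systems and run on a schedule.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class DataAsset:
    name: str
    contains_personal_data: bool
    legal_basis: Optional[str]     # e.g. "consent", "contract"; None if undocumented
    encrypted_at_rest: bool
    retention_days: Optional[int]  # None means "kept indefinitely"
    high_risk: bool = False        # hypothetical flag for high-risk processing
    dpia_done: bool = False


# Each rule: (finding message, check that returns True when the rule is violated).
Rule = Tuple[str, Callable[[DataAsset], bool]]

RULES: List[Rule] = [
    ("personal data without a documented legal basis",
     lambda a: a.contains_personal_data and not a.legal_basis),
    ("personal data not encrypted at rest",
     lambda a: a.contains_personal_data and not a.encrypted_at_rest),
    ("no retention limit set",
     lambda a: a.contains_personal_data and a.retention_days is None),
    ("high-risk system without a DPIA on file",
     lambda a: a.high_risk and not a.dpia_done),
]


def audit(assets: List[DataAsset]) -> List[str]:
    """One finding per (asset, violated rule), ready for an alert or an audit report."""
    return [f"{a.name}: {message}" for a in assets for message, violated in RULES if violated(a)]
```

Because the rules are plain data, updating them when a regulation changes is a small, reviewable edit rather than a rewrite.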

Regular audits of AI privacy models form the next critical step in maintaining a bulletproof compliance strategy.

Conduct regular audits of AI privacy models

Regular privacy audits act as your business's digital immune system. Just like you check security cameras or lock your doors, AI systems need routine checkups too. These audits verify that your AI tools follow regulations and ethical standards, which helps protect your company from hefty fines and reputation damage.

Too many business owners skip this step, only to face compliance challenges later. The audit process involves understanding current regulations, reviewing data collection methods, validating AI models, and spotting potential risks early.

Documentation proves accountability and transparency if regulators come knocking. As regulations change, continuous monitoring works better than a once-a-year review.

Your AI systems evolve daily, so your compliance strategies should adapt too. The goal is progress toward responsible AI use that protects both your business and your customers.

Planning for GDPR, CCPA, and EU AI Act Compliance

- Map your data flows to identify where personal information enters your AI systems and how it moves through your organization.

- Classify your AI systems according to the EU AI Act risk levels (unacceptable, high, limited, or minimal) to determine compliance requirements.

- Document the legal basis for processing personal data in your AI applications under GDPR and CCPA frameworks.

- Create clear data retention policies that limit storage periods to what is necessary for current business purposes.

- Build automated systems to handle data subject requests such as access, deletion, and portability within required timeframes.

- Implement technical safeguards including encryption, access controls, and data minimization techniques across all AI operations.

- Develop transparent AI disclosures that explain system operations in plain language for users and regulators.

- Train your team on compliance requirements specific to each regulation to avoid costly mistakes.

- Set up consent management systems that capture and honor user preferences about data usage.

- Conduct Data Protection Impact Assessments (DPIAs) for high-risk AI applications before deployment.

- Create incident response plans for potential data breaches involving AI systems.

- Track regulatory changes across jurisdictions to stay ahead of compliance requirements.

- Budget for potential penalties: fines under the EU AI Act can reach €35 million or 7% of worldwide annual turnover, whichever is higher, for the most serious violations.

- Disclose copyrighted sources used in generative AI applications to avoid intellectual property issues.

- Implement measures to prevent the generation of illegal content in AI systems.

- Establish regular compliance reporting to leadership with clear metrics and improvement plans.
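Deadline tracking for data subject requests is a good first automation target. This sketch assumes GDPR's baseline of one month from receipt (extendable by two further months) and CCPA's 45 calendar days (extendable by another 45). How to count a "month" when the matching date doesn't exist, such as 31 January plus one month, is handled here by clamping to the last day of the month, which is an assumption to confirm with your legal team.

```python
import calendar
from datetime import date, timedelta


def add_months(d: date, months: int) -> date:
    """Same day N months later, clamped to the month's last day (31 Jan + 1 month -> 28/29 Feb)."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def response_deadline(regime: str, received: date, extended: bool = False) -> date:
    """Latest response date for an access, deletion, or portability request."""
    if regime == "GDPR":
        return add_months(received, 3 if extended else 1)  # 1 month, +2 if extended
    if regime == "CCPA":
        return received + timedelta(days=90 if extended else 45)  # 45 days, +45 if extended
    raise ValueError(f"unknown regime: {regime}")
```

Feeding every incoming request through `response_deadline` and alerting a few days before the date turns the data subject request item above from a memory exercise into a queue.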

Building an Ethical AI Framework

Building an ethical AI framework starts with asking tough questions about data usage before any code is written. Your team needs clear guidelines on privacy-respecting practices that protect user data while still delivering value. No superhero cape is required for this everyday responsibility.

Cultivate a culture of ethical AI use

Creating an ethical AI culture starts with daily habits, not just fancy policies. Tech leaders who excel at this incorporate transparency and fairness into their operations. Companies have faced setbacks when ethics are treated as an afterthought. Your team needs clear guidelines about how AI systems should protect privacy and avoid bias.

Google, Microsoft, and IBM did not become leaders in AI ethics by chance; they integrated these principles into their development processes from the start. A multidisciplinary approach helps catch potential issues before they affect your customers. Team members from various departments should work together to assess if AI systems explain their decisions clearly, avoid discrimination, and handle data in ways customers expect.

Understand the impact of regulations

Regulations like the EU AI Act provide a framework for addressing AI privacy concerns. The Act categorizes AI systems into risk levels, from "unacceptable" to "minimal," which guides compliance planning. Business leaders must grasp these distinctions to prevent costly penalties and reputation damage.

Regulations serve as safeguards rather than obstacles. True compliance involves integrating these requirements into AI systems early on. Privacy-enhancing techniques such as differential privacy and homomorphic encryption allow data analysis without exposing personal details.

AI-driven compliance monitoring tools automatically scan for issues, reducing manual oversight while advancing security. Companies gain a competitive edge by viewing regulations as opportunities for innovation rather than barriers to growth. The aim is to build trust with customers who value privacy.

Conclusion

AI privacy protection isn't just tech jargon; it's your business's digital armor in an increasingly vulnerable landscape. We explored how embedding privacy into AI design, limiting data retention, and applying encryption throughout processing cycles can shield sensitive information from prying eyes.

Smart businesses now use AI-powered tools to automate security monitoring while maintaining regulatory compliance with GDPR and CCPA. The stakes are high, but the path forward does not demand a computer science degree.

Start small by auditing your current data practices, then gradually implement these protocols to build customer trust. Your business deserves protection that works as hard as you do, and these AI security measures offer exactly that without straining your resources or sanity.

The future belongs to companies that protect data while harnessing AI's full potential.

FAQs

1. What are AI privacy protection protocols?

AI privacy protection protocols are rules that shield your data from prying eyes. They work like a digital fortress around your personal info when AI systems process it. These safeguards limit who can see your data and how companies use it.

2. How can I tell if an AI system has good data security?

Look for systems with encryption, access controls, and regular security audits. Good AI vendors will be open about their security measures and will not hide behind fancy jargon. They should also have clear policies about data storage and deletion.

3. What rights do I have over my data used in AI systems?

You have the right to know what data is collected, request copies, and ask for deletion in many places. Laws like GDPR in Europe and CCPA in California back these rights. In many cases, companies need your consent or another valid legal basis before using your data to train their AI models.

4. Why should businesses care about AI security protocols?

Data breaches can be very costly and damage customer trust quickly. Strong AI security helps protect your company's reputation and assists in avoiding hefty fines from regulators. Sound data practices also support innovation by offering a secure foundation for AI development.

Disclaimer: This content is informational and not a substitute for professional advice. The recommendations are based on best practices in Data Protection, Privacy Enhancing Technology, Cybersecurity, Data Governance, Compliance, Anonymization, Encryption, Responsible AI, Risk Mitigation, AI Regulation, Information Security, Risk Management, and Machine Learning Ethics.


References and Citations


- https://www.digitalocean.com/resources/articles/ai-and-privacy (2023-12-15)

- https://hai.stanford.edu/news/privacy-ai-era-how-do-we-protect-our-personal-information (2024-03-18)

- https://www.leewayhertz.com/data-security-in-ai-systems/

- https://www.zendesk.com/blog/ai-transparency/ (2024-01-18)

- https://www.eweek.com/artificial-intelligence/ai-privacy-issues/ (2024-11-27)

- https://ovic.vic.gov.au/privacy/resources-for-organisations/artificial-intelligence-and-privacy-issues-and-challenges/

- https://www.trigyn.com/insights/ai-and-privacy-risks-challenges-and-solutions (2024-02-21)

- https://www.ey.com/en_fi/insights/consulting/mitigating-ai-privacy-risks-strategies-for-trust-and-compliance

- https://jis-eurasipjournals.springeropen.com/articles/10.1186/s13635-025-00203-9

- https://blog.qualys.com/product-tech/2025/02/07/ai-and-data-privacy-mitigating-risks-in-the-age-of-generative-ai-tools

- https://www.getprimary.com/ai-classification

- https://lumenalta.com/insights/the-impact-of-ai-in-data-privacy-protection

- https://www.researchgate.net/publication/386277073_AI-driven_threat_intelligence_for_real-time_cybersecurity_Frameworks_tools_and_future_directions

- https://dialzara.com/blog/privacy-preserving-ai-techniques-and-frameworks/ (2024-05-23)

- https://www.exabeam.com/explainers/ai-cyber-security/ai-cyber-security-securing-ai-systems-against-cyber-threats/

- https://www.ic3.gov/CSA/2025/250522.pdf

- https://www.researchgate.net/publication/390742929_Automated_Compliance_Monitoring_in_AI_Healthcare_Systems (2025-04-15)

- https://dialzara.com/blog/ai-compliance-audit-step-by-step-guide/

- https://www.researchgate.net/publication/384227053_Data_Protection_and_AI_Navigating_Regulatory_Compliance_in_AI-_Driven_Systems/download (2024-09-21)

- https://transcend.io/blog/ai-ethics (2023-10-20)

- https://www.researchgate.net/publication/383664652_Data_Ethics_and_AI_Regulation_Developing_Comprehensive_Frameworks_for_Protecting_Human_Rights

- https://community.trustcloud.ai/docs/grc-launchpad/grc-101/governance/data-privacy-and-ai-ethical-considerations-and-best-practices/