AI Infrastructure Requirements Planning Guide

Understanding AI Integration

Creating AI infrastructure is similar to building a gaming PC with numerous modifications. You need the appropriate components, adequate cooling, and sufficient power to run resource-intensive applications.

I'm Reuben "Reu" Smith, founder of WorkflowGuide.com, and I've observed businesses grappling with AI implementation for over a decade. Many companies adopt AI hastily without proper infrastructure planning.

The outcome? Costly systems that underperform or fail entirely.

AI infrastructure combines specialized hardware like GPUs and TPUs with high-speed storage, networking solutions, and software frameworks such as TensorFlow and PyTorch. This technical foundation supports everything from simple automation to complex machine learning models. For modern enterprises, strong AI infrastructure represents the difference between competitive advantage and digital stagnation.

Companies encounter significant challenges when building these systems. Scalability issues emerge as data volumes increase. Cost management becomes complex without cloud-based resources.

Legacy system integration often creates technical debt. Mirantis k0rdent virtualization offers a solution through unified management of VMs and containers. Flexential provides another option with cloud computing solutions that support AI-driven growth.

The skills gap presents an additional challenge. Cross-functional collaboration among data scientists, engineers, and DevOps teams requires ongoing upskilling. Learning platforms and expert recruitment help address these knowledge deficits.

This approach reinforces a comprehensive AI Strategy and supports Infrastructure Optimization through skill development.

Real-world examples demonstrate how automated retail analytics, autonomous vehicle fleets, and financial fraud detection all benefit from scalable multi-stack approaches.

This guide covers practical steps for AI infrastructure planning: assessing use cases, evaluating data requirements, planning architecture, selecting deployment models, and implementing the security measures that safeguard sensitive data in your systems.

We'll explore how to avoid common pitfalls while maximizing your AI investment. Let's create something effective.

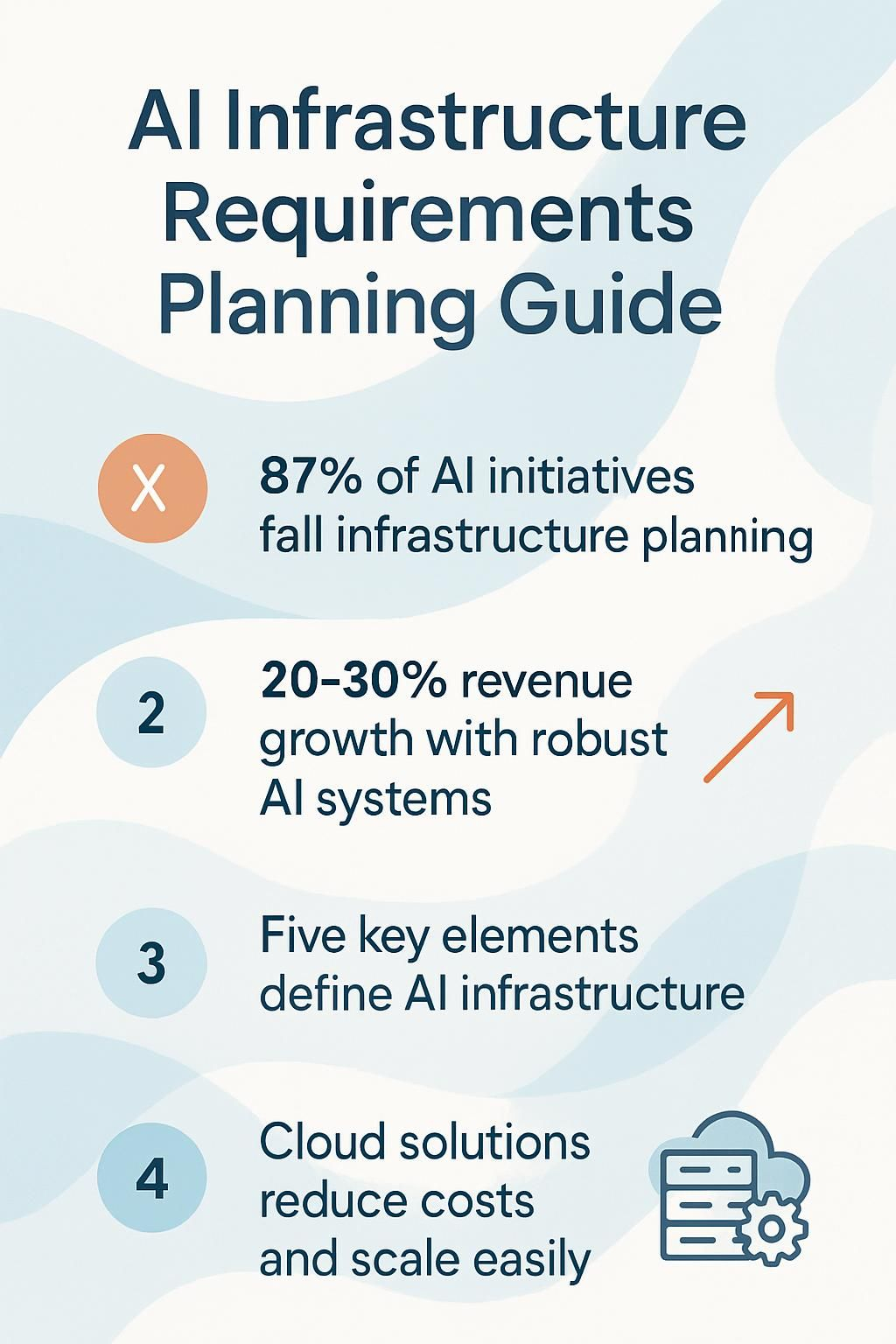

Key Takeaways

- 87% of AI initiatives fail due to poor infrastructure planning, making proper setup crucial for success.

- AI infrastructure combines five key elements: specialized computing hardware, container solutions, orchestration platforms, data processing frameworks, and monitoring tools.

- Companies with strong AI systems outperform competitors by 20-30% in revenue growth according to McKinsey research.

- Cloud-based solutions offer pay-as-you-go options that reduce upfront costs while providing the flexibility to scale resources based on actual usage.

- MLOps frameworks help teams deploy and monitor machine learning models in production by combining DevOps principles with AI workflows.

Understanding AI Infrastructure

AI infrastructure forms the backbone of any successful machine learning project, just like a gaming PC needs the right components to run Cyberpunk 2077 without crashing. Think of it as building your dream gaming rig, but instead of running games, you're training models that can predict customer behavior or spot manufacturing defects before they happen.

This section covers key aspects of IT Infrastructure and System Architecture that lay the foundation for a strong Technology Strategy and guide Infrastructure Optimization for peak performance.

Definition and Core Concepts

AI infrastructure forms the foundation that powers all your artificial intelligence initiatives. Think of it as the digital nervous system that connects specialized hardware like GPUs and TPUs with software frameworks such as TensorFlow and PyTorch.

This backbone supports everything from basic machine learning models to complex real-time recommendation engines. Much like how a gaming PC needs specific components to run the latest titles smoothly, AI systems require purpose-built architecture to process massive datasets efficiently.

Building AI infrastructure is like constructing a race car. You need specialized parts working in perfect harmony, not just a souped-up family sedan.

At its core, AI infrastructure combines five critical elements: specialized computing hardware, container solutions like Docker, orchestration platforms such as Kubernetes, data processing frameworks, and monitoring tools.

These components work together to create a system that can train models, make predictions, and scale as your needs grow. The magic happens when these elements integrate seamlessly, allowing your data scientists to focus on solving business problems rather than wrestling with technical limitations.

This synergy drives Infrastructure Optimization and supports a forward-thinking Technology Strategy.

Importance of AI Infrastructure in Modern Enterprises

Now that we've covered what AI infrastructure actually is, let's talk about why your business can't afford to ignore it. Modern enterprises face brutal competition in every market.

AI infrastructure serves as the backbone that powers intelligent applications, data analysis, and automated decision-making across your organization. Companies with strong AI systems process customer data faster, spot market trends earlier, and adapt their strategies before competitors even notice changes.

This competitive edge translates directly to your bottom line. A McKinsey study showed businesses with advanced AI infrastructure outperform others by 20-30% in revenue growth, simply because they can scale operations and respond to market shifts with lightning speed.

Your AI infrastructure isn't just another IT expense; it's your business's nervous system. Think of traditional infrastructure as a pickup truck: reliable but limited. AI infrastructure is more like a shape-shifting Transformer that changes to meet each new challenge.

This flexibility allows you to handle complex models and massive data volumes without crashing your systems or your budget. The right setup optimizes resource usage while maintaining fault tolerance, keeping your services running even when individual components fail.

For local business owners, this means you can finally compete with bigger players who previously had all the technological advantages. Cloud-based options now give you enterprise-level capabilities without requiring a second mortgage on your building to pay for it.

Key Components of AI Infrastructure

Building AI infrastructure requires specific components that work together like a well-oiled machine. These building blocks form the foundation of your AI systems and determine how effectively your models will run in production.

Compute Resources and Processing Units

The beating heart of any AI system lies in its compute resources. GPUs and TPUs now dominate the AI landscape, offering massive parallel processing power that standard CPUs simply can't match.

These specialized processors crunch through complex machine learning algorithms at lightning speed, turning what used to be week-long training sessions into hours or even minutes. I've seen local businesses waste thousands on overpowered hardware because someone convinced them they needed "enterprise-grade everything" - don't fall into that trap! Your infrastructure needs should align with your actual workloads, not what looks impressive in a server room.

Building AI infrastructure is like assembling a gaming PC - except instead of maximizing frame rates, you're optimizing for model training throughput.

Computational power requirements vary dramatically based on your AI goals. For basic predictive analytics, you might leverage cloud-based IaaS solutions with on-demand resources rather than investing in expensive on-premises hardware.

High-speed networking creates the backbone that moves data between storage and processing units, with bandwidth often becoming the hidden bottleneck in many setups. Resource management tools help balance workloads across your infrastructure, preventing the all-too-common scenario where half your expensive hardware sits idle while the other half melts down under pressure.

Smart virtualization strategies can also maximize hardware utilization, giving your AI initiatives room to grow without breaking the bank.
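To make the workload-balancing idea concrete, here is a minimal sketch of greedy "least-loaded" placement: each job goes to whichever worker has the least work queued so far. The job names, GPU-hour estimates, and worker names are made up for illustration, and production schedulers weigh far more than a single cost number.

```python
import heapq

def assign_jobs(job_costs, worker_names):
    """Greedy least-loaded placement.

    job_costs: dict of job name -> estimated cost (e.g. GPU-hours).
    Returns (placement, loads), both keyed by worker name.
    """
    # Min-heap of (current_load, worker_name); ties break on the name.
    heap = [(0.0, name) for name in sorted(worker_names)]
    heapq.heapify(heap)
    placement = {name: [] for name in worker_names}

    # Placing the largest jobs first tends to give a more even spread.
    for job, cost in sorted(job_costs.items(), key=lambda kv: -kv[1]):
        load, name = heapq.heappop(heap)   # least-loaded worker right now
        placement[name].append(job)
        heapq.heappush(heap, (load + cost, name))

    loads = {name: load for load, name in heap}
    return placement, loads

# Hypothetical jobs with estimated GPU-hours.
jobs = {"train-a": 8, "train-b": 6, "tune-c": 4, "batch-d": 2}
placement, loads = assign_jobs(jobs, ["gpu-0", "gpu-1"])
```

In this toy run both workers end up with the same total load, which is the opposite of the "half idle, half melting" scenario described above.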

Data Storage and Management Solutions

Data storage forms the backbone of any serious AI infrastructure. Think of it as your digital pantry, but instead of storing snacks, you're storing petabytes of training data that feed your hungry AI models.

At WorkflowGuide.com, we've seen businesses struggle with makeshift storage solutions that buckle under the weight of machine learning workloads. Your AI systems need high-speed storage that allows quick data retrieval without bottlenecks.

This isn't just about buying bigger hard drives; it requires strategic planning around data lakes, cloud storage options, and big data architectures that scale with your growing needs.

I once tried running complex models on standard business storage, and my computer gave me the digital equivalent of "I can't even."

Security and governance can't be afterthoughts in your data management strategy. Many tech leaders focus on processing power but forget that data breaches can sink an AI project faster than you can say "compliance violation." Your storage solutions must maintain data integrity while facilitating real-time processing for those time-sensitive AI applications.

We recommend continuous monitoring systems that catch issues before they become problems. MLOps integration also plays a crucial role, connecting your data pipelines directly to your machine learning workflows.

This creates a seamless environment where data scientists spend less time hunting for datasets and more time building models that drive actual business results. For local business owners, even modest AI implementations require thoughtful data management to avoid the "garbage in, garbage out" syndrome that plagues many projects.
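One simple way to check data integrity before a training run is to record a checksum for every dataset file and compare later. The sketch below uses Python's standard `hashlib`; the file names and contents are invented, and the in-memory dictionary stands in for whatever storage system you actually use.

```python
import hashlib

def sha256_of(data: bytes) -> str:
    """Return the SHA-256 hex digest of a blob of bytes."""
    return hashlib.sha256(data).hexdigest()

def build_manifest(files: dict) -> dict:
    """Map each file name to the checksum of its current contents."""
    return {name: sha256_of(content) for name, content in files.items()}

def find_corrupted(files: dict, manifest: dict) -> list:
    """List files that are missing or whose contents no longer match."""
    return sorted(
        name
        for name, expected in manifest.items()
        if name not in files or sha256_of(files[name]) != expected
    )

# Hypothetical dataset snapshot (name -> raw bytes).
dataset = {"orders.csv": b"id,total\n1,9.99\n", "labels.csv": b"id,label\n1,ok\n"}
manifest = build_manifest(dataset)

# Simulate silent corruption of one file after the manifest was taken.
dataset["labels.csv"] = b"id,label\n1,0k\n"
damaged = find_corrupted(dataset, manifest)
```

Running a check like this as the first step of a pipeline is a cheap guard against the "garbage in, garbage out" problem.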

Networking and Connectivity Requirements

Your AI systems need highways, not dirt roads. Networks form the backbone of any AI infrastructure, connecting your compute resources and allowing data to flow freely between systems.

High-speed networks slash latency issues, which matters tremendously when your machine learning models process millions of calculations per second. Think of it like playing an online game with lag, except instead of missing that critical headshot, your business misses critical insights.

Many business owners skimp on networking components, then wonder why their fancy new AI tools crawl along like a turtle with a sprained ankle.

Bandwidth requirements climb steeply as AI systems scale up. Your network must support distributed computing environments where multiple machines work together on complex problems.

Teams in different locations need to collaborate on models and datasets without frustrating delays. The network also bridges your on-premises systems with cloud resources and edge computing devices.

This connectivity creates a flexible ecosystem that adapts to changing data volumes and processing demands. Smart business leaders plan their network infrastructure with the same care they give to selecting GPUs or storage solutions, recognizing that even the most powerful AI engine stalls without proper data highways connecting everything together.
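A quick back-of-the-envelope calculation shows why bandwidth deserves this attention. The sketch below estimates how long it takes to move a dataset over a link; the 80% efficiency factor is an assumption standing in for protocol overhead and contention, not a measured value.

```python
def transfer_hours(dataset_gb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Estimate hours needed to move dataset_gb gigabytes over a link.

    link_gbps is the nominal link speed in gigabits per second.
    efficiency is the assumed fraction of that speed actually achieved.
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link speed must be positive and efficiency in (0, 1]")
    gigabits = dataset_gb * 8                      # bytes -> bits
    seconds = gigabits / (link_gbps * efficiency)
    return seconds / 3600

# Moving a hypothetical 10 TB training set:
slow = transfer_hours(10_000, link_gbps=1)    # roughly 28 hours
fast = transfer_hours(10_000, link_gbps=10)   # roughly 2.8 hours
```

Under these assumptions, the same dataset that ties up a 1 Gbps link for more than a day moves in under three hours at 10 Gbps.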

Security and Compliance Frameworks

AI systems handle mountains of sensitive data, making security frameworks non-negotiable for your business. Think of these frameworks as your digital fortress, complete with role-based access control (RBAC) that limits who touches what data, like giving different security clearance levels to your team members.

Data governance protocols act as your rulebook for handling information, while encryption shields your AI training data from prying eyes. I've seen too many businesses treat security as an afterthought, only to scramble when facing a breach or compliance audit.

GDPR and other regulations don't care if you're "planning to get compliant soon." Your AI infrastructure needs these protections baked in from day one. Smart business leaders implement access management systems that track who interacts with AI systems and why.

This creates an audit trail that proves valuable during compliance checks. Information security isn't just about avoiding fines; it's about maintaining customer trust. Each cybersecurity measure you implement reduces risk exposure and strengthens your governance framework.

The cost of security might seem steep now, but it pales compared to the price of a data breach later.
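The combination of role-based access control and an audit trail can be sketched in a few lines. The roles, permissions, and user names below are hypothetical, and a real deployment would lean on your identity provider or cloud IAM rather than an in-process dictionary.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role -> permitted actions mapping.
ROLE_PERMISSIONS = {
    "data_scientist": {"read_training_data", "train_model"},
    "ml_engineer": {"read_training_data", "train_model", "deploy_model"},
    "analyst": {"read_predictions"},
}

@dataclass
class AccessController:
    user_roles: dict                      # user name -> role name
    audit_log: list = field(default_factory=list)

    def check(self, user: str, action: str, reason: str) -> bool:
        """Decide whether user may perform action, and record who, what, and why."""
        role = self.user_roles.get(user)
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "reason": reason,
            "allowed": allowed,
        })
        return allowed

controller = AccessController({"dana": "data_scientist", "eli": "ml_engineer"})
dana_can_deploy = controller.check("dana", "deploy_model", "ship churn model")
eli_can_deploy = controller.check("eli", "deploy_model", "ship churn model")
```

Note that denied requests are logged too; during an audit, the attempts that were blocked are often as informative as the ones that succeeded.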

Challenges in Building AI Infrastructure

Building AI infrastructure comes with major hurdles: scaling systems as your needs grow, keeping costs from spiraling out of control, and connecting new tech to old systems that refuse to play nice. Read on to see how smart planning turns these roadblocks into speed bumps.

Scalability Issues

AI systems face major growing pains as they expand. Your infrastructure might work perfectly with small datasets and simple models, but throw in enterprise-level demands and watch it crumble faster than my attempt at building IKEA furniture without instructions.

Companies often hit a wall when models grow in complexity and data volumes multiply. The infrastructure that handled your initial AI experiments struggles to keep up with production workloads, creating bottlenecks that slow down innovation and frustrate teams.

I've seen startups celebrate early AI wins only to panic when their systems buckle under real-world usage.

Proper scalability planning saves you from the "success disaster" where your AI works too well and crashes your systems. Load balancing becomes critical as demand fluctuates, preventing resource hogging by hungry algorithms.

Smart resource allocation maximizes performance while keeping costs in check, a balancing act that requires both technical know-how and business sense. Tools like Docker and Kubernetes support automation in deployment and scaling, but implementing them requires strategic planning.
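As an illustration of automated scaling, the sketch below follows the calculation the Kubernetes Horizontal Pod Autoscaler documentation describes: desired replicas = ceil(current replicas × current metric / target metric), skipped when the ratio is close to 1. The replica limits and metric values here are assumptions chosen for the example.

```python
import math

def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Return how many replicas to run for the observed load."""
    ratio = current_metric / target_metric
    # Small deviations from the target are ignored to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds so a spike cannot scale without limit.
    return max(min_replicas, min(max_replicas, wanted))

# Four inference replicas averaging 90% CPU against a 60% target.
scale_up = desired_replicas(4, current_metric=90, target_metric=60)
# The same four replicas at 30% CPU can shrink.
scale_down = desired_replicas(4, current_metric=30, target_metric=60)
```

The `max_replicas` cap is what protects the budget during a "success disaster": demand can spike, but spend stays bounded.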

The skills gap in managing these scaling challenges creates another hurdle that businesses must address before moving to the next infrastructure concern.

Cost Management Concerns

AI infrastructure costs can hit your budget like a surprise boss battle in your favorite RPG. Many business leaders face sticker shock when they see the price tags for high-performance GPUs and specialized hardware needed to run complex models.

Cloud infrastructure offers a lifeline here, cutting the need for massive upfront investments. Instead of dropping $100K on servers that might be outdated next year, you can pay as you go and scale resources based on actual usage patterns.

The real budget ninja move involves finding the sweet spot between performance and cost. We've seen companies slash computational expenses by 40% through smart resource allocation, spinning up servers only when needed.

Hardware investments require careful planning since that shiny new processing unit might cost a fortune but sit idle half the time. Budget management becomes less about pinching pennies and more about strategic allocation.

Think of it like building a gaming PC, where you put your money toward the components that actually matter for your specific workload rather than maxing out every spec because it looks cool on paper.
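The buy-versus-rent decision comes down to a break-even calculation. The sketch below uses the $100K hardware figure from above; the $4 per hour cloud rate and the on-premises running cost are placeholder assumptions, so substitute real quotes before drawing conclusions.

```python
def break_even_hours(upfront_cost: float, cloud_rate_per_hour: float,
                     onprem_running_cost_per_hour: float = 0.0):
    """Hours of use at which buying hardware becomes cheaper than renting.

    Returns None when renting is never more expensive per hour than
    running your own hardware, so the purchase never pays for itself.
    """
    saving_per_hour = cloud_rate_per_hour - onprem_running_cost_per_hour
    if saving_per_hour <= 0:
        return None
    return upfront_cost / saving_per_hour

# $100K of servers versus an assumed $4/hour cloud instance.
hours = break_even_hours(100_000, cloud_rate_per_hour=4.0)
years_if_always_on = hours / (24 * 365)
```

With these numbers, the hardware only pays for itself after 25,000 hours, a little under three years of round-the-clock use. A machine that sits idle half the time takes twice as long to break even.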

Integration with Legacy Systems

Legacy systems often act like that one stubborn relative at Thanksgiving who refuses to try new foods. They just won't play nice with your shiny AI tools! Many businesses face this compatibility roadblock when trying to modernize their operations.

Your decades-old inventory system might store critical data but lacks the APIs or processing power needed for modern AI workloads. This creates a technical tug-of-war between keeping valuable historical systems and embracing new capabilities.

The solution isn't always a complete overhaul. Smart businesses create bridge technologies and middleware that connect old and new worlds. Data migration strategies must balance maintaining business continuity with enabling new AI functions.

I once worked with a manufacturing client who used a clever translation layer to feed their 1990s database into a modern predictive maintenance system. Their approach saved millions compared to starting from scratch.
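A translation layer of this kind is often just a small adapter that turns legacy records into the structure a modern pipeline expects. The sketch below parses a fixed-width text record; the field layout, field names, and sample record are invented for illustration and are not the client's actual format.

```python
from datetime import date

# Hypothetical fixed-width layout: (field name, start, end) character offsets.
FIELD_LAYOUT = [
    ("machine_id", 0, 6),
    ("reading_date", 6, 14),       # YYYYMMDD
    ("vibration_centi", 14, 20),   # hundredths of mm/s, zero-padded
]

def parse_legacy_record(line: str) -> dict:
    """Convert one fixed-width legacy record into a clean dictionary."""
    raw = {name: line[start:end].strip() for name, start, end in FIELD_LAYOUT}
    stamp = raw["reading_date"]
    return {
        "machine_id": raw["machine_id"],
        "reading_date": date(int(stamp[:4]), int(stamp[4:6]), int(stamp[6:8])).isoformat(),
        "vibration_mm_s": int(raw["vibration_centi"]) / 100,
    }

record = parse_legacy_record("PRS01719980304000412")
```

Because the adapter is the only code that knows the old format, the legacy system stays untouched and the downstream model sees ordinary named fields.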

The next challenge businesses face after solving legacy integration issues involves addressing the skills gap within their teams.

Addressing Team Skills Gap for AI Adoption

Tech leaders often discover their teams lack critical AI skills only after investing in fancy infrastructure. I've seen this movie before, folks. You buy the Ferrari but nobody knows how to drive stick.

Start with a talent assessment to spot gaps in your current workforce. Our clients map existing capabilities against project needs using simple skills matrices. Next, deploy targeted training through platforms like Coursera or Udacity for your team's development.

We helped a local HVAC company upskill their dispatch team with basic ML concepts in just six weeks.

Don't try to train everyone in everything. Strategic talent acquisition fills critical knowledge holes faster than training alone. A maturity assessment helps you understand where your organization stands in the AI capability spectrum.

One manufacturing client scored themselves a "2 out of 5" and focused resources accordingly. Create a structured deployment plan with clear resource allocation. Finally, build a small Proof of Concept that lets your newly trained team apply skills in a low-risk environment.

This practical approach beats theoretical training every time, like learning to swim by actually getting in the pool rather than watching YouTube tutorials.
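A skills matrix can be as simple as two dictionaries: the levels a project needs and the levels your people have. The sketch below reports where the best available person still falls short; the skills, names, and 0 to 5 scale are hypothetical.

```python
def skill_gaps(required: dict, team: dict) -> dict:
    """Return skill -> shortfall for skills no team member covers fully.

    required: skill -> level the project needs (0 to 5).
    team: person -> {skill: current level}.
    """
    gaps = {}
    for skill, needed in required.items():
        # The strongest team member in this skill sets the team's level.
        best = max((levels.get(skill, 0) for levels in team.values()), default=0)
        if best < needed:
            gaps[skill] = needed - best
    return gaps

project_needs = {"python": 3, "ml_basics": 3, "kubernetes": 2}
current_team = {
    "sam": {"python": 4, "ml_basics": 1},
    "alex": {"python": 2, "ml_basics": 2},
}
gaps = skill_gaps(project_needs, current_team)
```

Here the output points to one skill to train (`ml_basics`) and one that may be faster to hire for (`kubernetes`), which matches the split between targeted training and strategic talent acquisition.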

Optimizing AI Infrastructure for Target Goals

Optimizing your AI infrastructure means aligning tech resources with business goals like a puzzle where all pieces must fit perfectly. You'll need to match your compute power, storage solutions, and network capabilities to your specific AI applications - whether that's real-time recommendations or complex anomaly detection systems.

This section emphasizes Infrastructure Optimization and illustrates key aspects of IT Infrastructure management that align with a strong AI Strategy and support Performance Optimization.

Leveraging Cloud Computing and Hybrid Models

- Cloud platforms provide on-demand compute resources that scale up or down based on your AI workload needs, eliminating the headache of predicting future requirements.

- Organizations can now manage massive AI workloads without massive upfront hardware investments, making advanced AI accessible to businesses of all sizes.

- Hybrid models combine on-premises systems with cloud resources, giving you the best of both worlds for data that needs to stay local and processes that benefit from cloud power.

- Security improvements in cloud AI infrastructure protect your sensitive data while still allowing the computing muscle needed for complex AI operations.

- Resource allocation becomes smarter with cloud-based AI, automatically shifting computing power where it's needed most, like a traffic cop directing cars during rush hour.

- Cloud solutions cut deployment time from months to minutes, letting your team focus on building AI solutions rather than waiting for hardware setup.

- Cost-efficiency comes from paying only for what you use, similar to how you pay for electricity rather than buying your own power plant.

- Performance efficiency improves as cloud providers constantly update their hardware, giving your AI workloads access to the latest tech without replacement costs.

- Workload optimization happens automatically in many cloud environments, with AI systems learning which resources work best for specific tasks.

- Infrastructure flexibility allows quick pivoting between projects or scaling specific applications without disrupting your entire system.

- Cloud resources can be distributed globally, reducing latency for users and creating redundancy that keeps your AI services running even if one data center has issues.

- AI deployment becomes more standardized with cloud platforms, creating consistent environments that reduce "it works on my machine" problems.
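The hybrid trade-offs in the list above can be reduced to a small placement rule. This sketch is deliberately simplistic: the two workload attributes and the rule that steady workloads stay on-premises are assumptions for illustration, and real decisions also weigh cost, latency, and compliance detail.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Workload:
    name: str
    data_must_stay_local: bool   # e.g. regulated or contract-restricted data
    demand_is_bursty: bool       # spiky load benefits from on-demand capacity

def choose_target(workload: Workload) -> str:
    """Pick a deployment target for one workload in a hybrid setup."""
    if workload.data_must_stay_local:
        return "on_premises"     # data residency outranks everything else
    if workload.demand_is_bursty:
        return "cloud"           # pay only while the spike lasts
    return "on_premises"         # assumed cheaper for steady, constant use

workloads = [
    Workload("patient-record-scoring", data_must_stay_local=True, demand_is_bursty=True),
    Workload("holiday-demand-forecast", data_must_stay_local=False, demand_is_bursty=True),
    Workload("nightly-batch-retrain", data_must_stay_local=False, demand_is_bursty=False),
]
targets = {w.name: choose_target(w) for w in workloads}
```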

Implementing Machine Learning Operations (MLOps)

- MLOps combines DevOps principles with machine learning workflows to create repeatable, reliable systems for AI deployment.

- Teams can use open-source tools like MLflow and Kubeflow to track experiments, manage model versions, and simplify collaboration across departments.

- Automated testing protocols catch model drift early, saving countless hours of troubleshooting and preventing costly prediction errors.

- Data pipelines built with MLOps principles process information consistently, reducing the "garbage in, garbage out" problem that plagues many AI projects.

- Version control for models works like Git for code, letting you roll back to previous versions if a new model misbehaves in production.

- Continuous integration practices help teams merge code changes without breaking existing systems, perfect for businesses that can't afford downtime.

- Model monitoring tools alert teams when performance drops below set thresholds, so you can fix issues before customers notice them.

- Data governance frameworks within MLOps help meet compliance standards while protecting sensitive training information through proper encryption.

- Workflow automation reduces manual steps in the deployment process, cutting the time from model creation to production from weeks to hours.

- Resource optimization tools prevent cloud computing costs from spiraling out of control as models scale up.

- Documentation systems capture knowledge about models, making it easier to onboard new team members or troubleshoot issues months later.

- Feedback loops connect model performance with business metrics, helping prove the ROI of your AI investments to stakeholders.
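Two of the practices above, model versioning with rollback and threshold-based monitoring, fit together naturally. The sketch below is a toy in-memory registry written for illustration; it does not use the MLflow or Kubeflow APIs, and the model names and accuracy figures are invented.

```python
class ModelRegistry:
    """Keeps every registered model version and a history of promotions."""

    def __init__(self):
        self._artifacts = []     # index 0 holds version 1, and so on
        self._promotions = []    # promoted version numbers, most recent last

    def register(self, artifact) -> int:
        """Store a model artifact and return its new version number."""
        self._artifacts.append(artifact)
        return len(self._artifacts)

    def promote(self, version: int) -> None:
        """Make a registered version the live one."""
        if not 1 <= version <= len(self._artifacts):
            raise ValueError(f"unknown model version: {version}")
        self._promotions.append(version)

    @property
    def live_version(self):
        return self._promotions[-1] if self._promotions else None

    def rollback(self) -> int:
        """Return to the previously promoted version."""
        if len(self._promotions) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._promotions.pop()
        return self.live_version


def enforce_threshold(registry: ModelRegistry, observed_accuracy: float,
                      min_accuracy: float):
    """Roll back when the live model's accuracy falls below the threshold."""
    if observed_accuracy < min_accuracy:
        return registry.rollback()
    return registry.live_version

registry = ModelRegistry()
v1 = registry.register("churn-model-2024-01")
v2 = registry.register("churn-model-2024-02")
registry.promote(v1)
registry.promote(v2)

# Monitoring reports 71% accuracy against an 80% floor, so v2 is pulled.
live_after_check = enforce_threshold(registry, observed_accuracy=0.71, min_accuracy=0.80)
```

The point of the design is that rollback is a recorded, one-step operation rather than a scramble to find last month's model file.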

With cloud and hybrid models chosen and MLOps practices in place, you can tune your AI infrastructure against the specific business goals it is meant to serve.

Conclusion

Building effective AI infrastructure requires careful planning, strategic thinking, and cross-team collaboration. We've covered the essential components from compute resources to security frameworks that form the foundation of successful AI systems.

Your organization can avoid common challenges like scalability issues and skills gaps by following our step-by-step approach. The right infrastructure setup is crucial for AI projects to drive real business value.

AI infrastructure is not just a technical challenge but a business strategy that requires continuous improvement. Take action now to evaluate your current capabilities, identify areas for improvement, and create an infrastructure plan that will support your AI goals for years to come.

Your competitors are moving forward, and you should too.

For further reading on how to navigate the challenges of team skills gaps in AI adoption, visit our detailed guide here.

FAQs

1. What are the basic components needed for AI infrastructure?

For AI setups, you'll need powerful servers with top-notch GPUs, ample storage systems, and strong networking gear. These work together like a well-oiled machine to handle the heavy lifting of AI workloads. Your hardware choices should match your specific AI goals.

2. How much computing power do I need for my AI projects?

It depends on what you're building. Small machine learning models might run on a decent laptop, while deep learning requires serious hardware muscle. Think about your data size, model complexity, and how fast you need results.

3. Should I build on-premises AI infrastructure or use cloud services?

Cloud platforms offer quick startup and flexibility without buying expensive hardware. On-premises gives you total control and might save money long-term for constant workloads. Many companies use both in a hybrid approach for the best of both worlds.

4. How do I plan for AI infrastructure growth?

Start with your current needs but leave room to grow. Pick scalable solutions that won't need complete overhauls later. Watch your usage patterns and upgrade before bottlenecks slow you down. Regular performance reviews help catch capacity issues early.
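To upgrade before a bottleneck rather than after, you can fit a straight line to recent usage and see when it crosses your capacity. The sketch below uses ordinary least squares on monthly readings; the usage figures are made up, and a linear trend is an assumption that will underestimate urgency if growth is accelerating.

```python
def months_until_full(usage_history: list, capacity: float):
    """Estimate months from the latest reading until usage hits capacity.

    usage_history: one reading per month, oldest first (e.g. TB stored).
    Returns None when usage is flat or shrinking.
    """
    n = len(usage_history)
    if n < 2:
        raise ValueError("need at least two monthly readings")
    mean_x = (n - 1) / 2
    mean_y = sum(usage_history) / n
    # Ordinary least-squares slope and intercept over month indexes 0..n-1.
    slope = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(usage_history)) \
        / sum((x - mean_x) ** 2 for x in range(n))
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    month_at_capacity = (capacity - intercept) / slope
    return max(0.0, month_at_capacity - (n - 1))

# Hypothetical storage usage in TB over four months, with 80 TB installed.
runway = months_until_full([40, 44, 48, 52], capacity=80)
```

In this example the trend leaves about seven months of headroom, which is the kind of number a regular performance review should produce.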

References and Citations

Disclosure: This content is informational and may include affiliate relationships. It is not a substitute for professional advice.

References

- https://www.ourcrowd.com/learn/what-is-ai-infrastructure

- https://www.flexential.com/resources/blog/building-future-ai-infrastructure

- https://cloudian.com/guides/ai-infrastructure/ai-infrastructure-key-components-and-6-factors-driving-success/

- https://spot.io/resources/ai-infrastructure/ai-infrastructure-5-key-components-challenges-and-best-practices/

- https://www.researchgate.net/publication/385558741_Impact_of_AI_on_cybersecurity_and_security_compliance

- https://www.mirantis.com/blog/build-ai-infrastructure-your-definitive-guide-to-getting-ai-right/ (2025-04-29)

- https://www.researchgate.net/publication/392272478_Integrating_Artificial_Intelligence_with_Legacy_Systems_A_Systematic_Analysis_of_Challenges_and_Strategic_Considerations

- https://eajournals.org/ejcsit/wp-content/uploads/sites/21/2025/05/Integrating-Artificial-Intelligence.pdf (2025-05-31)

- https://learn.microsoft.com/en-us/azure/cloud-adoption-framework/scenarios/ai/plan

- https://www.leapsome.com/blog/ai-skills-gap

- https://www.researchgate.net/publication/384910257_Integrating_AI_with_cloud_computing_A_framework_for_scalable_and_intelligent_data_processing_in_distributed_environments (2024-10-24)

- https://www.gartner.com/en/documents/6435907

- https://pages.run.ai/hubfs/PDFs/Complete-Guide-to-MLOps.pdf