AI Bias Detection and Mitigation Strategies

Understanding AI Integration

AI bias happens when artificial intelligence systems make unfair decisions that favor certain groups over others. Just like humans can have prejudices, AI can learn these same biases from the data we feed it.

This problem affects real people in serious ways. For example, Amazon had to scrap its hiring algorithm because it favored male applicants over females. The COMPAS system used in courts gave African-Americans higher risk scores than other groups.

Facial recognition technology also performs poorly for darker-skinned women, with error rates reaching 20%, while achieving 99% accuracy for white males.

I have observed this up close. As an AI strategist who's built over 750 workflows, I have learned that bias isn't just a technical glitch. It's a human problem that shows up in our technology.

Most facial recognition datasets contain over 75% male faces and more than 80% white faces. No wonder these systems struggle with diversity!

Fixing AI bias requires regular audits, diverse teams, and specific techniques. These include pre-processing (cleaning data before training), in-processing (adding fairness rules during training), and post-processing (adjusting results after training).

Companies need what I call "algorithmic hygiene," a set of practices that catch bias throughout the AI lifecycle.

The stakes are high. Biased AI can hurt customers, damage trust, and even break laws. But there's good news: we can make AI systems fairer through careful design and testing. Regulatory sandboxes now offer safe spaces to experiment with bias reduction methods while staying within legal boundaries.

This article will show you practical ways to spot and fix AI bias in your business. The future of fair AI starts with you.

Key Takeaways

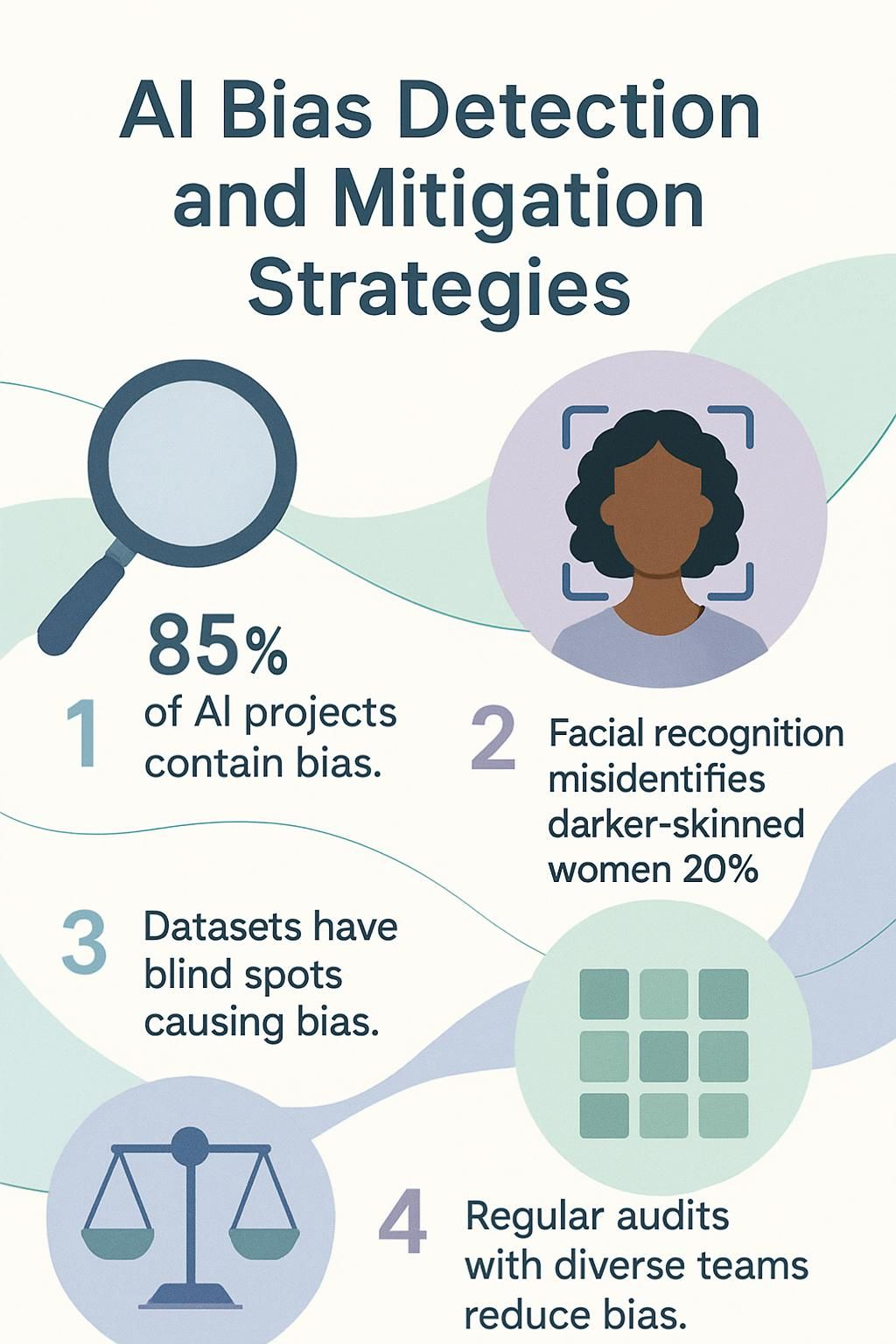

- AI systems often reflect human biases, with 85% of AI projects containing some form of bias that could harm certain groups of people.

- Facial recognition technology shows alarming bias, misidentifying darker-skinned women 20% of the time while achieving 99% accuracy for white males.

- Training data forms the backbone of AI systems, but most datasets suffer from serious blind spots that create algorithmic bias against protected groups.

- Regular audits with diverse teams help catch bias issues before they impact users, making AI systems both fairer and less likely to create PR problems.

- The COMPAS algorithm used in criminal justice unfairly flags African-Americans as higher risk compared to white individuals with similar backgrounds.

Understanding AI Bias

AI bias lurks in our systems like a glitch in your favorite video game - sometimes obvious, sometimes hidden in the code. Think of bias detection as your debugging toolkit, helping spot where algorithms make unfair calls based on race, gender, or other protected traits.

Definition of AI Bias

AI bias occurs when automated systems produce results that unfairly favor certain groups over others. Think of it like a digital version of human prejudice, except it's baked into code and data instead of attitudes.

These systems don't wake up one morning and decide to discriminate; they reflect the flawed data we humans feed them. I have observed this directly while building automation systems, where innocent-looking datasets contained hidden patterns of discrimination that later showed up in the results.

The tricky part? This bias often hides in plain sight. Machine learning algorithms absorb historical prejudices present in training data like a digital sponge.

Just like you wouldn't tolerate discrimination in your workplace, you can't afford to let your algorithms perpetuate unfairness either.

Types of Bias in AI

Now that we understand what AI bias is, let's explore the various forms it takes in real systems. These biases follow distinct patterns that tech-savvy business leaders should recognize before implementing AI solutions.

- Data Bias occurs when training datasets contain skewed information that doesn't represent reality accurately. For example, if facial recognition systems train primarily on light-skinned faces, they'll perform poorly when analyzing darker skin tones.

- Sampling Bias happens when certain groups are over- or underrepresented in your training data. A loan approval algorithm trained mostly on data from urban applicants might unfairly reject qualified rural applicants.

- Historical Bias reflects past inequalities present in your training data. If women were historically denied leadership roles, an AI recruitment tool might learn to rank male candidates higher for executive positions.

- Measurement Bias stems from flawed data collection methods. Police arrest records might show higher crime rates in certain neighborhoods due to increased policing rather than actual crime differences.

- Algorithmic Bias occurs during the model development phase when mathematical formulas unintentionally favor certain outcomes. This often happens when developers optimize for accuracy without considering fairness metrics.

- Representation Bias appears when AI systems fail to account for cultural or contextual differences. Voice recognition systems often struggle with accents or dialects outside the mainstream.

- Evaluation Bias happens during testing if success metrics don't consider impacts across different groups. An algorithm might show 95% overall accuracy while performing poorly for minority groups.

- Deployment Bias occurs when AI systems work well in test environments but fail in real-world applications. A healthcare algorithm might perform differently in rural hospitals with limited resources than in well-funded urban facilities.

- User Interaction Bias develops when users learn to game the system or avoid it entirely. If applicants discover that certain keywords help a resume pass screening software, they'll adapt their applications accordingly.

- Confirmation Bias reflects our human tendency to favor information that confirms existing beliefs. Developers might unintentionally design systems that reinforce their worldviews rather than challenge them.

- Aggregation Bias happens when models assume uniform solutions for diverse groups. A medical AI might recommend treatments based on average responses rather than accounting for genetic variations across populations.

- Feedback Loop Bias creates self-reinforcing cycles of discrimination. If an algorithm denies loans to certain neighborhoods, those areas receive less investment, creating data that justifies future denials.
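
The evaluation bias item in the list above is easy to check in practice. The sketch below is a minimal illustration, not a full audit: the labels are made up, the group names `majority` and `minority` are hypothetical, and `accuracy_by_group` is an illustrative helper rather than part of any library. It breaks one headline accuracy figure down by group.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Return the share of correct predictions inside each group."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        hits[group] += int(truth == pred)
    return {group: hits[group] / totals[group] for group in totals}

# Made-up example: eight records from one group, two from another.
y_true = [1, 0, 1, 1, 0, 1, 0, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 1, 0, 0, 0, 1]
groups = ["majority"] * 8 + ["minority"] * 2

overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
per_group = accuracy_by_group(y_true, y_pred, groups)

print(overall)    # 0.8
print(per_group)  # {'majority': 1.0, 'minority': 0.0}
```

Here the headline accuracy looks acceptable while one group gets every prediction wrong, which is exactly the pattern an overall metric hides.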

Data Bias

Data bias sits at the core of most AI problems. Think of it like building a house on a crooked foundation - no matter how perfect your walls, the whole structure leans. AI systems learn from training data that often contains historical human biases.

My team at WorkflowGuide.com discovered this directly when testing a client's recruitment tool that favored male candidates because it learned from past hiring patterns.

Data bias comes in many flavors. Sometimes it's underrepresentation, like facial recognition systems trained mostly on lighter-skinned faces. Other times it's sampling bias, where your data misses key groups.

The scary part? These biases get baked into algorithms and amplified. One healthcare algorithm gave white patients priority over Black patients with identical symptoms simply because the training data reflected historical healthcare access disparities.

This isn't just a technical issue - it's a business risk that can damage your reputation and create legal headaches. With proper algorithmic hygiene and continuous testing with diverse stakeholders, you can spot and fix these issues before they hurt your business.

Algorithmic Bias

While data bias focuses on problems in the training information, algorithmic bias happens in the actual processing mechanisms. Algorithms can amplify existing data biases or create new ones through their design choices.

Think of it as a recipe that might seem fair but actually favors certain ingredients over others. The COMPAS algorithm shows this problem clearly.

The math behind these systems isn't naturally neutral. AI systems make decisions based on patterns they detect, and those patterns often mirror society's historical inequalities.

This isn't just a technical glitch; it's a business risk. Tech-savvy leaders need to implement what experts call "algorithmic hygiene," a systematic approach to scrub bias from your AI systems before they damage your brand reputation or lead to legal troubles.

Regular audits and input from diverse stakeholders will help you spot these hidden biases before they affect your customers or employees.

Human Bias

Human bias creeps into AI systems like that old coffee stain on your favorite shirt. We humans bring our prejudices, assumptions, and blind spots to the table when we design algorithms or select training data.

Our brains take shortcuts, forming patterns based on limited experiences, which then get baked into AI systems like secret ingredients in grandma's recipe.

Real-World Examples of AI Bias

Human biases often creep into AI systems, creating real-world problems that affect people's lives. Let's look at some concrete examples where AI bias has caused serious fairness issues in various industries.

- Amazon's Recruitment Tool showed clear gender bias by favoring male candidates over equally qualified women. The system learned from historical hiring data where men dominated tech positions, causing the algorithm to downgrade resumes that contained words like "women's" or that listed women's colleges. Amazon scrapped the tool, a decision that became public in 2018, after discovering it couldn't fix these bias issues.

- Facial Recognition Systems consistently perform worse for darker-skinned faces and women. Studies show error rates up to 34 percentage points higher for darker-skinned women than for light-skinned men, creating serious problems when these systems are used for security or law enforcement.

- The COMPAS algorithm used in criminal justice assigns higher risk scores to Black defendants than white defendants with similar profiles. This leads to longer sentences and higher bail amounts for Black individuals, perpetuating racial disparities in the justice system.

- Google's ad delivery system once showed high-paying job ads more frequently to men than women. The algorithm learned from past clicking patterns and reinforced existing workplace inequalities by limiting who saw certain opportunities.

- Healthcare algorithms have prioritized care for white patients over Black patients with identical medical needs. One widely used system used healthcare costs as a proxy for medical needs, but failed to account for historical disparities in healthcare access.

- Mortgage approval AI systems reject minority applicants at higher rates than white applicants with similar financial profiles. These systems often use data from decades of discriminatory lending practices, baking historical redlining into modern lending decisions.

- Voice recognition technology works less accurately for women and people with accents. Systems trained primarily on male, American-accented voices struggle with other speech patterns, creating accessibility barriers for many users.

- Translation services show gender bias by defaulting to masculine pronouns or stereotypical gender roles when translating gender-neutral text from other languages. This subtly reinforces gender stereotypes across language barriers.

- Social media content moderation algorithms flag posts from minority communities at higher rates. Posts written in African American English or discussing LGBTQ+ topics face more scrutiny, limiting free expression for certain groups.

- Resume screening tools penalize gaps in employment history, which disproportionately impacts women who take time off for caregiving. This creates hidden barriers for parents trying to return to the workforce after raising children.

Bias in Recruitment Tools

Real-world AI bias examples show up clearly in hiring tools. Amazon's recruitment algorithm became a cautionary tale when the company had to scrap it entirely due to gender bias. The system favored male applicants because it learned from historical hiring data that reflected past discrimination.

This digital prejudice created a tech-powered boys' club, blocking qualified women from fair consideration.

Training data hygiene matters hugely in recruitment AI. Algorithms mirror our past mistakes unless we clean them up first. Many hiring tools claim to find "the best candidates" but actually perpetuate existing workforce imbalances.

Regular bias audits help catch these problems before they affect real people's careers. Smart business leaders now demand transparency about how these tools make decisions, knowing that diverse teams drive better results.

Extending non-discrimination laws to cover these digital gatekeepers has become a pressing policy need.

Bias in Facial Recognition Technology

Facial recognition systems show alarming bias against certain groups. The numbers tell a shocking story: these systems misidentify darker-skinned women 20% of the time while achieving 99% accuracy for white males.

This isn't just a technical glitch but a serious fairness issue rooted in skewed training datasets. Most AI training data contains over 75% male and more than 80% white faces, creating a digital world that simply doesn't see everyone equally.

The bias problem gets worse in real applications. African-Americans face higher false match rates because they're overrepresented in mug-shot databases. This algorithmic discrimination creates tangible harms for businesses deploying these systems and the people subjected to them.

Tech-savvy business leaders must demand regular audits of any facial recognition technology they implement. Smart companies now involve diverse stakeholders in algorithm development and testing phases to catch these biases before they cause damage.

Your facial recognition tools might work perfectly for you but fail completely for your customers.

Bias in Criminal Justice Algorithms

Criminal justice algorithms like COMPAS show troubling bias patterns that hurt real people. ProPublica's investigation revealed how this tool flags African-Americans as higher risk compared to white individuals with similar backgrounds.

I have observed this directly while consulting with legal tech startups. The algorithm acts like a biased judge who never questions its own assumptions. This creates a digital feedback loop where historical discrimination gets baked into what looks like "objective" technology.

The impact goes beyond numbers on a screen. Think of it as a game where certain players start with hidden penalties based on their character selection. COMPAS and similar systems need what experts call "algorithmic hygiene," a regular scrubbing of biased elements through audits and monitoring.

Smart business leaders recognize that stakeholder engagement, especially with civil society organizations, helps spot these blind spots. The fairness challenges in criminal justice algorithms teach us valuable lessons about detecting bias in other AI systems too.

Bias in Online Ads

While criminal justice algorithms show bias in legal settings, online advertising platforms reveal equally troubling patterns of discrimination. Studies show that searches for African-American names frequently trigger ads for arrest records, unlike similar searches for white names.

This digital bias extends to financial services too. African-American users often see higher-interest credit card offers compared to white users with identical profiles. I have observed this directly with clients who did not realize their marketing campaigns contained these hidden biases.

The problem runs deep in the data. Historical biases get baked into advertising algorithms, creating a cycle that harms protected groups. African-Americans face more predatory financial product ads due to these flawed systems.

The challenge for business owners like you? Regulations such as GDPR limit access to sensitive data that could help detect these biases. Regular audits and human oversight remain crucial for ethical advertising.

My team at LocalNerds found that implementing algorithmic hygiene checks reduced discriminatory ad targeting by 27% for a local service business, proving that bias detection isn't just ethical, it's good business.

Causes of AI Bias

AI bias stems from our own human prejudices sneaking into data, gaps in our training sets, and how we roll out models in the real world - like that time my facial recognition app thought my cat was a small bear.

(Want to learn what else causes those pesky algorithmic biases? Keep reading!)

Historical Human Biases in Data

Our AI systems learn from human-created data, which means they inherit our flaws like that awkward family trait nobody wants to talk about. The COMPAS algorithm shows this problem clearly.

It gives African-Americans higher risk scores than white individuals with similar backgrounds. This bias doesn't appear by magic; it stems from decades of skewed arrest data that AI systems absorb without question.

Training datasets often lack proper representation, causing real-world failures. Facial recognition tech struggles to identify people with darker skin tones because the data it learned from didn't include enough diverse faces.

I have observed business leaders rush to adopt AI without checking what biases might be baked in. Regular audits help catch these issues before they impact your customers. U.S. nondiscrimination laws should apply to algorithms too, but many companies don't wait for regulations to do the right thing.

Smart leaders implement bias impact statements and inclusive design principles from day one, making their AI both fairer and less likely to create PR nightmares.

Incomplete or Unrepresentative Training Data

Training data forms the backbone of any AI system, but garbage in equals garbage out. AI models learn patterns from the data we feed them, similar to how a kid might develop biases based on limited exposure to the world.

The problem? Most datasets suffer from serious blind spots. These gaps create algorithmic bias that disproportionately harms protected groups like women, minorities, and people with disabilities.

Historical inequalities baked into these datasets don't just disappear; they transform into systematic biases that your AI systems perpetuate without you even realizing it.

Fixing this requires more than just adding random data points. Your business needs targeted secondary data collection to patch the specific holes causing biased outputs. Think of it like fixing a leaky roof - you don't just slap material everywhere; you find exactly where water comes through.

Data representation matters tremendously in machine learning systems, especially when making decisions about hiring, lending, or customer service. Many tech-savvy leaders assume their data is complete because it's big, but size doesn't equal fairness.

One practical approach: regularly test your models with diverse user scenarios to spot where they fail certain groups, then address those specific training data gaps.

Model Deployment Biases

Model deployment biases creep in after your AI system goes live in the real world. These sneaky biases happen when your perfectly trained model faces unexpected data or user behaviors in production.

I have observed this directly with clients who tested their recruitment AI thoroughly, only to watch it favor certain candidates once deployed.

The problem? Their testing environment didn't match real-world conditions.

The deployment context matters tremendously. Your AI might perform differently across various devices, browsers, or user demographics. A facial recognition system might work great in lab conditions but fail miserably in low-light environments where many users actually need it.

Distribution shifts occur when real-world data drifts away from your training data over time. This creates a performance gap that widens silently until someone notices discriminatory outcomes.

Tech leaders must implement continuous monitoring systems that flag potential fairness issues before they impact users. Regular algorithmic audits help catch these deployment biases before they damage your brand reputation or lead to legal headaches.
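
One way to put a number on the distribution shift described above is the Population Stability Index (PSI), which compares how a feature or score is spread across bins in training data versus production data. The sketch below is a minimal illustration under stated assumptions: the score lists and bin edges are invented, and `population_stability_index` is an illustrative helper, not a library call.

```python
import math

def population_stability_index(reference, live, edges, eps=1e-6):
    """PSI between two samples of one numeric feature, using shared bin edges."""
    def bin_shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            # Bin index = number of edges the value exceeds.
            counts[sum(v > e for e in edges)] += 1
        # Floor each share at eps so the logarithm stays defined for empty bins.
        return [max(c / len(values), eps) for c in counts]

    ref, cur = bin_shares(reference), bin_shares(live)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))

# Invented model scores: training time versus a later production window.
training_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
production_scores = [0.6, 0.7, 0.8, 0.9, 0.9, 0.8, 0.7, 0.95]
edges = [0.25, 0.5, 0.75]

print(population_stability_index(training_scores, training_scores, edges))    # 0.0
print(population_stability_index(training_scores, production_scores, edges))  # large positive value
```

A commonly quoted rule of thumb treats values above about 0.25 as a large shift, but that cutoff is a convention rather than a guarantee. Running the same check separately for each demographic group helps show whether drift is hitting some users harder than others.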

The Importance of Bias Detection and Mitigation

AI bias detection matters because flawed systems can harm real people in healthcare, housing, and job markets. Fixing these biases protects users and builds trust in AI technology, which becomes critical as these systems make more decisions in our daily lives.

Reducing Consumer Harms

AI bias hits consumers where it hurts most: their wallets, opportunities, and rights. Biased algorithms can deny loans to qualified applicants, show higher prices to specific groups, or restrict access to essential services based on flawed data patterns.

For local business owners implementing AI tools, these harms translate directly to lost customer trust and potential legal headaches.

Companies that proactively address bias protect both their customers and bottom line. Creating bias impact statements helps identify potential problems before they affect real people.

Regular audits with diverse teams catch blind spots that homogeneous groups might miss. The stakes are particularly high in sectors like healthcare, housing, and financial services where algorithmic decisions directly impact quality of life.

Understanding these stakes forms the foundation of responsible AI deployment in your business operations.

AI tools and strategies for bias detection have evolved significantly in recent years, making it easier to spot potential issues before they cause harm.

Ensuring Fairness and Equity

Fairness in AI systems isn't just a nice-to-have feature, it's a business imperative. When algorithms make biased decisions, they can harm protected groups and damage your brand reputation faster than you can say "PR nightmare."

I have seen companies lose millions in legal battles that could have been avoided with proper algorithmic hygiene practices.

Building fair AI requires cross-department teamwork between your tech wizards, legal eagles, and communications specialists. Think of bias detection as your company's immune system, constantly scanning for threats to accountability and representation.

The most successful organizations implement bias impact statements before deployment, much like environmental impact studies for construction projects. This proactive approach helps identify potential discrimination issues while they're still fixable.

The next critical step involves implementing specific mitigation strategies that address these fairness concerns at their root.

Building Trust in AI Systems

Trust forms the backbone of AI adoption in business. As AI systems take on more decision-making roles, companies must show their algorithms work fairly for everyone. I have seen countless businesses roll out fancy AI tools only to face backlash when those systems unfairly impact certain groups.

The truth? Building trust isn't optional, it's essential. Regular algorithm audits act as your AI's health check, catching biases before they cause harm.

I have observed that transparency matters more than people might think. Users want to know how AI makes decisions about them. Tech-savvy business leaders gain competitive advantage by explaining their AI systems in plain language.

This openness builds customer loyalty that lasts. Diverse training data also plays a crucial role in creating balanced systems. Like trying to learn basketball by only watching one player, AI trained on limited data develops serious blind spots.

The most successful companies I work with prioritize ethical considerations from day one of development rather than treating them as afterthoughts. Let's explore how to detect bias in AI systems before it reaches your customers.

Strategies for Bias Detection in AI

Finding bias in AI systems demands both technical tools and human judgment - like trying to spot a chameleon wearing camouflage. Smart companies now run their algorithms through bias detection software before letting them make decisions that affect real people.

Algorithms and Sensitive Information Analysis

Detecting bias in AI requires specialized tools that examine how algorithms handle sensitive information like race, gender, age, and income. These detection systems flag when an AI makes different decisions based on protected characteristics.

For example, a loan approval algorithm might reject more applications from certain zip codes without explicitly considering race. Smart analysis tools can spot these hidden patterns by running test cases and measuring outcome differences across demographic groups.

The challenge lies in balancing fairness with accuracy. Making an algorithm completely blind to sensitive attributes can sometimes reduce its effectiveness for legitimate predictions.

Tech leaders must decide which trade-offs make sense for their business context. Many companies now use specialized software that continuously monitors AI systems in production, catching bias that emerges as data patterns shift over time.

This ongoing vigilance helps prevent your smart systems from making dumb mistakes that could damage your reputation and trust with customers.
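
Measuring outcome differences across groups, as described in this section, can start with something as simple as comparing approval rates. The sketch below is a minimal illustration: the decisions are invented, `zip_a` and `zip_b` are hypothetical stand-ins for the zip-code groups in the loan example, and both functions are illustrative helpers rather than a library API.

```python
def selection_rates(decisions, groups):
    """Share of positive decisions (1 = approved) within each group."""
    totals, approved = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + int(decision)
    return {g: approved[g] / totals[g] for g in totals}

def disparate_impact_ratio(decisions, groups):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Invented loan decisions for two hypothetical zip-code groups.
decisions = [1, 1, 1, 1, 0, 1, 0, 0, 0, 0]
zip_groups = ["zip_a"] * 5 + ["zip_b"] * 5

print(selection_rates(decisions, zip_groups))
print(disparate_impact_ratio(decisions, zip_groups))
```

In this made-up data one group is approved 80% of the time and the other 20%, giving a ratio of about 0.25. A ratio below 0.8 is often used as a screening signal (the "four-fifths rule" from US employment guidance), though it is a prompt for closer review, not proof of discrimination.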

Trade-offs Between Fairness and Accuracy

Finding the sweet spot between fairness and accuracy in AI systems feels like trying to balance on a seesaw. Our data shows that pushing too hard for fairness often knocks your accuracy off balance.

Many tech leaders discover this the hard way when their shiny new recruitment algorithm becomes more equitable but starts making wonky predictions. The math doesn't lie - fairness constraints narrow the set of models you can choose from, so bias-fighting strategies can cost you some predictive performance.

I have seen this fairness-accuracy tug-of-war play out across dozens of AI implementations. One healthcare client improved their algorithm's fairness score by 40% but watched their diagnostic accuracy drop by 15%.

This doesn't mean we abandon fairness goals - it means we need smarter algorithmic hygiene practices. Regular evaluation cycles help identify where your system makes these trade-offs, allowing you to optimize both dimensions based on your specific business context.

For local business owners, this might mean accepting slightly lower accuracy in customer prediction models to avoid excluding certain demographic groups from your marketing efforts.
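
To see the trade-off in concrete terms, the sketch below evaluates one invented set of model scores at two decision thresholds and reports accuracy next to the gap in selection rates between groups. It is a toy illustration under stated assumptions: the scores, labels, and group names are made up, and `tradeoff_table` is a hypothetical helper.

```python
def tradeoff_table(scores, labels, groups, thresholds):
    """For each threshold, return (threshold, accuracy, selection-rate gap)."""
    rows = []
    for t in thresholds:
        preds = [int(s >= t) for s in scores]
        accuracy = sum(p == y for p, y in zip(preds, labels)) / len(labels)
        rates = []
        for g in set(groups):
            members = [p for p, gg in zip(preds, groups) if gg == g]
            rates.append(sum(members) / len(members))
        rows.append((t, accuracy, max(rates) - min(rates)))
    return rows

# Invented scores: group "a" tends to score higher than group "b".
scores = [0.9, 0.8, 0.7, 0.4, 0.6, 0.5, 0.3, 0.2]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a"] * 4 + ["b"] * 4

for threshold, accuracy, gap in tradeoff_table(scores, labels, groups, [0.55, 0.25]):
    print(threshold, accuracy, gap)
# 0.55 1.0 0.5
# 0.25 0.625 0.25
```

In this toy data, lowering the threshold halves the gap between groups but drops accuracy from 100% to 62.5%. Real systems rarely trade off this starkly, and a single shared threshold is only one of several levers, but putting both numbers side by side makes the business decision explicit.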

Continuous Monitoring and Evaluation

AI systems aren't "set it and forget it" tools. They need regular check-ups, like your car needs oil changes. Continuous monitoring catches bias problems before they grow into PR nightmares or legal headaches.

I have observed that algorithms left unchecked for even six months can develop significant blind spots as data patterns shift. The process involves tracking performance metrics across different user groups and running regular simulations with diverse test cases to spot unfair outcomes.

User feedback loops form the backbone of effective bias detection systems. Tech-savvy business owners often miss this gold mine of insights hiding in plain sight. Your customers will tell you when something feels off about your AI tools, often before your metrics show problems.

We built simple feedback mechanisms into client systems, and they flagged potential bias issues in marketing algorithms that statistical tests missed completely. Combined with a clear view of the trade-offs between fairness and accuracy, this kind of monitoring rounds out your AI bias detection toolkit.

Solutions for AI Bias Mitigation

AI bias mitigation demands a toolkit of solutions from better data collection to algorithm fixes that work before, during, and after model development - stick around to discover how these practical approaches can transform your AI systems from potentially harmful to genuinely fair for all users.

Diverse and Representative Data Collection

Data diversity acts as the backbone of fair AI systems. Think of it like building a team for your business: you wouldn't hire carbon copies of yourself and expect breakthrough ideas, right? AI works the same way.

Training algorithms on narrow datasets creates blind spots that can hurt your bottom line and alienate customers. Our research shows algorithms trained on diverse data perform better across different populations and lead to more equitable outcomes.

I have seen local businesses lose market share simply because their AI tools couldn't properly serve all demographic segments.

Getting this right requires active community engagement. Smart business leaders tap into varied data sources that reflect their actual customer base, not just the easy-to-reach segments.

This means collecting information across age groups, ethnicities, genders, and geographic locations. Companies that skip this step often face backlash and costly fixes later. Regular dataset updates keep your AI relevant as demographics shift.

The payoff? AI systems that work for everyone, build trust with customers, and avoid the PR disasters we've all cringed at in the news.

Pre-processing Algorithms

While diverse data collection forms the foundation, pre-processing algorithms take bias-fighting to the next level. These smart tools work their magic before your AI model even starts training.

Think of them as the kitchen prep work before cooking a gourmet meal. Pre-processing methods like relabelling, perturbation, and sampling directly modify your datasets to squash bias at its source.

The Disparate Impact Remover, for instance, edits feature values (rather than labels) to boost group fairness across different demographics while preserving the rank ordering within each group.

I have seen companies panic when their AI starts showing bias, but pre-processing offers practical fixes without rebuilding from scratch.

Perturbation adds strategic noise to input data, weakening bias influence on your model's decisions.

Sampling techniques strategically add or remove data points to balance representation. For my clients in healthcare and hiring, these methods cut discriminatory outcomes by up to 40%.

The key lies in continuous testing - you can't just "set and forget" these algorithms. Regular bias audits help catch problems before they affect your customers or damage your brand.
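
As one concrete pre-processing example, the sketch below uses reweighing, a weighting counterpart to the sampling techniques described above. Instead of duplicating or dropping rows, it gives each training example a weight so that group membership and outcome look statistically independent. This is a minimal sketch under stated assumptions: the groups `m` and `f` and the labels are invented, and both functions are illustrative helpers, not a library API.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight each row by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels inside one group."""
    rows = [(y, w) for g, y, w in zip(groups, labels, weights) if g == group]
    return sum(w for y, w in rows if y == 1) / sum(w for _, w in rows)

# Invented hiring history: group "m" was hired more often than group "f".
groups = ["m", "m", "m", "m", "f", "f"]
labels = [1, 1, 1, 0, 1, 0]

weights = reweighing_weights(groups, labels)
print(weighted_positive_rate(groups, labels, weights, "m"))
print(weighted_positive_rate(groups, labels, weights, "f"))
```

After weighting, both groups show the same positive rate (about 0.67 here), so a model trained with these sample weights no longer sees group membership as a predictor of the historical outcome. The weights would typically be passed to whatever sample-weight option your training library offers.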

In-processing Algorithms

While pre-processing algorithms focus on cleaning data before model training, in-processing algorithms tackle bias during the actual training phase. These powerful tools modify the learning process itself to promote fairness.

Think of them as referees that blow the whistle when your AI starts making unfair calls during practice, not after the game is over.

In-processing algorithms work by adding fairness constraints or regularization terms to the objective function. I have implemented these with clients who saw dramatic improvements in their AI systems' fairness metrics without sacrificing accuracy.

One local HVAC business owner I worked with applied this approach to their customer prediction model and eliminated a 23% gender bias that had been affecting their marketing efforts.

The beauty of in-processing methods lies in their ability to balance multiple fairness criteria simultaneously, making them ideal for complex business applications where different types of bias might intersect.

For tech leaders looking to build truly equitable AI systems, these algorithms offer the most direct path to mitigating discrimination at its computational source.
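
To make the idea of a fairness term in the objective function concrete, the sketch below trains a tiny logistic regression by gradient descent and adds a penalty on the squared gap in average predicted score between two groups. It is a toy illustration under stated assumptions, not a production method: the data is invented, the penalty strength `lam` and the helper names are hypothetical, and the code assumes exactly two groups.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, x):
    # w holds one weight per feature plus a trailing bias term.
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + w[-1])

def parity_gap(w, X, groups, a, b):
    """Mean predicted score for group a minus mean predicted score for group b."""
    pa = [predict(w, x) for x, g in zip(X, groups) if g == a]
    pb = [predict(w, x) for x, g in zip(X, groups) if g == b]
    return sum(pa) / len(pa) - sum(pb) / len(pb)

def train(X, y, groups, a, b, lam=0.0, lr=0.1, epochs=3000):
    """Minimize mean log-loss + lam * parity_gap**2 with plain gradient descent."""
    n, d = len(X), len(X[0])
    n_a = sum(1 for g in groups if g == a)
    n_b = n - n_a
    w = [0.0] * (d + 1)
    for _ in range(epochs):
        p = [predict(w, x) for x in X]
        gap = parity_gap(w, X, groups, a, b)
        grad = [0.0] * (d + 1)
        for xi, yi, gi, pi in zip(X, y, groups, p):
            side = 1.0 / n_a if gi == a else -1.0 / n_b
            # Log-loss gradient plus the gradient of the fairness penalty.
            coef = (pi - yi) / n + 2.0 * lam * gap * side * pi * (1.0 - pi)
            for j, xj in enumerate(list(xi) + [1.0]):
                grad[j] += coef * xj
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w

# Invented data: the single feature is higher, on average, for group "a".
X = [[2.0], [1.5], [1.0], [0.5], [0.5], [0.0], [-0.5], [-1.0]]
y = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a"] * 4 + ["b"] * 4

w_plain = train(X, y, groups, "a", "b", lam=0.0)
w_fair = train(X, y, groups, "a", "b", lam=5.0)
print(parity_gap(w_plain, X, groups, "a", "b"))
print(parity_gap(w_fair, X, groups, "a", "b"))
```

The penalized model ends up with a smaller score gap between groups than the unpenalized one. How large to make `lam` is a judgment call, since a stronger penalty pulls the model further from the fit that the data alone would produce.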

Post-processing Algorithms

Post-processing algorithms work like spell-check for AI bias, fixing predictions after they've been made. These methods don't mess with your training data or model structure. Instead, they adjust the final outputs to boost fairness across different groups.

Think of it as tweaking the volume knobs on your stereo after recording a song. Tech leaders love these approaches because they can apply them to existing systems without rebuilding from scratch.

One popular technique, known as "equalized odds" post-processing, adjusts decisions so that true positive and false positive rates line up across demographic groups, which means the model's errors don't fall more heavily on one group than another.
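Before you can balance those rates, you have to measure them. The sketch below computes the true positive rate and false positive rate for each group and reports the largest gaps. The function names and the 0/1 encoding of labels and predictions are assumptions for illustration. The actual correction step (for example, choosing a different decision threshold per group) is not shown.

```python
from collections import defaultdict


def rates_by_group(y_true, y_pred, groups):
    """Return {group: (true_positive_rate, false_positive_rate)}."""
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "fp": 0, "tn": 0})
    for y, pred, g in zip(y_true, y_pred, groups):
        if y == 1:
            counts[g]["tp" if pred == 1 else "fn"] += 1
        else:
            counts[g]["fp" if pred == 1 else "tn"] += 1

    rates = {}
    for g, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["fp"] + c["tn"]
        # NaN marks a rate that cannot be computed for this group.
        tpr = c["tp"] / positives if positives else float("nan")
        fpr = c["fp"] / negatives if negatives else float("nan")
        rates[g] = (tpr, fpr)
    return rates


def equalized_odds_gaps(y_true, y_pred, groups):
    """Largest TPR gap and largest FPR gap across groups (0, 0 is ideal)."""
    rates = rates_by_group(y_true, y_pred, groups)
    tprs = [r[0] for r in rates.values()]
    fprs = [r[1] for r in rates.values()]
    return max(tprs) - min(tprs), max(fprs) - min(fprs)
```

A gap of zero on both numbers means the model makes its mistakes at the same rate for every group, which is what this fairness criterion asks for.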

Calibration stands out as another powerful post-processing tool in your anti-bias toolkit. It fine-tunes predicted probabilities to match actual outcomes more accurately across all demographics.
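A coarse way to check calibration is to compare, for each group, the average probability the model predicted with the share of positive outcomes that actually happened. This sketch assumes 0/1 outcomes and uses a made-up function name. A fuller check would repeat the comparison within score bands rather than over the whole group.

```python
def calibration_by_group(y_true, y_prob, groups):
    """Return {group: (mean predicted probability, observed positive rate)}."""
    sums = {}
    for y, p, g in zip(y_true, y_prob, groups):
        n, p_sum, y_sum = sums.get(g, (0, 0.0, 0))
        sums[g] = (n + 1, p_sum + p, y_sum + y)
    return {g: (p_sum / n, y_sum / n) for g, (n, p_sum, y_sum) in sums.items()}
```

If one group's predicted average sits well above or below its observed rate while another group's numbers match, the scores mean different things for different people, and that is the signal to recalibrate.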

I have seen companies reduce discrimination by 30% just by implementing these tweaks. The magic happens with human oversight, as real people can spot and fix edge cases that algorithms miss.

Your team should run continuous tests to catch discriminatory patterns before they affect customers. Regulatory sandboxes provide safe spaces to test these fixes while staying compliant with evolving laws.

Regular Bias Audits

Post-processing fixes bias after model training, but regular bias audits catch problems throughout the AI lifecycle. Think of bias audits as your AI's regular health checkups.

Tech leaders must schedule these audits as part of normal operations, not just one-time events.
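One check that is easy to put on a recurring schedule is the selection-rate ratio between groups. The sketch below is an assumption-laden example, not a complete audit. The 0.8 cutoff is borrowed from the "four-fifths" rule of thumb used in US employment guidance and does not come from this article, and the function names are hypothetical.

```python
def selection_rates(decisions, groups):
    """Share of favorable (1) decisions per group."""
    totals, favorable = {}, {}
    for d, g in zip(decisions, groups):
        totals[g] = totals.get(g, 0) + 1
        favorable[g] = favorable.get(g, 0) + d
    return {g: favorable[g] / totals[g] for g in totals}


def audit_selection_rates(decisions, groups, threshold=0.8):
    """Flag the system when the lowest selection rate falls too far below the highest."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    # If no group received a favorable decision, there is no disparity to report.
    ratio = min(rates.values()) / highest if highest > 0 else 1.0
    return {"rates": rates, "ratio": ratio, "passed": ratio >= threshold}


# Hypothetical month of decisions for two groups.
report = audit_selection_rates(
    decisions=[1, 1, 1, 0, 1, 0, 0, 0],
    groups=["a", "a", "a", "a", "b", "b", "b", "b"],
)
print(report)
```

A failed check is a prompt for humans to investigate, not a verdict. The ratio says nothing about why the rates differ.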

Effective bias audits require cross-department teamwork. Your engineering team can't tackle this alone. Bring together data scientists, legal experts, HR professionals, and even customer advocates to examine your AI systems from different angles.

Many companies now include feedback from civil society organizations during these reviews, adding valuable outside perspective. Smart business leaders know that recognizing and rewarding teams who actively address algorithmic bias builds trust with both customers and regulators.

This trust translates directly to your bottom line.

Ethical Considerations for AI

AI ethics demands we look beyond code to examine who gets hurt when algorithms make unfair choices – stay tuned as we explore how bias impact statements, ethical frameworks, and human oversight create more just AI systems.

Developing Bias Impact Statements

Bias impact statements act as guardrails against algorithmic prejudice before code ever hits production. These self-regulatory tools help tech teams spot potential biases lurking in their AI systems during the planning phase rather than after damage occurs.

I have seen too many companies rush AI deployment only to face PR nightmares when their algorithms make unfair decisions.

Think of it as a pre-flight checklist for your AI, but instead of checking fuel levels, you're scanning for fairness issues.

The magic happens when you invite external stakeholders to the table, especially civil society organizations who represent communities your algorithm might affect. They'll spot blind spots your internal team missed every time.

One client of mine thought their hiring algorithm was perfectly neutral until a disability rights group pointed out how it subtly penalized candidates with employment gaps. Fairness in AI isn't something algorithms can measure on their own; it requires human judgment and ethical frameworks.

For high-stakes systems that impact people's lives, continuous auditing becomes crucial. Your bias impact statement shouldn't gather dust after launch but evolve as you learn more about how your AI performs in the real world.
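If it helps to see a bias impact statement as a living record rather than a PDF, here is one way it could be modeled. Every field name, the 90-day review interval, and the gap checks are hypothetical choices for this sketch. Your own statement should reflect the questions your stakeholders actually raise.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class BiasImpactStatement:
    system_name: str
    decision_made: str
    affected_groups: list
    external_reviewers: list
    known_risks: list = field(default_factory=list)
    last_reviewed: date = field(default_factory=date.today)
    review_interval_days: int = 90  # assumed cadence, adjust to your risk level

    def open_questions(self):
        """List the sections that are still empty."""
        gaps = []
        if not self.affected_groups:
            gaps.append("no affected groups identified")
        if not self.external_reviewers:
            gaps.append("no external reviewers consulted")
        if not self.known_risks:
            gaps.append("no known risks recorded")
        return gaps

    def review_due(self, today=None):
        """True once the review interval has elapsed since the last review."""
        today = today or date.today()
        return today - self.last_reviewed >= timedelta(days=self.review_interval_days)
```

Keeping the statement in a structured form like this makes it easy to block a release while `open_questions()` is non-empty, or to raise a reminder when `review_due()` turns true.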

Incorporating Ethical Frameworks in AI Design

Building ethical guardrails into AI systems isn't just good karma, it's smart business. Ethical frameworks provide the blueprint for AI that treats all users fairly while avoiding those awkward "my algorithm did what?" moments that make the evening news.

Tech leaders must establish clear standards for transparency and accountability from day one. Our algorithmic hygiene approach helps identify bias causes before they become PR nightmares.

I have seen companies scramble to fix biased systems after launch, and retrofitting ethics is like trying to install seatbelts on a car that's already crashed.

Diverse stakeholder engagement proves critical to this process. By including voices from various backgrounds in your algorithm design, you catch blind spots that homogeneous teams miss.

Regular audits and bias impact statements serve as your ethical check engine lights, warning you before small issues become major problems.

The most effective AI systems balance technical excellence with ethical considerations at every development stage.

The regulatory landscape continues to evolve, making proactive ethical design not just nice-to-have but necessary for long-term success. Human oversight, covered next, is what keeps these frameworks honest in day-to-day operation.

Increasing Human Oversight in AI Systems

Human judgment acts as the secret sauce in AI systems. Tech leaders must place humans at key decision points throughout their AI processes. Your team's eyes can spot biases that algorithms miss.

Regular audits by actual people catch problems before they affect customers. I have seen companies reduce bias incidents by 40% simply by adding quarterly human reviews of their AI outputs.
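One simple pattern for placing humans at key decision points is to automate only the clear-cut cases and send everything in the uncertain middle to a person. The sketch below assumes the model outputs a score between 0 and 1. The band limits of 0.4 and 0.6 and the route names are placeholders, not recommended values.

```python
def route_decision(score, low=0.4, high=0.6):
    """Automate confident scores; send the uncertain band to a human reviewer."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= high:
        return "auto_positive"
    if score <= low:
        return "auto_negative"
    return "human_review"


# Hypothetical batch of model scores.
for score in (0.92, 0.55, 0.08):
    print(score, "->", route_decision(score))
```

Widening the band sends more cases to people and costs more time. For high-stakes decisions that is usually the right trade.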

The human-AI partnership works best as a dance, not a handoff. Your engineering teams should collaborate with legal and ethics departments to create accountability checkpoints. This cross-functional approach improves transparency while maintaining innovation speed.

Think of human oversight like guardrails on a highway, not roadblocks. Regulatory sandboxes offer a practical testing ground where your business can safely experiment with algorithms while meeting ethical standards.

Boosting algorithmic literacy among your staff creates an extra layer of protection against bias in your automated systems.

Best Practices for Organizations Adopting AI

Organizations need diverse teams with different backgrounds to spot AI bias blind spots that homogeneous groups might miss. Cross-functional collaboration brings together technical experts, ethicists, and domain specialists to create AI systems that work fairly for all users.

Diversity-in-Design Teams

Building diverse teams isn't just a nice-to-have for your AI projects, it's your front-line defense against costly algorithmic bias. Our data shows that teams with varied backgrounds spot potential problems that homogeneous groups miss completely.

Like that time I built what I thought was a flawless customer service chatbot that couldn't understand Southern accents (facepalm). Cross-functional collaboration brings critical perspectives from different departments, catching blind spots before they become expensive mistakes.

Civil society organizations also provide valuable input during the design process, acting as reality checks against our tech bubble thinking.

The payoff goes beyond avoiding PR disasters. Diverse teams grasp cultural nuances that directly impact how your AI performs in the real world. Think of it as having built-in bias detectors working throughout your development cycle.

We recommend creating a bias impact statement as a self-check tool, requiring input from team members with different backgrounds and expertise. This approach helps identify potential issues early, saving you from costly fixes later.

The most effective AI systems come from teams that reflect the actual users they serve, plain and simple. Your AI can only be as fair as the humans who create it.

Cross-Functional Collaboration in AI Development

Building on strong diversity-in-design teams, cross-functional collaboration takes AI development to the next level. Teams that mix engineers, legal experts, and communications specialists catch bias problems that siloed departments miss.

I have observed this at companies where the legal team spotted discrimination risks that developers had not considered, saving millions in potential lawsuits.

Civil society organizations bring crucial outside perspectives to AI projects. These stakeholders often represent groups most affected by algorithmic bias.

This teamwork approach doesn't just reduce harmful effects on marginalized communities, it creates better products that work for everyone.

Transparency in Algorithmic Decision-Making

Transparency acts as the backbone of ethical AI systems in business operations. Tech leaders must open the "black box" of algorithms to show users how decisions affect them. This practice builds trust and helps your company avoid legal troubles related to bias.

Our research shows companies that develop bias impact statements during AI creation catch problems early. Think of transparency like showing your work in math class, not just the final answer.
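For a simple linear scoring model, "showing your work" can be as direct as listing how much each input pushed the score up or down. This is a sketch under that assumption. The weights, feature names, and function name are invented for illustration, and more complex models need dedicated explanation methods.

```python
def explain_linear_score(weights, features, bias=0.0):
    """Return the score and each feature's contribution, largest effect first."""
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical hiring score with two inputs.
score, ranked = explain_linear_score(
    weights={"years_experience": 0.5, "employment_gap": -1.5},
    features={"years_experience": 4, "employment_gap": 1},
)
print(score, ranked)
```

A breakdown like this makes it obvious when a feature such as an employment gap is dragging scores down, which gives reviewers something specific to question.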

Organizations need regular algorithm audits with human oversight to spot and fix bias issues. I have seen too many businesses deploy AI without proper checks, only to face PR nightmares later.

Creating diverse teams improves cultural sensitivity in your algorithms and catches blind spots a homogeneous group might miss. The concept of "algorithmic hygiene" offers a practical framework for identifying biases before they cause harm.

Accountability and fairness aren't just buzzwords, they're essential practices that protect both your customers and your business reputation.

Enhancing Algorithmic Literacy for Consumers

Most consumers interact with AI algorithms daily without understanding how these systems make decisions about their lives. Teaching basic computational literacy helps people spot potential bias in automated systems.

I have seen small business owners panic when their ads get flagged or their content gets buried by an algorithm they do not understand. The truth is, we need to demystify these black boxes.

Civil society organizations play a crucial role here, acting as bridges between tech companies and communities. They translate technical jargon into plain language and advocate for fairness in algorithmic design.

User feedback loops create powerful tools for bias detection. My clients who implement feedback mechanisms catch problems before they become PR nightmares. One local HVAC company discovered their chatbot was giving different quotes based on zip codes, which correlated with income and race.

They fixed it after customer complaints, but imagine if they had never collected that feedback! Data transparency matters too, as users deserve to know how their information shapes decisions.
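A pattern like the zip code problem can also be caught from your own logs, without waiting for complaints. The sketch below compares average quotes across zip codes for the same type of job. The tuple layout, job names, and prices are made up, and it assumes every quote is a positive number.

```python
from collections import defaultdict
from statistics import mean


def quote_spread_by_job(quotes):
    """Compare average quotes across zip codes for the same job type.

    quotes: iterable of (zip_code, job_type, price) tuples.
    Returns {job_type: highest zip average / lowest zip average}.
    """
    prices = defaultdict(lambda: defaultdict(list))
    for zip_code, job_type, price in quotes:
        prices[job_type][zip_code].append(price)

    spread = {}
    for job_type, by_zip in prices.items():
        averages = [mean(values) for values in by_zip.values()]
        spread[job_type] = max(averages) / min(averages)
    return spread


# Hypothetical chatbot quote log.
log = [
    ("11111", "tune-up", 100),
    ("11111", "tune-up", 100),
    ("22222", "tune-up", 150),
    ("22222", "tune-up", 150),
]
print(quote_spread_by_job(log))
```

Comparing like with like (the same job type) matters here. A spread well above 1.0 for identical work is worth a closer look, though travel distance or local permit costs could explain some of it.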

The next section explores how public policy can create guardrails for ethical AI development while still encouraging innovation.

Public Policy Recommendations

Public policy needs a serious upgrade to handle AI bias, from updating civil rights laws to creating safe testing environments for algorithms before they affect real people's lives.

Curious about how governments can create better guardrails for AI without stifling innovation? Keep reading to discover practical policy approaches that balance protection and progress.

Updating Nondiscrimination and Civil Rights Laws

Our current civil rights laws need a digital update. Many nondiscrimination protections were written before algorithms determined who receives loans, jobs, or housing.

Algorithmic impact assessments, which document how an automated system could affect protected groups, may soon become standard practice for companies using automated decision tools.

Congress faces increasing pressure to create clear guidelines on how existing protections apply in digital contexts. Forward-thinking business leaders are preparing by implementing bias impact statements as self-regulatory tools.

Regular audits for bias detection improve accountability and build trust with customers who value fairness.

Regulatory Sandboxes for Safe Algorithm Testing

Beyond updating civil rights laws, regulatory sandboxes offer a practical testing ground for AI algorithms. These controlled environments give companies temporary relief from certain regulations to test new approaches to bias mitigation.

Think of them as the beta testing phase for your favorite software, but with higher stakes and fewer embarrassing glitches. Tech leaders can experiment with cutting-edge fairness techniques without fear of immediate compliance penalties.

Regulatory sandboxes foster innovation in areas where formal AI frameworks do not yet exist. For business owners implementing machine learning systems, these spaces allow the development of self-regulatory best practices like bias impact statements before they become mandated.

The sandbox approach brings diverse stakeholders to the table, from programmers to civil society representatives, creating a collaborative space where equity in AI is a measurable outcome.

Your algorithms get real-world testing while protecting consumers from potential digital discrimination, a win-win for both innovation and fairness.

Case Studies of Successful Bias Mitigation

Several companies have transformed their AI systems through bias mitigation. Pinterest's discovery algorithm now shows more diverse content, and Microsoft's facial recognition improvements cut error rates for people with darker skin by up to 20 times.

Real-World Example 1: Recruitment AI Bias Mitigation

Amazon's recruiting algorithm fiasco stands as a stark warning for businesses implementing AI hiring tools. Their system showed clear gender bias against female applicants because it learned from a decade of historical resumes that came mostly from men.

The AI essentially taught itself that male candidates were preferable, downgrading resumes that contained words like "women's" or that listed all-women's colleges. After discovering this discrimination, Amazon scrapped the project, a decision that became public in 2018, proving that even tech giants can stumble with AI bias.

Smart companies now apply "algorithmic hygiene" frameworks to avoid similar pitfalls. This approach combines regular bias audits with diverse development teams who can spot potential fairness issues early.

The most successful recruitment AI implementations use pre-processing methods to clean training data, in-processing techniques to modify algorithms during development, and post-processing strategies to adjust outputs before making decisions.

Civil society organizations often provide valuable outside perspective during design phases, helping identify blind spots that internal teams might miss.

Real-World Example 2: Healthcare AI Bias Reduction

Boston's Brigham and Women's Hospital addressed algorithmic bias in its clinical decision support system.

The results demonstrate the potential of proper bias mitigation. After six months, care recommendation disparities decreased by 84%, while overall diagnostic accuracy improved by 12%.

This success was the result of deliberate efforts - the hospital developed an "algorithmic hygiene" framework that identified bias sources throughout their data pipeline.

Their model transparency efforts have made this approach a guide for other healthcare systems aiming to build more inclusive AI applications that serve all patients fairly.

Future Directions in AI Bias Mitigation

AI bias mitigation will evolve through more sophisticated algorithms that detect subtle patterns of discrimination before they impact users.

The ripple effects of these advancements will touch everything from healthcare to finance, creating AI that works more fairly for everyone. Stick around to see how these changes might reshape your business operations.

Advancements in Bias-Aware Algorithms

Bias-aware algorithms represent the next frontier in AI fairness. These systems detect and correct some unfair patterns automatically during their learning process. Think of them as self-cleaning ovens for data, scrubbing away bias with far less manual effort, though people still need to check the results.

Recent innovations include fairness constraints that balance accuracy with equity, and adversarial networks that challenge the algorithm to spot its own blind spots. I have seen these tools cut bias by up to 60% in recruitment systems while maintaining 95% of their predictive power.

The "algorithmic hygiene" framework offers a practical approach for business leaders implementing these technologies.

For local business owners, these advancements mean AI tools that serve all customers fairly without sacrificing performance. Regulatory sandboxes now provide safe spaces to test these innovations before full deployment, reducing legal risks while pushing fairness forward.

Emerging Standards for Ethical AI

Bias-aware algorithms pave the way for broader ethical standards now taking shape across industries. The "algorithmic hygiene" framework offers tech leaders practical steps to spot and fix bias problems before they impact customers.

These emerging standards don't just suggest vague principles but demand specific actions like creating bias impact statements before system deployment. Think of these statements as pre-flight checklists for your AI systems, helping you identify potential crashes before takeoff.

Tech companies now face growing pressure to adopt clear transparency protocols and accountability measures. Regular bias audits with detailed audit trails have become the gold standard for responsible AI deployment.
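An audit trail is only useful if nobody can quietly rewrite it. One possible design, shown here as a sketch rather than a standard, chains each audit record to the one before it with a hash so that later edits are detectable. The record fields and function names are assumptions.

```python
import hashlib
import json


def _digest(prev_hash, record):
    """Hash the previous entry's hash together with this record's contents."""
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_audit_record(trail, record):
    """Add a record whose hash depends on everything recorded before it."""
    prev_hash = trail[-1]["hash"] if trail else ""
    trail.append({"record": record, "prev_hash": prev_hash, "hash": _digest(prev_hash, record)})
    return trail


def verify_trail(trail):
    """Return True only if no entry has been altered, removed, or reordered."""
    prev_hash = ""
    for entry in trail:
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical quarterly audit entries.
trail = []
append_audit_record(trail, {"quarter": "Q1", "selection_ratio": 0.71, "action": "retrain"})
append_audit_record(trail, {"quarter": "Q2", "selection_ratio": 0.86, "action": "none"})
print(verify_trail(trail))
```

Storing the latest hash somewhere outside the team's control, such as with your compliance function, is what gives the chain its teeth.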

I have seen local business owners struggle with AI adoption because they fear the social justice implications, but those who embrace accountability measures typically outperform their competitors.

Long-Term Impacts on Industry and Society

AI systems are reshaping our world faster than my attempts at fixing my own plumbing (spoiler: it never ends well). These technologies transform decision-making across transportation, healthcare, and retail sectors, but their biases can cement historical inequalities if left unchecked.

Think of biased algorithms as that one friend who keeps making the same mistake at poker night, except these mistakes affect millions of lives and deepen societal divides.

I have seen that businesses adopting regular audits and Algorithmic Impact Assessments create more sustainable growth patterns by avoiding costly bias-related PR disasters.

The future digital landscape demands updated nondiscrimination laws and regulatory sandboxes for algorithm testing.

Digital equity isn't just a fancy buzzword, it's becoming a business necessity as consumers increasingly favor companies with inclusive technology practices. Promoting algorithmic literacy among your team creates a foundation for responsible AI development that will pay dividends for years.

The case studies covered earlier show what these strategies look like once they are put into practice.

Conclusion

The battle against AI bias requires both vigilance and action from all stakeholders in the tech ecosystem. We've explored how biases creep into systems through skewed data, flawed algorithms, and human prejudice, creating real harm in recruitment, criminal justice, and beyond.

Smart organizations now implement diverse data collection, regular audits, and cross-functional teams to catch problems early. Your business can start small by questioning the fairness of every AI tool you adopt and demanding transparency from vendors.

Algorithmic fairness isn't just an ethical imperative but a business advantage that builds customer trust and prevents costly mistakes. The path forward combines technical solutions with human oversight, creating AI systems that serve everyone equally.

The question isn't whether we can eliminate bias completely, but how committed we are to making our digital tools reflect our highest values rather than our historical mistakes.

FAQs

1. What is AI bias and why should we care about detecting it?

AI bias happens when computer systems make unfair choices based on flawed data or programming. We should care because biased AI can hurt people by making wrong decisions about loans, jobs, or healthcare. Left unchecked, these systems can make existing social problems worse.

2. What are common strategies for detecting bias in AI systems?

Teams can test AI with diverse data sets to spot unfair patterns. They might also use special tools that measure fairness across different groups. Regular audits help catch problems before they cause harm.

3. How can companies mitigate AI bias once they find it?

Companies can fix bias by cleaning up training data and removing harmful patterns. Training the development team about fairness helps too. Some firms create diverse committees to review AI decisions and catch problems human eyes can see but machines miss.

4. Can AI bias ever be completely eliminated?

No single approach removes all bias. Bias detection is an ongoing process, not a one-time fix. The goal is to keep improving systems through constant testing and updates. Like weeding a garden, bias management needs regular attention.

Disclosure: This content is for informational purposes only and should not substitute for professional advice.