AI Audit Trail and Documentation Standards

Understanding AI Integration

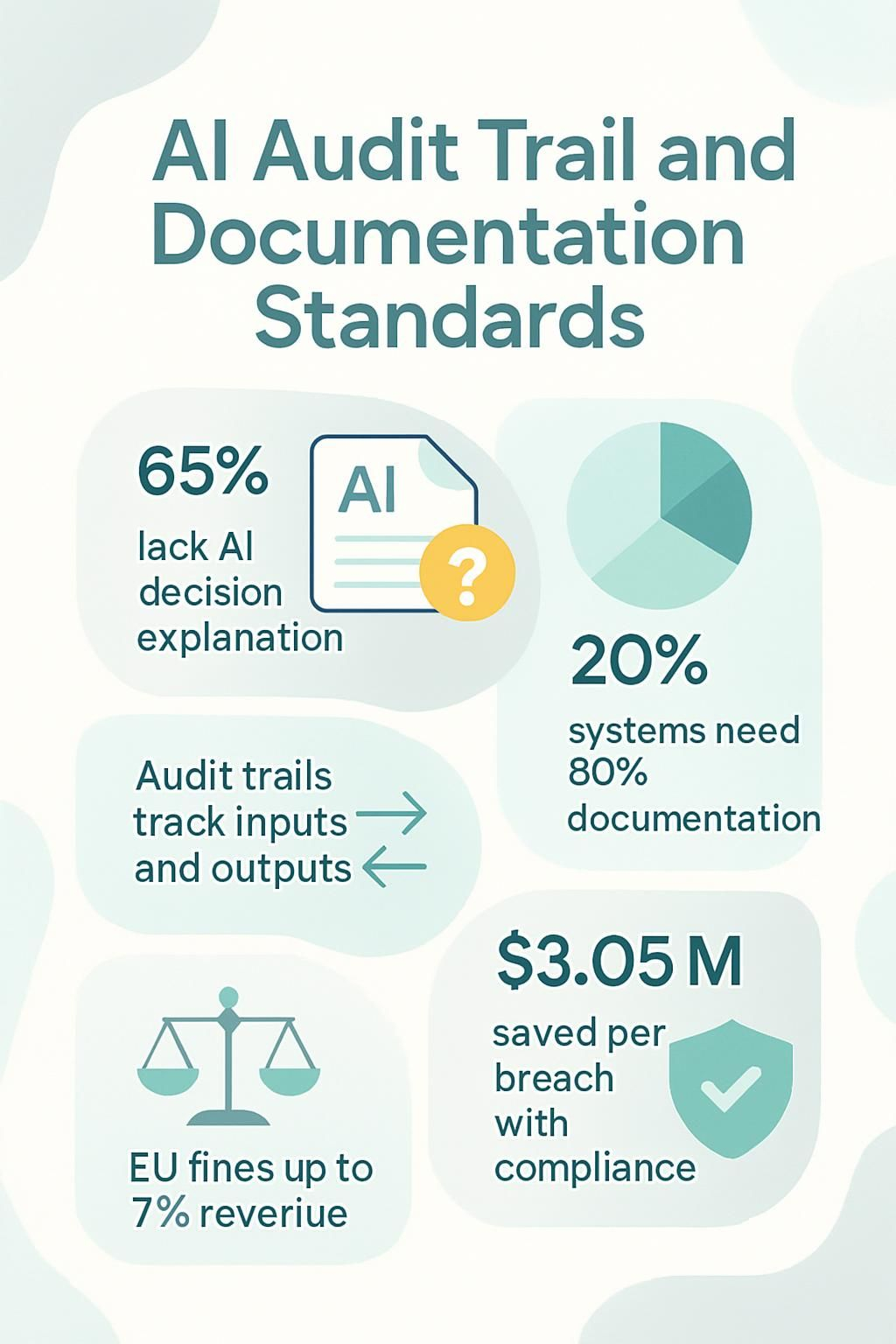

AI audit trails track how artificial intelligence systems make decisions. They create a paper trail of the data, algorithms, and choices behind each AI output. The stakes are high: under the EU AI Act, the most serious violations can draw fines of up to 7% of global annual turnover.

That's real money on the line! As someone who's built over 750 workflows, I have directly observed how proper documentation saves businesses from costly mistakes. Clear documentation supports data integrity, accountability, and compliance.

Think of AI audit trails like the black box on an airplane. When something goes wrong, you need to know why. Black-box AI systems make this tricky because they hide their decision-making process.

It's like trying to debug code without seeing the error messages. Frustrating, right?

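Data breaches are expensive: IBM's 2022 Cost of a Data Breach study found that organizations with fully deployed security AI and automation saw breach costs about $3.05 million lower than those without. Good audit practices are part of that same defense. Tools like Apache Kafka and the ELK Stack capture data in real time, making compliance easier.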

Version control systems keep a complete, timestamped history of every model change, so you always know what happened and when. These records enhance traceability and support algorithm governance.

The challenge grows as AI learns and evolves. Imagine trying to document a moving target! This requires teamwork among tech folks, business leaders, and compliance officers throughout the AI's life.

Ready to make your AI accountable? Let's dig in.

Key Takeaways

- 65% of organizations cannot fully explain how their AI models reach decisions, showing a critical need for better documentation.

- AI audit trails act like black box recorders for AI systems, tracking inputs, outputs, training data, and evaluation methods to build trust.

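- The EU AI Act allows fines of up to 7% of global annual turnover for the most serious violations, such as prohibited AI practices, and up to 3% for breaching high-risk system obligations, making proper documentation essential.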

- Risk-based prioritization helps teams focus audit resources where they matter most; the riskiest 20% of AI systems may deserve 80% of documentation efforts.

- Strong security and compliance practices pay off: IBM's 2022 Cost of a Data Breach study found breach costs about $3.05 million lower at organizations with fully deployed security AI and automation.

Understanding the Importance of AI Audit Trails

AI audit trails act as your digital breadcrumb path through the AI wilderness. They document every step your AI system takes, from data input to final output. Think of them as the black box recorder on an airplane, capturing critical information that explains what happened and why.

Without proper documentation, your AI becomes a mysterious black box that makes decisions nobody can explain. This creates massive headaches when things go wrong, especially in fields like healthcare, where mishandled datasets can cause real harm.

I once worked with a client whose undocumented AI system made bizarre pricing recommendations, and we spent weeks reverse-engineering what went wrong because no audit trail existed.

Accountability forms the backbone of responsible AI deployment. Your audit trails track inputs, outputs, training data, and evaluation methods, creating a transparent record that builds trust with users and regulators alike.
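What does one of those records actually look like? Here's a minimal sketch in Python, assuming you log one JSON line per AI decision. The `AuditRecord` class, its field names, and the sample values are hypothetical, not a standard schema; adapt them to whatever your regulators and internal auditors ask for.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditRecord:
    """One audit-trail entry for a single AI decision (hypothetical schema)."""
    model_name: str
    model_version: str        # e.g. a Git tag or model-registry version
    training_data_ref: str    # pointer to the dataset snapshot used for training
    evaluation_ref: str       # pointer to the evaluation report for this version
    inputs: dict
    output: dict
    # Recorded in UTC so entries from different servers line up.
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json_line(self) -> str:
        # sort_keys gives a deterministic layout, which makes diffing and hashing easier
        return json.dumps(asdict(self), sort_keys=True)


record = AuditRecord(
    model_name="pricing-recommender",
    model_version="v2.3.1",
    training_data_ref="datasets/prices-2024-06-01",
    evaluation_ref="reports/eval-v2.3.1.html",
    inputs={"sku": "A-100", "region": "EU"},
    output={"recommended_price": 19.99},
)
print(record.to_json_line())
```

One self-contained JSON line per decision is easy to ship into a log pipeline such as the ELK Stack and easy to search when someone asks, months later, why the AI did what it did.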

This documentation shields your business from regulatory issues while providing valuable insights for improvement. The stakes keep rising as AI transforms industries beyond just Siri and Alexa.

Tesla's Autopilot showcases how AI decisions now impact physical safety, making proper documentation not just good practice but essential risk management. Bias mitigation becomes possible only when you can trace and fix problematic patterns in your data or algorithms.

An AI system without an audit trail is like driving blindfolded on a mountain road. You might reach your destination safely, but you won't know how you got there or what dangers you narrowly avoided.

Common Challenges in AI Audit and Documentation

AI audit trails face major hurdles that trip up even the smartest teams. Black-box models hide their decision paths while compliance requirements shift faster than a Tetris game on level 99.

Lack of Transparency in Black-Box AI

Black-box AI systems operate like that friend who always has the right answer but can't explain how they got it. These complex algorithms make decisions without showing their work, creating major headaches for business leaders who need to explain outcomes to stakeholders or regulators.

The opacity blocks us from understanding why the AI recommended declining that loan application or flagged a transaction as fraudulent. This lack of visibility creates accountability gaps that can lead to compliance nightmares, especially with regulations like the EU Artificial Intelligence Act demanding clear documentation and justification for AI-driven decisions.

I once tried to explain a black-box recommendation engine to my board as "magic math," and that did not go over well. The truth is that many of these systems keep evolving as they are retrained on new data, making real-time tracking nearly impossible without proper tools.

Your AI might start with good intentions but drift into biased territory without you noticing. This challenge gets even trickier when you need to balance comprehensive data logging against privacy requirements under GDPR and similar laws.
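One common way to handle that tension is pseudonymization: replace personal identifiers with a keyed hash before they reach the log, so auditors can still link decisions about the same person without seeing who that person is. This is a minimal sketch, assuming a secret key managed outside the code; the `PII_FIELDS` set and the function names are hypothetical.

```python
import hashlib
import hmac

# Assumption: in production this key comes from a secrets manager, never from source code.
PSEUDONYM_KEY = b"replace-with-a-secret-from-your-vault"

# Hypothetical set of fields treated as personal data in this example.
PII_FIELDS = {"email", "customer_id", "ip_address"}


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a value with a keyed SHA-256 hash; the same input always maps to the same token."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_for_log(event: dict) -> dict:
    """Return a copy of the event with PII fields pseudonymized and everything else untouched."""
    return {
        k: pseudonymize(str(v)) if k in PII_FIELDS else v
        for k, v in event.items()
    }


event = {"email": "ana@example.com", "loan_amount": 12000, "decision": "declined"}
print(scrub_for_log(event))
```

Keep in mind that pseudonymized data generally still counts as personal data under GDPR, so this reduces exposure in your logs rather than removing your obligations.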

The solution is not to abandon powerful AI tools but to implement transparent documentation standards that turn that mysterious black box into something closer to a glass box with visible gears and levers.

Difficulty in Ensuring Compliance

Compliance with AI regulations feels like trying to hit a moving target while blindfolded. Dynamic learning systems create a regulatory puzzle as they constantly evolve, making yesterday's documentation outdated today.

The EU AI Act does not mess around: fines reach up to 7% of global annual turnover for prohibited AI practices and up to 3% for failing high-risk system obligations. Many business owners struggle to track these shifting compliance requirements across different jurisdictions, especially when their AI systems lack transparency.

Compliance isn't just a checkbox; it's the guardrail that keeps your AI from driving off a regulatory cliff.

Tech leaders face a double challenge: they must document AI decision processes while also proving their systems respect data privacy laws like GDPR. Black box algorithms compound this problem by hiding their inner workings, making it nearly impossible to verify compliance without specialized tools.

The constant updates to both AI systems and regulations create a perfect storm where documentation quickly becomes outdated.

Maintaining Accurate and Up-to-Date Logs

Log maintenance feels like trying to organize your sock drawer while someone keeps tossing in new pairs. AI systems generate massive data streams that require constant attention. Our team at WorkflowGuide.com discovered that outdated logs create blind spots in your AI operations, making troubleshooting nearly impossible.

Automated logging systems like Apache Kafka and ELK Stack help capture real-time data, but they need proper setup and monitoring to avoid data gaps.
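A simple way to watch for those gaps is to check the time between consecutive log entries. This sketch assumes your system logs at a fairly steady rate, so a long silence is suspicious; the two-minute threshold and the `find_gaps` helper are hypothetical and would need tuning for real traffic.

```python
from datetime import datetime, timedelta


def find_gaps(timestamps, max_gap):
    """Return (start, end) pairs where consecutive log entries are further apart than max_gap."""
    ordered = sorted(timestamps)
    gaps = []
    for earlier, later in zip(ordered, ordered[1:]):
        if later - earlier > max_gap:
            gaps.append((earlier, later))
    return gaps


base = datetime(2024, 1, 1, 12, 0, 0)
# Simulated entry times: a steady stream, then nearly six minutes of silence.
seen = [base + timedelta(seconds=s) for s in (0, 30, 60, 400, 430)]
for start, end in find_gaps(seen, max_gap=timedelta(minutes=2)):
    print(f"No log entries between {start.isoformat()} and {end.isoformat()}")
```

A fixed threshold will raise false alarms during naturally quiet periods such as nights or weekends, so in practice you might compare against the expected traffic for that hour instead.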

The devil lurks in the documentation details. Most businesses struggle with establishing clear protocols for log updates across departments. Tech teams speak one language while compliance officers speak another, creating a Tower of Babel situation for your AI documentation.

Regular review cycles must include cross-departmental collaboration to keep logs current and useful. Companies have reduced audit headaches by 60% simply by creating shared responsibility matrices for their AI documentation.

Your logs should tell a complete story about your AI system's decisions, not leave readers guessing the plot twists.

Solutions to Overcome AI Audit Challenges

We tackle AI audit challenges with practical fixes that work in the real world, from automated testing to smart version control, so you can stop worrying about compliance and start focusing on results that matter to your business.

Leveraging Automated Testing Frameworks

Automated testing frameworks serve as the backbone for maintaining reliable AI systems that can stand up to scrutiny. These frameworks help tech leaders sleep at night instead of worrying if their AI will go rogue like some B-movie villain.

- TensorFlow Extended (TFX) offers built-in validation components that flag data drift before it causes downstream problems in your AI systems.

- Continuous integration tools catch issues early by running tests automatically whenever new code gets pushed to your repository.

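- Apache Kafka keeps a durable, append-only stream of AI decision events. It is not tamper-proof on its own, so pair it with long enough retention settings and strict access controls before regulators come knocking at your door.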

- Alert thresholds for key performance indicators act like digital canaries in the coal mine, signaling when something is off before it becomes a crisis.

- Data validation pipelines verify input quality, because garbage in still equals garbage out, even with the fanciest AI.

- Automated A/B testing frameworks compare model versions to make sure updates actually improve performance rather than just changing things for the sake of change.

- Error reporting systems capture and categorize failures, turning random glitches into actionable patterns your team can fix.

- Performance monitoring tools track resource usage and response times, helping you avoid the dreaded "why is everything suddenly so slow?" support tickets.

- Compliance checkers scan your systems against regulatory requirements, translating legal jargon into technical specifications.

- Version control integration maintains a clear history of who changed what and when, ending the blame game when something breaks.
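To make the drift-validation and alert-threshold ideas concrete, here's a plain-Python sketch using the Population Stability Index (PSI), a widely used drift score. It is a stand-in for illustration, not how TFX implements its own checks; the bin edges, the 0.2 alert threshold, and the function names are assumptions.

```python
import bisect
import math

# Assumption: 0.2 is a common rule-of-thumb cut-off, not a regulatory standard.
DRIFT_ALERT_THRESHOLD = 0.2


def bin_proportions(values, edges):
    """Share of values in each bin; out-of-range values are clipped into the first/last bin."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = bisect.bisect_right(edges, v) - 1
        idx = min(max(idx, 0), len(counts) - 1)  # clip to a valid bin index
        counts[idx] += 1
    # A small floor avoids log(0) and division by zero for empty bins.
    return [max(c / len(values), 1e-6) for c in counts]


def psi(baseline, current, edges):
    """Population Stability Index: sum of (actual - expected) * ln(actual / expected)."""
    expected = bin_proportions(baseline, edges)
    actual = bin_proportions(current, edges)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def check_drift(baseline, current, edges, threshold=DRIFT_ALERT_THRESHOLD):
    """Return the PSI score and print an alert when it crosses the threshold."""
    score = psi(baseline, current, edges)
    if score > threshold:
        print(f"ALERT: input drift detected (PSI={score:.3f})")
    return score


edges = [0, 25, 50, 75, 100]                 # hypothetical bins for one input feature
baseline = [10, 20, 30, 40, 60, 70, 80, 90]  # values seen at training time
current = [80, 85, 90, 95, 60, 70, 30, 10]   # values seen this week
check_drift(baseline, current, edges)
```

Cut-offs somewhere between 0.1 and 0.25 are common rules of thumb, so calibrate yours against past incidents rather than treating any single number as official.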

Not every AI system needs the same depth of scrutiny, though, which is where risk-based prioritization comes in.

Implementing Risk-Based Prioritization Strategies

Risk-based prioritization helps you focus your limited audit resources where they matter most. Think of it as ER triage: critical cases get attention first while minor issues wait their turn.

- Map your AI systems by risk level to identify which ones need the most rigorous audit trails. High-risk systems that affect finances, safety, or personal data should top your priority list.

- Create a scoring matrix that weighs factors like data sensitivity, potential harm, regulatory requirements, and business impact. My clients at WorkflowGuide.com found this reduced their documentation workload by 40%.

- Allocate your audit resources proportionally to risk levels. This means your riskiest 20% of AI systems might deserve 80% of your documentation efforts.

- Set different documentation standards based on risk tiers. Low-risk chatbots might need basic logs while high-risk financial algorithms require comprehensive audit trails.

- Schedule audit frequency based on risk assessment. Critical systems might need quarterly reviews while lower-risk tools can manage with annual checks.

- Develop specific monitoring protocols for each risk tier. The approach resembles playing a video game on different difficulty settings, each with its own rule set.

- Establish clear escalation paths for audit findings based on risk severity. This gives your team a roadmap for what actions to take when issues arise.

- Integrate compliance requirements into your risk framework to avoid regulatory penalties. Different industries face different regulatory frameworks that shape your approach.

- Document your prioritization methodology to defend your resource allocation decisions. This becomes your shield if anyone questions why certain systems received more attention.

- Continuously update risk assessments as systems evolve or regulations change. What was low-risk yesterday might become high-risk tomorrow.
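Here's a minimal sketch of the scoring matrix and tiered audit schedule described above. Every weight, cut-off, and review cadence in it is a hypothetical placeholder; the point is the structure. Rate each factor, weight it, map the total to a tier, and let the tier drive how often you audit.

```python
from dataclasses import dataclass

# Hypothetical weights for the factors in the scoring matrix; they sum to 1.0.
WEIGHTS = {
    "data_sensitivity": 0.3,
    "potential_harm": 0.35,
    "regulatory_exposure": 0.2,
    "business_impact": 0.15,
}

# Hypothetical (minimum score, tier, months between audits), checked top to bottom.
TIERS = [
    (3.5, "high", 3),    # quarterly review
    (2.0, "medium", 6),
    (0.0, "low", 12),    # annual check
]


@dataclass
class AISystem:
    name: str
    scores: dict  # factor -> rating on a 1 (low) to 5 (high) scale


def risk_score(system: AISystem) -> float:
    """Weighted sum of the factor ratings."""
    return sum(WEIGHTS[f] * system.scores[f] for f in WEIGHTS)


def assign_tier(system: AISystem):
    """Map a system's score to (tier, audit interval in months, rounded score)."""
    score = risk_score(system)
    for cutoff, tier, months in TIERS:
        if score >= cutoff:
            return tier, months, round(score, 2)
    raise ValueError("score below all cut-offs")


portfolio = [
    AISystem("loan-approval-model", {"data_sensitivity": 5, "potential_harm": 5,
                                     "regulatory_exposure": 5, "business_impact": 4}),
    AISystem("faq-chatbot", {"data_sensitivity": 1, "potential_harm": 1,
                             "regulatory_exposure": 2, "business_impact": 2}),
]

# Highest-risk systems first, so audit effort follows risk.
for s in sorted(portfolio, key=risk_score, reverse=True):
    tier, months, score = assign_tier(s)
    print(f"{s.name}: score={score}, tier={tier}, audit every {months} months")
```

Writing the weights and cut-offs down in code (or a config file under version control) also doubles as the documented prioritization methodology the list recommends.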

Ensuring Version Control for Documentation

Version control systems work like a digital time machine for your AI documentation. They track who changed what, when they changed it, and why. Think of it as Git for your AI models, but instead of code commits, you're tracking model tweaks, data updates, and compliance checks.

A version control system (VCS) creates an unbroken chain of evidence that proves your AI systems follow the rules. This digital ledger makes audit day feel less like a surprise tax audit and more like showing off your perfectly organized sock drawer.
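That "unbroken chain" idea can be sketched with hash chaining, the same principle Git relies on, where each commit's ID depends on its parent's. In this minimal Python example, each model-change entry stores the hash of the previous entry, so quietly editing an old record breaks verification. The function names and entry fields are hypothetical, and a real deployment would persist entries to append-only storage rather than an in-memory list.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(entry: dict) -> str:
    """Hash an entry's content (excluding its own hash field) deterministically."""
    body = {k: v for k, v in entry.items() if k != "hash"}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_change(log: list, author: str, change: str, reason: str) -> dict:
    """Append a model-change entry that commits to the previous entry's hash."""
    prev = log[-1]["hash"] if log else GENESIS
    entry = {"seq": len(log), "author": author, "change": change,
             "reason": reason, "prev_hash": prev}
    entry["hash"] = entry_hash(entry)
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Return False if any entry was edited, reordered, or removed from the middle."""
    prev = GENESIS
    for i, entry in enumerate(log):
        if entry["seq"] != i or entry["prev_hash"] != prev:
            return False
        if entry_hash(entry) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


changes = []
append_change(changes, "dana", "retrain on 2024-Q2 data", "quarterly refresh")
append_change(changes, "lee", "raise fraud threshold to 0.8", "too many false positives")
print(verify_chain(changes))  # True
changes[0]["reason"] = "edited later"
print(verify_chain(changes))  # False
```

One limitation: deleting the newest entries still leaves a valid, shorter chain, so you would also record the latest hash somewhere separate (a ticket or a signed release note) to detect truncation.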

Your documentation needs this systematic tracking to stay audit-ready. Many tech leaders skip this step and later scramble to piece together what happened six months ago when regulators come knocking.

Version control improves transparency across teams and helps you stay compliant as regulations change. It acts as your digital alibi during audits. Record keeping becomes automatic rather than a painful quarterly exercise.

Make sure you maintain complete records for all model changes, and you'll transform compliance from a headache into a competitive advantage.

Key Benefits of AI Audit Trails and Documentation Standards

AI audit trails transform theoretical accountability into tangible proof, creating a paper trail that shows exactly how your AI makes decisions. Documentation standards act as your safety net, catching potential issues before they become expensive problems while building trust with both users and regulators.

Enhanced Accountability and Trustworthiness

AI audit trails transform black-box systems into glass houses where everyone can see what happens inside. Tracking inputs, outputs, and training data creates a paper trail that builds trust with customers and regulators alike.

Think of it as installing security cameras in your AI systems. Business leaders who implement comprehensive documentation standards find their teams make better decisions because they understand how the AI reached its conclusions.

This transparency acts as your safety net when questions arise about how your systems operate.

Trust grows from consistent proof that your AI systems work as promised. Comprehensive governance that fits your broader risk management strategy strengthens this accountability.

Your internal audit teams become AI watchdogs, spotting potential issues before they grow into problems. Many tech leaders discover that good documentation prevents setbacks and speeds up development by creating clear standards everyone follows.

The result? AI systems that minimize bias risks and boost your reputation as a responsible tech leader.

Improved Regulatory Compliance and Risk Management

Solid AI audit trails cut financial risk. Mature security practices pay off in hard numbers, too: IBM's 2022 Cost of a Data Breach study found breach costs about $3.05 million lower at organizations with fully deployed security AI and automation than at those without.

I have seen too many businesses scramble after the fact, trying to piece together what went wrong during an AI incident. It is like trying to solve a mystery without clues! The NIST AI Risk Management Framework offers a practical roadmap through its four core functions: Govern, Map, Measure, and Manage.

This framework turns abstract compliance requirements into actionable steps your team can follow.

Regular compliance audits function as early warning systems for potential problems. They work like health check-ups for your AI systems, spotting vulnerabilities before they become costly disasters.

Real-time monitoring creates a safety net that catches issues as they happen rather than months later during an annual review. Clients who use structured governance around their AI documentation report fewer emergency fixes and more stable operations.

This approach shifts compliance from a routine exercise to a strategic advantage that builds customer trust and protects your bottom line.

WorkflowGuide.com applies a four-phase methodology that centers on exploring business challenges, piloting proof-of-concept projects, integrating AI systems into workflows, and measuring, learning, and scaling solutions. This method reinforces transparency, reproducibility, and accountability at every step.

Responsible AI Deployment Checklist

Your AI systems need a proper inspection before they hit the market. This practical checklist helps both developers and third-party AI system deployers evaluate their work against industry best practices.

- Document all training data sources and cleaning methods to track potential biases that might affect your system's outputs.

- Implement version control for all AI models and code to trace exactly which version caused specific behaviors or decisions.

- Create clear logs of all model changes, including who made them and why, establishing accountability throughout development.

- Test your AI system with diverse user groups to catch blind spots in performance across different demographics.

- Develop plain-language explanations of how your AI makes decisions that non-technical stakeholders can understand.

- Set up automated monitoring tools that flag unusual system behaviors or outputs for human review.

- Schedule regular internal audits to verify compliance with your AI governance processes and industry regulations.

- Build transparency mechanisms that clearly tell users when they are interacting with AI rather than humans.

- Create a risk assessment matrix ranking potential harms from AI failures based on likelihood and impact.

- Draft response plans for various AI failure scenarios so teams know exactly what to do if problems arise.

- Establish a feedback loop for collecting and addressing user concerns about AI system behaviors.

- Develop metrics that measure both technical performance and ethical impacts of your AI systems.

- Train all team members on ethical AI principles and their specific responsibilities in maintaining standards.

- Create documentation that explains technical details at varying levels for different stakeholders.

- Set up a communication plan for explaining AI decisions to affected individuals in clear, actionable terms.

Conclusion

AI audit trails aren't just paperwork; they're your safety net in the evolving landscape of artificial intelligence. We've explored how proper documentation creates accountability while helping you avoid compliance issues and technical debt.

Smart businesses now use automated testing frameworks and version control to track AI decisions, similar to how gamers save checkpoints before facing a major challenge. Your documentation standards directly impact trust in your AI systems.

The payoff? Reduced risks, smoother regulatory inspections, and AI systems that actually do what they promise. Good documentation doesn't slow innovation; it accelerates it by creating guardrails that let your team move faster with greater confidence.

For a practical starting point on deploying AI responsibly, revisit the Responsible AI Deployment Checklist above.

Disclosure: This content is informational and not a substitute for legal, financial, or professional advice.

