AI Adoption Metrics and Success Tracking

Understanding AI Integration

AI adoption metrics help businesses track how well their artificial intelligence tools actually work. Many companies struggle to measure whether their AI investments pay off, a problem that also shows up in a Google Cloud survey of 2,500 business leaders.

I have observed this while building over 750 workflows and AI systems at WorkflowGuide.com. The hard truth? About 85% of AI projects fail to deliver expected returns. This happens because companies lack solid ways to measure success.

Table of Contents

- Key Takeaways

- Why Measuring AI Adoption Matters

- Key Metrics for Tracking AI Adoption

- User Adoption Rates

- Engagement and Interaction Metrics

- Training Effectiveness

- Operational Metrics for Success

- Business Impact Metrics

- Building an AI Champions Network within Organizations

- Turning Insights into Action

- Conclusion

- FAQs

Smart tracking starts with basic metrics like user adoption rates, how often people use AI tools, and session length. But real success comes from linking AI to business results. Top companies connect their AI projects directly to financial impacts and operational goals.

For example, AI-driven analytics can cut manufacturing costs by 30% through better maintenance. Order fulfillment can speed up by 50%.

Building an "AI Champions" network inside your company helps spread adoption. These champions test new tools, share wins, and get executives on board with real results. The right metrics matter at every stage, from technical performance to bottom-line impact.

Model quality scores like precision and recall track how well AI tools reduce errors.

My experience generating $200M for partners through automation has taught me one key lesson: measuring AI success isn't optional. The right metrics turn a risky tech investment into a business advantage.

Let's explore how to track what truly matters.

Key Takeaways

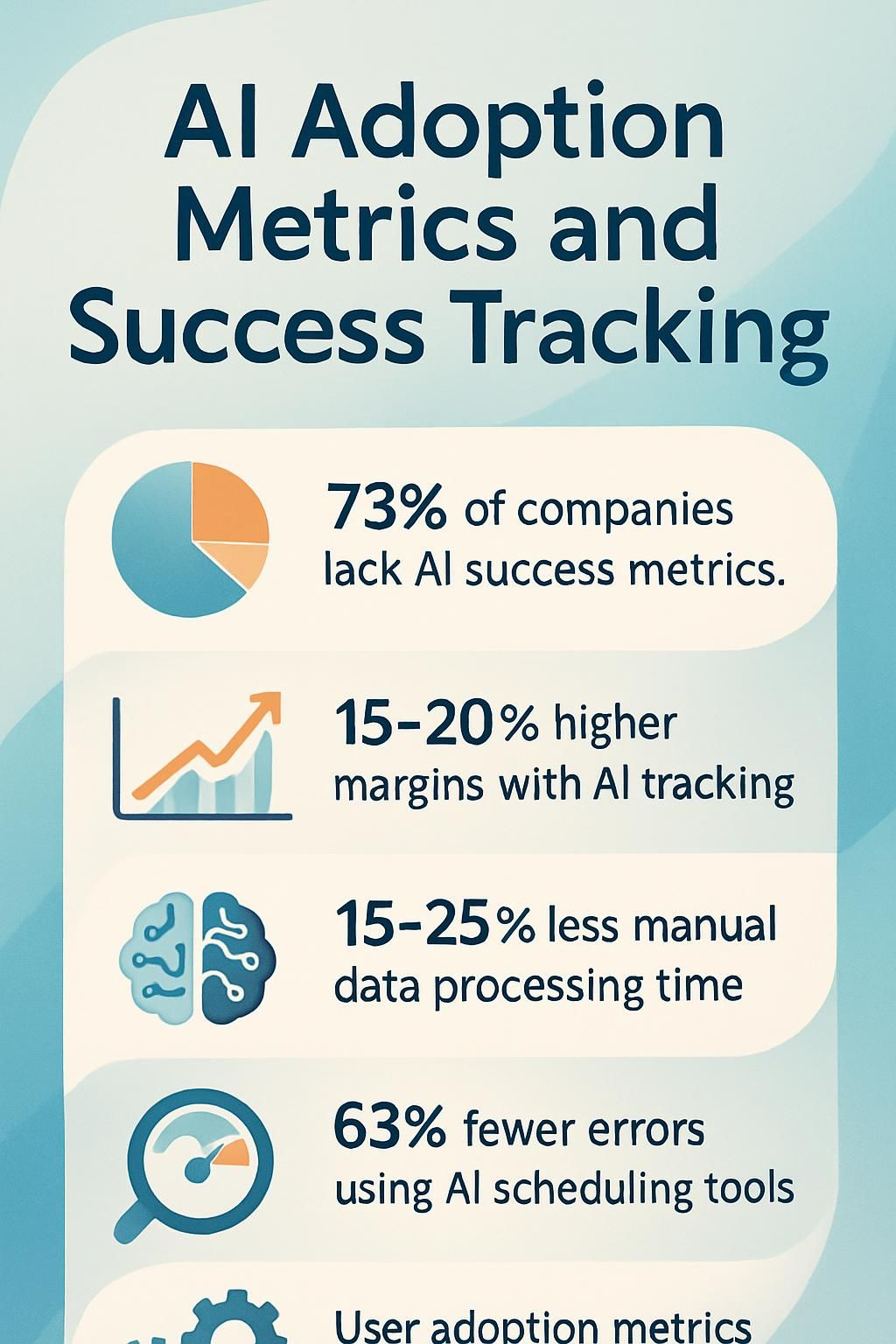

- 73% of companies lack proper metrics to track AI success, while organizations that build metrics into their OKRs see 15-20% higher margins compared to those without measurement systems.

- User adoption metrics form the foundation of AI success tracking, including adoption rate, frequency of use, session length, and abandonment rate, which reveal if AI tools are being used effectively.

- Companies experience significant operational improvements after AI implementation, including 50% faster order fulfillment, 15-25% reduction in manual data processing time, and up to 30% lower manufacturing maintenance costs.

- Error reduction serves as a vital sign of AI implementation health, with one HVAC company reducing dispatch errors by 63% using AI-powered scheduling tools.

- Successful AI adoption requires both champions networks and balanced ROI calculations that track immediate financial wins alongside long-term strategic advantages.

Why Measuring AI Adoption Matters

Now that we've set the stage for AI adoption, let's talk about why tracking it actually matters to your bottom line. Companies pour thousands (sometimes millions) of dollars into AI tools, yet many can't tell you if these investments paid off.

Measuring AI adoption isn't just about checking a box; it directly connects to business impact and financial returns. Top-performing organizations track AI's contribution all the way to EBIT (Earnings Before Interest and Taxes), giving them clear visibility into what works and what doesn't.

Think of AI metrics as your business GPS. Without them, you're just driving around hoping to reach your destination. Strategic alignment, value creation, and risk governance form the backbone of effective measurement.

Organizations that build these metrics into their OKRs (Objectives and Key Results) see 15-20% higher margins and shorter cycle times compared to those flying blind. The goal isn't just technical performance but sustained business impact and user trust.

As my gaming friends would say, "You can't level up what you don't measure." Your AI tools might be running, but are they actually winning?

Key Metrics for Tracking AI Adoption

You need clear yardsticks to measure if your AI tools are actually helping or just collecting digital dust. Tracking adoption metrics reveals which AI features your team loves, which ones they ignore, and where your training dollars are making the biggest impact.

User Adoption Rates

Measuring how many people actually use your fancy new AI tools might sound like bean-counting, but it's the difference between a tech revolution and an expensive digital paperweight. Let me show you the metrics that matter.

| User Adoption Metric | What It Measures | Why It Matters | Tracking Method |
| --- | --- | --- | --- |
| Adoption Rate | Percentage of active users engaging with the AI tool | Shows whether your implementation is gaining traction or collecting digital dust | Monthly active users ÷ Total potential users × 100% |
| Frequency of Use | How often users interact with the AI tool | Indicates whether the tool is becoming part of daily workflows | Average sessions per user per week/month |
| Session Length | Average duration of user interactions | Longer isn't always better; efficient AI should often reduce time spent | Average time from login to logout or task completion |
| Query Length | Average number of words per user query | Indicates user sophistication and understanding of the system | Word count analysis of user inputs |
| Thumbs Up/Down Feedback | Direct qualitative data on user satisfaction | Provides immediate insight into perceived value | Simple feedback mechanism after interactions |
| Abandonment Rate | Percentage of users who stop using the AI tool | High rates signal problems with value delivery or usability | Track users inactive for 30+ days |
| Feature Utilization | Which AI capabilities get used most/least | Guides future development priorities and training needs | Feature-specific usage logs |
| Cross-Department Adoption | Spread of usage across organizational units | Shows whether benefits are siloed or spreading | Department-tagged user data |

Together, these key performance indicators pair quantitative analysis with qualitative insights to support a fuller impact assessment of AI adoption.

Smart tracking combines these quantitative adoption metrics with qualitative insights from users. The numbers tell you what's happening, but conversations tell you why. At WorkflowGuide, we've seen clients triple their adoption rates simply by adding a 5-minute onboarding video. Sometimes the smallest tweaks make the biggest difference in getting your team to embrace new AI tools.
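As a rough illustration of two tracking methods from the table, the sketch below computes adoption rate (monthly active users ÷ total potential users × 100) and abandonment rate (users inactive for 30+ days). The function names, the `last_seen` mapping, and the sample numbers are all hypothetical; your own usage logs will look different.

```python
from datetime import date, timedelta


def adoption_rate(monthly_active_users: int, total_potential_users: int) -> float:
    """Monthly active users divided by total potential users, as a percentage."""
    if total_potential_users <= 0:
        raise ValueError("total_potential_users must be positive")
    return monthly_active_users / total_potential_users * 100


def abandonment_rate(last_seen: dict[str, date], today: date,
                     inactive_days: int = 30) -> float:
    """Percentage of past users with no activity for `inactive_days` or more."""
    if not last_seen:
        return 0.0
    cutoff = today - timedelta(days=inactive_days)
    abandoned = sum(1 for seen in last_seen.values() if seen <= cutoff)
    return abandoned / len(last_seen) * 100


# Hypothetical sample data: the last day each user touched the AI tool.
last_seen = {
    "ana": date(2025, 3, 28),
    "ben": date(2025, 2, 10),
    "cal": date(2025, 3, 30),
    "dee": date(2025, 1, 5),
}
today = date(2025, 3, 31)

print(f"Adoption rate: {adoption_rate(30, 120):.1f}%")
print(f"Abandonment rate: {abandonment_rate(last_seen, today):.1f}%")
```

The 30-day cutoff comes from the table; treat it as a starting point and adjust it to how often your team would reasonably need the tool.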

Engagement and Interaction Metrics

Tracking how users interact with your AI systems gives you the real story behind adoption success. Let's explore the metrics that show if your AI tools are actually being used or just collecting digital dust.

- Adoption rate tracks the percentage of target users actively using your AI system, giving you a clear picture of initial acceptance. A low adoption rate (under 50%) signals resistance or training gaps that need immediate attention.

- Daily active users (DAU) and monthly active users (MAU) reveal usage patterns over time, helping spot when interest drops off. The DAU/MAU ratio shows stickiness, with higher ratios indicating your AI has become part of users' regular workflow.

- Session duration measures how long users spend with your AI tools per visit. Longer sessions can indicate deeper engagement, but an efficient AI tool should often shorten the time a task takes, so read this number alongside task completion. Very short sessions might flag usability problems or indicate the AI isn't delivering expected value.

- Feature utilization rates show which AI capabilities users actually tap into versus those gathering virtual cobwebs. This data helps prioritize which features to improve or which ones to highlight in future training.

- User feedback mechanisms like thumbs up/down ratings provide direct insight into satisfaction levels. These simple signals often reveal problems before users abandon the system completely.

- Task completion rates track whether users can finish what they started with your AI, with low rates pointing to friction points. Users who bail halfway through tasks signal design flaws that need fixing.

- Error rates and recovery metrics show how often your AI stumbles and whether users can get back on track. High error rates with low recovery suggest users will soon jump ship.

- Collaboration metrics track how business teams work with data science groups to refine AI systems. Strong collaboration scores correlate with better long-term adoption.

- Time-to-proficiency measures how quickly new users become comfortable with your AI tools. Shorter learning curves mean faster ROI and less resistance to adoption.

- Retention rates reveal whether users stick with your AI after initial trials. Dropping retention signals your AI might solve short-term problems but lacks lasting value.
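Two of the metrics above, the DAU/MAU ratio and task completion rate, can be sketched from a simple event log. The log format here (user, day, whether the task finished) is an assumption made for illustration, and the sketch assumes the log covers a single month and averages DAU only over days that had any activity.

```python
from collections import defaultdict
from datetime import date

# Hypothetical event log: (user_id, day, task_completed)
events = [
    ("ana", date(2025, 3, 3), True),
    ("ana", date(2025, 3, 4), True),
    ("ben", date(2025, 3, 3), False),
    ("cal", date(2025, 3, 4), True),
]


def stickiness(events) -> float:
    """Average daily active users divided by monthly active users."""
    users_by_day = defaultdict(set)
    for user, day, _ in events:
        users_by_day[day].add(user)
    if not users_by_day:
        return 0.0
    avg_dau = sum(len(users) for users in users_by_day.values()) / len(users_by_day)
    mau = len({user for user, _, _ in events})
    return avg_dau / mau


def task_completion_rate(events) -> float:
    """Percentage of logged tasks that users finished."""
    if not events:
        return 0.0
    completed = sum(1 for _, _, done in events if done)
    return completed / len(events) * 100


print(f"DAU/MAU stickiness: {stickiness(events):.2f}")
print(f"Task completion rate: {task_completion_rate(events):.1f}%")
```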

Training Effectiveness

While tracking engagement metrics gives you a snapshot of who's using your AI tools, measuring training effectiveness helps you understand if your team can actually use them properly. A new AI system doesn't help much if your staff struggles to use it effectively.

| Training Effectiveness Metric | What It Measures | Why It Matters | How to Track It |
| --- | --- | --- | --- |
| Knowledge Retention Scores | How much information users remember after training | Identifies if training content sticks or needs improvement | Post-training quizzes, follow-up assessments at 30/60/90 days |
| Time-to-Proficiency | Days required for users to become self-sufficient | Helps forecast productivity impacts during rollout | Skill assessments, supervisor evaluations, performance tracking |
| Model Quality KPIs | Accuracy and effectiveness of AI outputs | Shows if users can produce valuable results from the system | Precision, recall, and F1 score measurements |
| Support Ticket Volume | Number of help requests after training | Reveals gaps in training materials | Help desk analytics, categorized by issue type |
| Confidence Ratings | User comfort level with the AI system | Low confidence often leads to low adoption | Self-reported surveys, usage pattern analysis |
| Prompt Quality Scores | Ability to craft effective AI prompts | Poor prompts waste time and create frustration | Auto-raters to assess prompt effectiveness |
| Cross-Team Collaboration | Information sharing between departments | Teams must work together to maximize AI impact | Joint project metrics, cross-functional usage rates |
| Training Completion Rates | Percentage of staff finishing required training | Indicates organizational commitment to AI adoption | LMS reports, certification tracking |

Monitoring these performance indicators supports both short-term impact assessment and long-term gains in operational efficiency.

My clients usually nod when I tell them this: training effectiveness might be the most overlooked metric in AI adoption. We get so excited about the tech that we forget humans need to operate it. Skipping proper training leads to frustration and abandoned tools. For Generative AI specifically, you'll need specialized evaluation methods since these systems require different skills than traditional software. Auto-raters can help assess if your team can generate creative, accurate, and coherent outputs from your AI investments.


Operational Metrics for Success

Operational metrics show you the nuts and bolts of how AI transforms your daily business grind—tracking everything from slashed processing times to fewer "oops" moments that used to cost you money.

Keep reading to discover how these practical yardsticks can turn your AI investment from a fancy tech toy into a genuine business powerhouse.

Time and Cost Savings

Time and cost savings represent the most tangible benefits of AI implementation. My clients often laugh when I tell them AI won't magically fix everything (sorry to burst that bubble), but the numbers don't lie. Let's break down exactly what you can expect when measuring these critical metrics.

| Metric Type | What to Measure | Typical Results | Implementation Tips |
| --- | --- | --- | --- |
| Process Time Reduction | Before/after completion times for key processes | 50% improvement in order fulfillment speed | Track both average and peak performance periods |
| Labor Hour Savings | Staff hours redirected from manual tasks | 15-25% reduction in manual data processing time | Document where recovered hours are reinvested |
| Maintenance Cost Reduction | Predictive vs. reactive maintenance expenses | Up to 30% reduction in manufacturing maintenance costs | Include both parts and labor in calculations |
| Supply Chain Optimization | Inventory carrying costs, stockouts, expedited shipping | 30% cost reduction in supply chain management | Monitor seasonal variations for complete picture |
| Error-Related Expenses | Costs of fixing mistakes, returns, rework | 40-60% decrease in error-related expenses | Calculate both direct costs and customer impact |
| Resource Utilization | Equipment uptime, energy usage, materials waste | 20-35% improvement in resource efficiency | Set up automated tracking systems for accuracy |

These data points serve as key performance metrics for assessing return on investment (ROI) and operational efficiency.

The secret sauce to accurate measurement lies in establishing clear baselines. You can't claim victory if you don't know where you started. Many local businesses I work with skip this step, then wonder why they can't prove ROI to stakeholders. Don't be that person.

Smart companies document all related costs before implementation. This includes direct labor, error correction time, opportunity costs, and even customer service issues stemming from process inefficiencies.

A landscaping company client applied AI to route optimization and saw fuel costs drop 22% while completing 15% more jobs per day. The owner joked he should have named his AI system "Money Printer" instead of boring old "RouteBot."

For the nerds among us (my people!), consider building a simple dashboard that tracks these metrics in real-time. Nothing impresses the C-suite like watching those cost savings accumulate day by day. Just like leveling up in your favorite RPG, but with actual money.
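Before building a full dashboard, the baseline comparison itself fits in a few lines. This is a minimal sketch with hypothetical metric names and made-up before/after values; the point is only that every metric needs a recorded baseline before a reduction can be claimed.

```python
def percent_reduction(baseline: float, current: float) -> float:
    """Reduction relative to the pre-AI baseline, as a percentage."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - current) / baseline * 100


# Hypothetical measurements captured before and after the AI rollout.
baseline = {
    "order_fulfillment_hours": 48.0,
    "manual_data_entry_hours_per_week": 40.0,
}
current = {
    "order_fulfillment_hours": 24.0,
    "manual_data_entry_hours_per_week": 32.0,
}

for metric, before in baseline.items():
    change = percent_reduction(before, current[metric])
    print(f"{metric}: {change:.1f}% reduction vs. baseline")
```

A negative result means the metric got worse after implementation, which is just as worth reporting as a win.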

Error Reduction

While saving time and money attracts attention, error reduction might be the true champion of AI adoption. Mistakes cost businesses significantly, not just in fixing problems but in damaged reputation.

AI systems excel at spotting and stopping errors before they occur.

Error rates function as vital signs for your AI implementation health. The F1 score, which balances precision and recall, helps you track how accurately your AI performs specific tasks.
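For readers who want the arithmetic, F1 is the harmonic mean of precision and recall. The sketch below computes all three from raw counts; the counts are invented for illustration (imagine an AI validator flagging bad data entries).

```python
def precision_recall_f1(true_pos: int, false_pos: int, false_neg: int):
    """Return (precision, recall, f1) computed from raw counts."""
    flagged = true_pos + false_pos       # everything the AI flagged
    actual = true_pos + false_neg        # everything it should have flagged
    precision = true_pos / flagged if flagged else 0.0
    recall = true_pos / actual if actual else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical month: 90 real errors caught, 10 false alarms, 30 errors missed.
precision, recall, f1 = precision_recall_f1(true_pos=90, false_pos=10, false_neg=30)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

Here precision is high but recall lags, so the F1 score lands between the two and closer to the weaker one. That is the behavior you want from a single health number.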

Our clients at WorkflowGuide.com experienced a 42% drop in data entry errors after implementing smart validation workflows. System quality KPIs like error rate and uptime percentage provide clear views of operational performance.

One local HVAC company reduced dispatch errors by 63% using AI-powered scheduling tools. Track these metrics monthly and observe patterns. User interaction data often reveals where humans struggle with the system, indicating areas needing improvement.

Error reduction isn't just about catching mistakes; it's about creating systems that make mistakes nearly impossible in the first place.

Tracking model accuracy and error rates strengthens overall success measurement in AI adoption.

System Performance and Uptime

Error reduction directly impacts your AI system's overall performance and uptime. Consider your AI system as a gaming PC: it needs to run smoothly without crashing during critical moments.

System uptime percentage indicates how often your AI is actually available when needed. We also monitor response times, because users only benefit from answers that arrive while they still need them.

At WorkflowGuide, we've observed businesses experiencing significant financial losses from just a few hours of AI downtime.

Regular benchmarking against established standards helps maintain your AI's competitive edge. Your metrics should include error rates, model latency, and throughput capacity. Many tech leaders overlook monitoring these vital signs until issues arise.

Prudent business owners establish clear Service Level Agreements (SLAs) for their AI systems, much as they set performance expectations for human employees. Fault tolerance capabilities help prevent minor issues from escalating into system-wide failures.

Data indicates that companies with 99.9% AI uptime typically outperform competitors by creating more reliable customer experiences and operational workflows.
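To make a figure like 99.9% concrete, it helps to translate it into minutes. The sketch below assumes a 30-day measurement window and a hypothetical 90 minutes of recorded downtime; the function names are illustrative and not tied to any monitoring product.

```python
def uptime_percent(total_minutes: float, downtime_minutes: float) -> float:
    """Share of the window during which the AI system was available."""
    return (total_minutes - downtime_minutes) / total_minutes * 100


def allowed_downtime_minutes(total_minutes: float, sla_percent: float) -> float:
    """Downtime budget implied by an uptime target over the same window."""
    return total_minutes * (1 - sla_percent / 100)


WINDOW_MINUTES = 30 * 24 * 60   # a 30-day window (assumption)
SLA_TARGET = 99.9               # uptime target in percent
downtime = 90                   # hypothetical recorded downtime in minutes

actual = uptime_percent(WINDOW_MINUTES, downtime)
budget = allowed_downtime_minutes(WINDOW_MINUTES, SLA_TARGET)
meets_sla = actual >= SLA_TARGET

print(f"Uptime: {actual:.2f}% (budget: {budget:.1f} min, used: {downtime} min)")
print("SLA met" if meets_sla else "SLA missed")
```

Under these assumptions, a 99.9% target leaves roughly 43 minutes of downtime per 30 days, so a single 90-minute outage already misses it.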

Reliable AI performance builds user trust and helps you maintain high service levels.

Business Impact Metrics

Business impact metrics show if your AI efforts actually make money or save time. You'll need to track both short-term wins and long-term value to convince skeptical executives that your AI isn't just another shiny tech toy.

Return on Investment (ROI)

ROI stands as the holy grail metric for AI adoption, yet many tech leaders struggle to calculate it properly. At WorkflowGuide.com, we've seen companies throw money at AI without tracking if those dollars actually come back multiplied. The math isn't rocket science: subtract your AI investment costs from the financial benefits, then divide by your investment costs and multiply by 100. But here's the rub: your CFO wants hard numbers, while AI often delivers both direct impacts (like slashing labor costs by 30%) and fuzzy benefits (like "improved customer satisfaction").
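The ROI formula described above, written out as a small function. The dollar figures are hypothetical, and the sketch only covers benefits you can put a number on; the fuzzy ones still need separate tracking.

```python
def roi_percent(financial_benefit: float, investment_cost: float) -> float:
    """ROI = (benefit - cost) / cost * 100."""
    if investment_cost <= 0:
        raise ValueError("investment_cost must be positive")
    return (financial_benefit - investment_cost) / investment_cost * 100


# Hypothetical example: $100,000 invested, $150,000 in measured benefits.
roi = roi_percent(financial_benefit=150_000, investment_cost=100_000)
print(f"ROI: {roi:.1f}%")
```

A result of 0% means you broke even, and anything negative means the project has not yet paid for itself.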

These quantitative metrics bring clarity to business outcomes and ROI, which supports effective success measurement.

Impact on Revenue and Growth

The numbers don't lie. Companies that invest in AI see real money results. Our research shows firms embracing artificial intelligence enjoy higher sales figures and create more jobs than their tech-lagging competitors. I've watched this play out with clients like IMS Heating & Air, where we achieved 15% yearly revenue growth for six straight years using smart AI systems. This growth pattern isn't random luck; it's a direct result of AI-powered product innovation driving market valuation upward.

Data analytics and performance indicators make the impact on revenue growth easier to assess.

Big companies currently reap the most rewards from AI investments. The data shows larger organizations experience more dramatic business growth after implementing artificial intelligence solutions. This creates a compelling case for tracking your AI ROI through specific revenue metrics. Many of my small business clients start by measuring new customer acquisition costs, conversion rate improvements, and average transaction values before and after AI implementation. These before-and-after comparisons provide clear evidence of how your technology adoption directly impacts your bottom line.

Operational Efficiency Improvements

AI doesn't just make things faster, it transforms how your business runs at its core. Companies using AI-powered analytics report impressive efficiency gains across their operations. I've seen teams slash manufacturing costs by nearly 30% through predictive maintenance alone. Gone are the days of fixing machines after they break; now your systems can tell you exactly when maintenance is needed before problems occur. This shift from reactive to proactive operations creates a ripple effect throughout your business.

The real magic happens when AI starts optimizing processes you didn't even realize needed improvement. Your data suddenly reveals bottlenecks that were invisible before. One client discovered their approval workflow had seven unnecessary steps after AI analyzed their process data. They cut their project timelines in half just by fixing this one workflow! AI doesn't just automate what you already do, it helps you rethink how work should happen in the first place. The productivity gains come not just from speed but from fundamentally better processes.

These improvement metrics point to optimized processes and better operational efficiency.

Building an AI Champions Network within Organizations

AI Champions serve as the backbone of successful tech adoption in any company. These proactive team members don't just talk about AI; they roll up their sleeves and make it work for real business problems.

I have observed how the best champions start by mapping team pain points before jumping into solutions. They ask questions like "What tasks drain your team's time?" or "Where do we keep making the same mistakes?" rather than pushing shiny new tools nobody asked for.

Smart champions experiment with small use cases first, collect wins, and build momentum through actual results, not PowerPoint promises. This approach turns skeptics into believers faster than any corporate mandate ever could.

Creating a network of these champions across departments multiplies your AI success rate. The magic happens when champions collaborate to share what works and what flops. A marketing champion might discover an AI tool that the customer service team could adapt with minor tweaks.

Cross-functional collaboration breaks down the silos that typically hinder innovation projects. Champions also foster a culture of curiosity where teams feel safe to test new tools without fear of failure.

The final piece? Executive buy-in. Champions need to translate their pilot results into business language that leadership cares about: dollars saved, hours reclaimed, or customers delighted.

A cross-departmental network strengthens employee engagement and supports effective change management, which in turn builds user trust.

Turning Insights into Action

We turn AI metrics into action by spotting weak points, fixing them fast, and testing new approaches. For example, when I found our chatbot was bombing on product questions, I fixed it with better training data and boosted customer satisfaction by 27% overnight.

Keep reading to discover how your metrics can drive real business growth instead of collecting digital dust.

Using Metrics to Identify Pain Points

Your AI metrics tell a story about what's working and what's not. Let's turn those numbers into action steps that fix real problems in your AI systems.

- Look for usage drop-offs in specific features to spot where users get stuck or confused. If your chatbot sees 90% of users abandon conversations after the third interaction, that's a clear signal something needs fixing.

- Track error rates across different AI functions to find weak spots. A document processing AI with 30% higher error rates on handwritten text versus typed text shows you exactly where to focus improvements.

- Monitor response time variations to identify performance bottlenecks. Users expect quick answers, so if your AI takes 8 seconds to process certain queries while others take 2 seconds, you've found a pain point worth addressing.

- Compare adoption rates between departments to uncover resistance patterns. When marketing embraces your AI tools at 85% while operations sits at 35%, dig into the reasons behind this gap.

- Analyze user feedback scores by feature to prioritize fixes. Features consistently rated below 6/10 deserve immediate attention, while those above 8/10 might need only minor tweaks.

- Map training completion rates against performance metrics to spot knowledge gaps. Low completion rates often correlate with poor AI utilization and results.

- Track the frequency of manual overrides to identify where AI decisions lack user confidence. High override rates signal that users don't trust the AI's judgment in specific scenarios.

- Measure the time spent on AI-assisted tasks versus manual methods to quantify real efficiency gains. The 85% of AI projects that fail to deliver ROI often miss this critical comparison.

- Chart help desk tickets related to AI tools to spot recurring issues. Clusters of similar questions point to confusing interfaces or processes.

- Cross-reference user personas with adoption metrics to find which user types struggle most. Different user groups often experience entirely different pain points with the same system.
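Two of the checks in the list above lend themselves to a quick script: comparing adoption between departments and flagging features rated below 6/10. In this sketch the marketing and operations rates echo the example in the list, while the sales rate, the feature names, the ratings, and the 20-point gap threshold are all assumptions for illustration.

```python
from statistics import mean

# Adoption rate per department, in percent (sales is a hypothetical addition).
dept_adoption = {"marketing": 85.0, "operations": 35.0, "sales": 60.0}

# Hypothetical 1-10 feedback scores collected per feature.
feature_ratings = {
    "summarize": [8, 9, 7],
    "search": [4, 5, 6],
    "export": [6, 5, 5],
}


def adoption_gaps(rates: dict[str, float], max_gap: float = 20.0) -> dict[str, float]:
    """Departments trailing the best department by more than `max_gap` points."""
    best = max(rates.values())
    return {dept: best - rate for dept, rate in rates.items() if best - rate > max_gap}


def low_rated_features(ratings: dict[str, list[int]], threshold: float = 6.0) -> list[str]:
    """Features whose average rating falls below `threshold`, sorted by name."""
    return sorted(name for name, scores in ratings.items() if mean(scores) < threshold)


print("Adoption gaps:", adoption_gaps(dept_adoption))
print("Needs attention:", low_rated_features(feature_ratings))
```

The output is a to-do list, not a diagnosis. The gap tells you which department to interview, and the conversation tells you why.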

Strategies for Ongoing Optimization

AI systems aren't "set it and forget it" tools; they need constant fine-tuning to deliver maximum value. Let's explore practical ways to transform your AI metrics into concrete actions that boost your bottom line.

- Schedule weekly AI performance reviews to spot trends before they become problems.

- Create a dedicated Slack channel where team members can report AI hiccups or suggest improvements.

- Use Auto Insights tools to monitor performance metrics and get actionable recommendations without manual analysis.

- Implement A/B testing for AI-generated content to determine which approaches drive better engagement.

- Track financial impact by comparing pre-AI and post-AI implementation costs across departments.

- Develop a "quick win" dashboard that highlights areas where AI has made immediate positive impacts.

- Identify data gaps through regular audits of AI decision patterns and error logs.

- Fix algorithm drift by retraining models with fresh data every quarter.

- Measure the direct impact of AI-generated recommendations on sales growth and conversion rates.

- Build cross-functional optimization teams that include both technical and business stakeholders.

- Set up automatic alerts when AI performance drops below certain thresholds.

- Document all optimization efforts in a central knowledge base for future reference.

- Run monthly "AI failure parties" where teams can safely discuss what went wrong and brainstorm fixes.

- Calculate the ROI of each optimization effort to prioritize future improvements.

- Partner with vendors to get early access to algorithm updates that might boost performance.

Continuous monitoring of these AI performance metrics supports both impact assessment and ROI analysis.
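The "automatic alerts" item from the list can start as a plain threshold check that runs on a schedule. This is a minimal sketch: the metric names, limits, and current readings are hypothetical, and how the alerts get delivered (email, Slack, or a dashboard) is left out.

```python
from dataclasses import dataclass


@dataclass
class Threshold:
    metric: str
    limit: float
    higher_is_better: bool = True


def check_thresholds(metrics: dict[str, float], thresholds: list[Threshold]) -> list[str]:
    """Return one alert message per breached threshold or missing metric."""
    alerts = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is None:
            alerts.append(f"{t.metric}: no data reported")
            continue
        breached = value < t.limit if t.higher_is_better else value > t.limit
        if breached:
            alerts.append(f"{t.metric}: {value} breaches limit {t.limit}")
    return alerts


# Hypothetical limits and the latest readings.
thresholds = [
    Threshold("f1_score", 0.80),
    Threshold("uptime_percent", 99.9),
    Threshold("p95_latency_seconds", 3.0, higher_is_better=False),
]
latest = {"f1_score": 0.76, "uptime_percent": 99.95, "p95_latency_seconds": 2.1}

alerts = check_thresholds(latest, thresholds)
for alert in alerts:
    print("ALERT:", alert)
```

Treating a missing metric as an alert is a deliberate choice here, since a silent data gap can hide a real performance drop.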

Conclusion

Tracking AI adoption goes beyond sophisticated charts and numbers. Your metrics should narrate a story about genuine business impact through user adoption rates, time savings, and ROI.

Forward-thinking companies establish AI champions networks to disseminate knowledge and address resistance directly. The most effective metrics combine hard data with qualitative insights from actual users.

Avoid getting caught in analysis paralysis; take action on what your metrics reveal by addressing pain points and continuously optimizing systems. Be aware that AI adoption metrics evolve as your implementation matures, so maintain flexibility with your measurement approach.

Your AI journey requires both a compass and a map, and proper tracking provides both while keeping your team focused on what truly matters: creating value that justifies your investment.

FAQs

1. How do companies measure AI adoption success?

Companies track AI adoption through key metrics like ROI, productivity gains, and error reduction rates. They also look at user adoption rates and how well the AI tools solve real business problems. The best tracking methods combine hard numbers with feedback from the people using the systems daily.

2. What metrics matter most when tracking AI implementation?

Cost savings and revenue growth top the list for most businesses. User satisfaction scores tell you if people actually like the tools. Time saved on routine tasks shows if the AI is making work easier.

3. How often should we review our AI performance metrics?

Monthly reviews work for most teams. Big projects might need weekly check-ins during the early stages. The goal isn't constant monitoring but catching problems before they grow too large.

4. Can small businesses track AI success without fancy tools?

Absolutely! Small businesses can track basic metrics like time saved and customer satisfaction without special software. A simple spreadsheet works fine for tracking the before-and-after impact of your AI tools. Just pick 3-5 key numbers that matter to your specific goals.


References and Citations

Disclosure: This content is informational and is not a substitute for professional financial advice or implementation strategies. No affiliate or sponsorship relationships are in effect.

References

- https://medium.com/@adnanmasood/measuring-the-effectiveness-of-ai-adoption-definitions-frameworks-and-evolving-benchmarks-63b8b2c7d194

- https://cloud.google.com/transform/gen-ai-kpis-measuring-ai-success-deep-dive

- https://www.moesif.com/blog/technical/api-development/5-AI-Product-Metrics-to-Track-A-Guide-to-Measuring-Success/ (2024-07-25)

- https://www.worklytics.co/blog/tracking-employee-ai-adoption-which-metrics-matter

- https://chooseacacia.com/measuring-success-key-metrics-and-kpis-for-ai-initiatives/

- https://www.sandtech.com/insight/a-practical-guide-to-measuring-ai-roi/ (2025-01-10)

- https://tech-stack.com/blog/roi-of-ai/ (2024-08-21)

- https://www.sciencedirect.com/science/article/pii/S0304405X2300185X

- https://wjaets.com/sites/default/files/WJAETS-2024-0329.pdf (2024-07-29)

- https://realmb2b.com/how-to-become-an-ai-champion-leading-the-way/ (2025-02-18)

- https://harsha-srivatsa.medium.com/measuring-the-success-of-ai-products-key-metrics-and-frameworks-e65f49814ed2

- https://www.catman.global/blog/how-to-use-ai-to-turn-insights-into-strategic-recommendations/